Type support: getting started with syslog-ng 4.0

Version 4.0 of syslog-ng is right around the corner. It hasn’t yet been released; however, you can already try some of its features. The largest and most interesting change is type support. Right now, name-value pairs within syslog-ng are represented as text, even if the PatternDB or JSON parsers could see the actual type of the incoming data. This does not change, but starting with 4.0, syslog-ng will keep the type information, and use it correctly on the destination side. This makes your life easier, for example when you store numbers to Elasticsearch or to other type-aware storage.

From this blog, you can learn how type support makes your life easier, and it helps you to give it a test drive on your own hosts: https://www.syslog-ng.com/community/b/blog/posts/type-support-getting-started-with-syslog-ng-4-0
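To illustrate why keeping type information matters on the destination side, here is a minimal Python sketch. It is not syslog-ng code or configuration: the field names, the type hints and the `TYPE_CASTS` table are all made up for illustration. It contrasts serializing every name-value pair as text with serializing each value according to a remembered type hint, which is the kind of JSON a type-aware store can index as numbers.

```python
import json

# Hypothetical name-value pairs: values are kept as text, but each one
# carries a type hint remembered from the parser.
pairs = {
    "user": ("alice", "string"),
    "bytes_sent": ("1024", "int"),
    "duration": ("0.25", "float"),
    "cached": ("true", "bool"),
}

# Casts applied on the destination side, keyed by type hint (illustrative only).
TYPE_CASTS = {
    "string": str,
    "int": int,
    "float": float,
    "bool": lambda text: text.lower() == "true",
}


def format_untyped(nv_pairs):
    """Serialize every value as a string, ignoring the type hints."""
    return json.dumps({name: text for name, (text, _hint) in nv_pairs.items()})


def format_typed(nv_pairs):
    """Serialize each value according to its remembered type hint."""
    return json.dumps(
        {name: TYPE_CASTS[hint](text) for name, (text, hint) in nv_pairs.items()}
    )


untyped = format_untyped(pairs)
typed = format_typed(pairs)
print(untyped)
print(typed)
```

In the untyped output `bytes_sent` is the string `"1024"`; in the typed output it is a JSON number, so range queries and aggregations on the storage side work without extra mapping tricks.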

Discogs

Last week I became a Discogs user. Why? I have been browsing the site for years to find information on albums. Recently I also needed a solution to create an easy to access database of my CD/DVD collection. Right now I am not interested in the marketplace function of Discogs, but that might change in the long term :-)

Information overload

For many years when I searched for an album, the first few hits were from YouTube and Wikipedia. Nowadays the first few results are often from Discogs. While Wikipedia sometimes provides some interesting background information about the creation of an album, Discogs has more structured and uniform information about albums. It also lists the many variants of the same album. Even for artists where I thought that I have all albums in my collection (like Mike Oldfield), I can find albums I have never heard about before. It is also easy to see who a given artist was working with and using TIDAL I can instantly listen to some really interesting (or awful…) music right away.

My collection

I only have a few hundred CDs, but that is already more than I can remember. When I am in a CD shop, I happily buy new CDs from artists I have never heard about before, as I can be sure that I do not already have that disc. However, when it comes to Solaris, Mike Oldfield or Vangelis, I can never be sure if I already have an album. Of course I tried some DIY methods, but it was difficult to maintain the lists and they were never at hand when I really needed them.

Discogs provides an easy to use mobile application to scan bar codes on the back of CDs. This can speed up adding new items to my collection tremendously. Of course not all bar codes are available in Discogs, but until now there was only one CD that I could not find at all. The more difficult part is when it lists dozens of disks for the same bar code: various (re)prints of the same album from around the world. I must admit that I am lazy here and just take an educated guess… I can use the same mobile app to check my collection when away from home.

A few weeks ago I realized that I have a duplicate album, and while entering my collection into Discogs, I discovered another one. I have no plans for selling them, I already know which of my friends would be happy to receive them. But in the long term it could be interesting to buy a few CDs which are otherwise impossible to buy here in Hungary.

Discogs also gives a price estimate for most CDs. It was kind of surprising: some of my most expensive disks are not worth too much anymore, as they were printed in large numbers. On the other hand I have a large collection of Hungarian progrock music, and the price of those is much higher than I paid for them originally.

You can find my collection at https://www.discogs.com/user/pczanik/collection. The list is constantly growing, as I am still just at less than a half of my collection. The next time I visit my favorite CD shop, Periferic Records - Stereo Kft., I will have an easier job when I see a CD from a familiar artist :-)

GNOME 43 Wallpapers

Evolution and design can co-exist happily in the world of desktop wallpapers. It’s desirable to evolve within a set of constraints to create a theme in time, set up a visual brand that doesn’t rely on putting a logo on everything. At the same time it’s healthy to stop once in a while, do a small reflection on what’s perhaps a little dated and do a fresh redesign.

I took extra time this release to focus on refreshing the whole wallpaper set for 43. While the default wallpaper isn’t a big departure from the 3.38 hexagon theme, most of the supplemental wallpapers have been refreshed from the ground up. The video above shows a few glimpses of all the one way streets it took for the default to land back in the hexagons.

GNOME 42 was the first release to ship a bunch of SVG based wallpapers that embraced the flat colors and gradients and benefited from being just a few kilobytes in file size. It was also the first release to ship dark preference variants. All of that continues into 43.

Major change comes from addressing a wide range of display aspect ratios with one wallpaper. 43 wallpapers should work fine on your ultrawides just as well as portrait displays. We also rely on WebP as the file format getting a much better quality with a nice compression ratio (albeit lossy).

What’s still missing are photographic wallpapers captured under different lighting. Hopefully next release.

Blender’s geometry nodes is an amazing tool to do generative art, yet I feel like I’ve already forgotten the small fraction of what can be done that I’ve learned during this cycle. Luckily there’s always the next release to do some repetition. Thanks to everyone following my struggles on the twitch streams.

The release is dedicated to Thomas Wood, a long time maintainer of all things visual in GNOME.

Announcing the availability of two openSUSE mirrors in Mauritius

Yesterday, I attended an open source event organised by OSCA Mauritius and OceanDBA.

I was invited to speak at the event and I chose to explain a little about openSUSE, its different distributions and how we have managed to set up two mirrors to improve the performance of openSUSE updates in Mauritius.

Girish is a representative for OSCA Mauritius and he works at OceanDBA. He put all the effort into organising this event. At about 09h30, the conference room at Flying Dodo was almost full. Girish welcomed everyone and introduced the presentation themes for the day.

First presentation – Linux Kernel history by Chittesh Sham

Chittesh is a DevOps Engineer at Corel Corporation. He did a presentation on the Linux Kernel. He tried to summarise three decades of Linux history in one presentation. It was a very informative and fun prez.

Second presentation – Bash Scripting by Shravan Dwarka

Shravan is a Linux System Engineer at OceanDBA. He did a presentation on the Bourne Again SHell (Bash).

After explaining about Shells, Shravan gave a scenario where a database administrator had to automate backups and perform backup retention.

He then explained line by line how he wrote a Bash script to execute the backup.
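The script from the talk is not reproduced here. As a rough illustration of the retention half of that scenario, the following Python sketch (not Shravan's Bash script) deletes backup files older than a given number of days. The file names, the seven-day window and the helper names `expired_backups` and `apply_retention` are assumptions made for the example.

```python
import os
import tempfile
import time
from pathlib import Path

SECONDS_PER_DAY = 86400


def expired_backups(backup_dir, keep_days, now=None):
    """Return backup files whose modification time is older than keep_days."""
    now = time.time() if now is None else now
    cutoff = now - keep_days * SECONDS_PER_DAY
    return sorted(
        path
        for path in Path(backup_dir).iterdir()
        if path.is_file() and path.stat().st_mtime < cutoff
    )


def apply_retention(backup_dir, keep_days, now=None):
    """Delete expired backups and return the names of the removed files."""
    removed = []
    for path in expired_backups(backup_dir, keep_days, now):
        path.unlink()
        removed.append(path.name)
    return removed


# Demo in a throwaway directory: one dump is ten days old, one is fresh.
with tempfile.TemporaryDirectory() as tmp:
    old = Path(tmp, "db-old.sql.gz")
    new = Path(tmp, "db-new.sql.gz")
    old.write_text("old dump")
    new.write_text("new dump")
    ten_days_ago = time.time() - 10 * SECONDS_PER_DAY
    os.utime(old, (ten_days_ago, ten_days_ago))

    removed = apply_retention(tmp, keep_days=7)
    remaining = sorted(p.name for p in Path(tmp).iterdir())

print("removed:", removed)
print("remaining:", remaining)
```

Separating "find what is expired" from "delete it" makes it easy to do a dry run first, which is a sensible habit for anything that removes backups.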

Final presentation – openSUSE Mirrors in Mauritius

My presentation was last. It's been a while since I set up two openSUSE mirrors in Mauritius. Any openSUSE user on the island will now benefit from fast updates without having to configure anything on their openSUSE machine.

This event was an opportunity to make a public announcement about the mirrors and explain the process behind them. That is, how I contacted sponsors for server/bandwidth in Mauritius and got in touch with the openSUSE Heroes to build the repo mirrors.

However, first I did a history lesson about S.u.S.E, SUSE and openSUSE. I clarified that openSUSE is a project and the various distributions have names like Leap, Leap Micro, Tumbleweed and MicroOS.

Then, I showed the tickets that I opened at openSUSE to get the ball rolling. Within a few days we had the mirrors set up.

I mentioned that the domain opensuse.mu is sponsored by the openSUSE project itself. Then, the Heroes created the records to point the domain & sub-domains to servers in Mauritius, sponsored by cloud.mu and Rogers Capital Technology Services.

While speaking about the mirrors, I explained how MirrorBrain works. It is an open source framework to run a content delivery network for mirror servers. The two openSUSE mirrors in Mauritius are mirror.opensuse.mu and mirror.rcts.opensuse.mu. To show how MirrorBrain works with these two servers, I opened get.opensuse.org to download the latest release of openSUSE Leap 15.4.

I selected the offline installation image and we checked the URL from which the image was downloading. It was the Rogers Capital Technology Services (RCTS) mirror server.
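The redirect seen in that demo can be pictured with a small Python sketch. This is a simplification under stated assumptions, not MirrorBrain's code: the real framework considers more signals than the client's country, and the weights, the `mirror.example.org` entry and the function names below are hypothetical. Only the two `.mu` hostnames come from the text.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Mirror:
    host: str
    country: str  # country code where the mirror is hosted
    weight: int   # relative share of traffic (made-up values)


# Illustrative mirror table; only the two .mu hostnames come from the post.
MIRRORS = [
    Mirror("mirror.opensuse.mu", "MU", 1),
    Mirror("mirror.rcts.opensuse.mu", "MU", 1),
    Mirror("mirror.example.org", "DE", 1),  # hypothetical mirror elsewhere
]


def pick_mirror(client_country, mirrors, rng):
    """Prefer mirrors in the client's country; otherwise consider all of them."""
    local = [m for m in mirrors if m.country == client_country]
    candidates = local or list(mirrors)
    weights = [m.weight for m in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


def redirect_url(path, client_country, mirrors=MIRRORS, rng=None):
    """Build the URL a download request would be redirected to."""
    rng = rng or random.Random()
    mirror = pick_mirror(client_country, mirrors, rng)
    return f"https://{mirror.host}/{path.lstrip('/')}"


# A client in Mauritius asking for an example path.
url = redirect_url("/distribution/leap/15.4/iso/", "MU", rng=random.Random(0))
print(url)
```

With two mirrors in the same country, either one may be chosen for a given request, which matches what happened in the demo: the download happened to come from the RCTS server.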

I SSH'ed on the cloud.mu mirror server to show how the rsync is done from the restricted rsync server of openSUSE.org. I did not SSH on the RCTS server because it requires a VPN (RCTS engineers take security very seriously 🤓). My VPN setup was on a different machine than the one I was doing my presentation on.

I explained the difference between rsync.opensuse.org and stage.opensuse.org, the former being a public rsync server. It can be used by anyone wishing to run a private openSUSE mirror for home or office use.

The conference room was packed with attendees which is a promising thing for an open source event.

At the end of the event, Joffrey Michaïe, the founder and CEO of OceanDBA addressed the attendees. He thanked the wonderful audience for showing up to the event. Joffrey explained that OceanDBA's business is built on open source technologies and that they have the open source philosophy at the heart of the company.

He was glad to see a lot of young folks attending the event and said that his company would be keen to sponsor such events again in the future. He mentioned that at the moment there are about fifteen open positions at OceanDBA and anyone wishing to apply could reach out to him directly or talk to any other personnel of the company.

The Managing Director of Rogers Capital Technology Services, Dev Hurkoo, and Roshan Patroo, Manager at RCTS, attended the event. We met after my presentation. They both told me that they were impressed by the attendee turn-out for an open source event. Dev expressed his interest to support open source activities in the future too.

openSUSE Tumbleweed – Review of the weeks 2022/29-31

Dear Tumbleweed users and hackers,

I was in the fortunate situation of enjoying two weeks of offline time. Took a little bit of effort, but I did manage to not start my computer a single time (ok, I cheated, checked emails, and staging progress on the phone browser). During this time, Richard has been taking good care of Tumbleweed – with the limitations that were put upon him, like reduced OBS worker powers and the like. In any case, I still do want to give you an overview of what changed in Tumbleweed during those three weeks. There was a total of 8 snapshots released (0718, 0719, 0725, 0728, 0729, 0731, 0801, 0802). A few of those snapshots have only been published, but no announcement emails were sent out, as there were also some mailman issues on the factory mailing list.

Those snapshots accumulated the following changes:

- Linux kernel 5.18.11

- Pipewire 0.3.55 & 0.3.56

- nvme-cli 2.1~rc0

- XOrg X11 Server 21.1.4

- ffmpeg 5.1

- qemu 7.0

- AppArmor 3.0.5

- Poppler 22.07.0

- polkit: split out pkexec into separate package to make system hardening easier (to avoid installing it; jsc#PED-132, jsc#PED-148).

The next snapshot being tested is currently 0804, which mostly looks good with some ‘weird’ things around transactional servers. This snapshot and the current state of staging projects promise to deliver these items soon (for any random value of time to fit into ‘soon’):

- Mesa 22.1.4

- Mozilla Firefox 103.0.1

- AppArmor 3.0.6

- gdb 12.1

- Linux kernel 5.18.15, followed by 5.19

- libvirt 8.6.0

- nvme-cli 2.1.1 (out of RC phase)

- KDE Plasma 5.25.4

- Samba 4.16.4

- Postfix 3.7.2

- RPM 4.17.1, with some major rework on the spec (i.e. previously bundled things like debugedit and python-rpm-packaging are split out)

- python-setuptools 63.2.0

- Python 3.10.6

- CMake 3.24.0

An Update

There have been a lot of changes going on for me in the past few months. Without going onto a lot of details that I would rather not share, I’ve changed a lot in my personal and online life and I’ve taken on some new interests and possible changes in my future.

This blog has been running in one form or another for many years and I don’t want to get rid of it, but it will be mainly focused on things that interest me in the Usenet world.

My new blog is https://blog.syntopicon.info and it will be my new general-interest blog but also focused on my other upcoming interests that I’m not going to share here as much.

This blog is being moved to https://blog.theuse.net

By the way, why did I start hosting my own wordpress server again when I have an account on wordpress.com? Because wordpress.com sucks. You can no longer create new blogs without a paid account. For the same cost as a paid account, I was able to buy a VPS server and have total control of everything and have plenty of resources left over to restart my usenet server, gemini server, and other services.

Xen, QEMU update in Tumbleweed

openSUSE Tumbleweed has released five snapshots since last Thursday.

Besides those listed in the headline, the packages updated this week included curl, ffmpeg, fetchmail, vim and more.

Snapshot 20220802 was released a couple hours ago and updated just four packages. The update of webkit2gtk3 2.36.5 fixed video playback for the Yelp browser. It and webkit2gtk3-soup2 also fixed a couple Common Vulnerabilities and Exposures. An update of yast2-trans provided some Slovak translations.

The update of xen 4.16.1_06 arrived in snapshot 20220801 and it offered several patches. One of those was a fix for a GNU Compiler Collection 13 compilation error, and xen also addressed a CVE: CVE-2022-33745 involved the wrong use of a variable due to a code move, which led to a wrong TLB flush condition. Another of the packages to arrive in the snapshot was an update of fetchmail 6.4.32; the package updated translations and added a patch to clean up some scripts. Many changes were made in the mozilla-nss 3.80 update, which added a few certificates and support for asynchronous client auth hooks. The package also removed the Hellenic Academic 2011 root certificate. Terminal multiplexer tmux updated to 3.3a and added systemd socket activation support, which can be built with --enable-systemd.

Snapshot 20220731 had many packages updated. ImageMagick jumped a few minor versions to 7.1.0.44. The imaging package eliminated some warnings and a possible buffer overflow. The curl 7.84.0 update deleted two obsolete OpenSSL options and fixed four CVEs. Daniel Stenberg’s video went over CVE-2022-32205 at length, which could have effectively allowed a sibling site to cause a denial of service. An update of kdump fixed network-related dracut handling for Firmware Assisted Dump. An update of codec2 version 1.0.5 fixed a FreeDV Application Programming Interface backward compatibility issue in the previous minor version. An update of inkscape 1.2.1 fixed five crashes and more than 25 bugs, and improved 15 user-interface translations. PDF rendering library poppler updated to version 22.07.0 and fixed a crash when filling in forms in some files. It also added gpg keyring validation for the release tarball. The 2.3.7 version of gpg2 fixed CVE-2022-34903 that, in unusual situations, could allow a signature forgery via injection into the status line. Other key packages to update in the snapshot were unbound 1.16.1, libstorage-ng 4.5.33, yast2-bootloader 4.5.2 and kernel-firmware 20220714.

The 20220729 snapshot delivered yast2 4.5.10, which jumped four minor versions; the new version added a method for finding a package according to a pattern and fixed libzypp initialization. Text editor vim 9.0.0073 fixed CVE-2022-2522 and a couple of compiler warnings. Linux Kernel security module AppArmor 3.0.5 fixed a build error, had several profile and abstraction additions and removed several upstreamed patches. Both GCC 12 and ceph had some minor git updates with versions 12.1.1 and 16.2.9 respectively.

The 20220728 snapshot had two major version updates. The 7.0 version of qemu had a substantial rework of the spec files and properly fixed CVE-2022-0216. The generic emulator and virtualizer had several RISC-V additions: support for KVM and enablement of the Hypervisor extension by default. The package also added new audio-dbus and ui-dbus subpackages, according to the changelog. The other major release was adobe-sourcehanserif-fonts 2.001. The new version added Hong Kong specific subset fonts and variable fonts for all regions for the decorative font. Another package to update in the snapshot was ffmpeg. The 5.1 version brought in IPFS protocol support and removed the X-Video Motion Compensation hardware acceleration. The snapshot also updated bind 9.18.5, sqlite3 3.39.2, virtualbox 6.1.36, zypper 1.14.55 and many other packages.

Paying technical debt in our accessibility infrastructure - Transcript from my GUADEC talk

At GUADEC 2022 in Guadalajara I gave a talk, Paying technical debt in our accessibility infrastructure. This is a transcript for that talk.

The video for the talk starts at 2:25:06 and ends at 3:07:18; you can click on the image above and it will take you to the correct timestamp.

Hi there! I'm Federico Mena Quintero, pronouns he/him. I have been working on GNOME since its beginning. Over the years, our accessibility infrastructure has acquired a lot of technical debt, and I would like to help with that.

For people who come to GUADEC from richer countries, you may have noticed that the sidewalks here are pretty rubbish. This is a photo from one of the sidewalks in my town. The city government decided to install a bit of tactile paving, for use by blind people with canes. But as you can see, some of the tiles are already missing. The whole thing feels lacking in maintenance and unloved. This is a metaphor for the state of accessibility in many places, including GNOME.

This is a diagram of GNOME's accessibility infrastructure, which is also the one used on Linux at large, regardless of desktop. Even KDE and other desktop environments use "atspi", the Assistive Technology Service Provider Interface.

The diagram shows the user-visible stuff at the top, and the infrastructure at the bottom. In subsequent slides I'll explain what each component does. In the diagram I have grouped things in vertical bands like this:

- gnome-shell, GTK3, Firefox, and LibreOffice ("old toolkits") all use atk and atk-adaptor, to talk via DBus, to at-spi-registryd and assistive technologies like screen readers.

- More modern toolkits like GTK4, Qt5, and WebKit talk DBus directly instead of going through atk's intermediary layer.

- Orca and Accerciser (and Dogtail, which is not in the diagram) are the counterpart to the applications; they are the assistive tech that is used to perceive applications. They use libatspi and pyatspi2 to talk DBus, and to keep a representation of the accessible objects in apps.

- Odilia is a newcomer; it is a screen reader written in Rust, that talks DBus directly.

The diagram has red bands to show where context switches happen when applications and screen readers communicate. For example, whenever something happens in gnome-shell, there is a context switch to dbus-daemon, and another context switch to Orca. The accessibility protocol is very chatty, with a lot of going back and forth, so these context switches probably add up — but we don't have profiling information just yet.

There are many layers of glue in the accessibility stack: atk, atk-adaptor, libatspi, pyatspi2, and dbus-daemon are things that we could probably remove. We'll explore that soon.

Now, let's look at each component separately.

For simplicity, let's look just at the path of communication between gnome-shell and Orca. We'll have these components involved: gnome-shell, atk, atk-adaptor, dbus-daemon, libatspi, pyatspi2, and finally Orca.

Gnome-shell implements its own toolkit, St, which stands for "shell toolkit". It is made accessible by implementing the GObject interfaces in atk. To make a toolkit accessible means adding a way to extract information from it in a standard way; you don't want screen readers to have separate implementations for GTK, Qt, St, Firefox, etc. For every window, regardless of toolkit, you want to have a "list children" method. For every widget you want "get accessible name", so for a button it may tell you "OK button", and for an image it may tell you "thumbnail of file.jpg". For widgets that you can interact with, you want "list actions" and "run action X", so a button may present an "activate" action, and a check button may present a "toggle" action.
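The idea of "a standard way to extract information" can be sketched as a small abstract interface. The Python below is only an illustration of the concept: the class and method names are made up and do not correspond to atk's actual GObject interfaces.

```python
from abc import ABC, abstractmethod


class Accessible(ABC):
    """Toolkit-neutral view of a widget (hypothetical interface, not atk's)."""

    @abstractmethod
    def get_accessible_name(self):
        """Return a human-readable name for the widget."""

    def list_children(self):
        return []

    def list_actions(self):
        return []

    def run_action(self, name):
        raise ValueError(f"unsupported action: {name}")


class Button(Accessible):
    def __init__(self, label):
        self.label = label
        self.activated = False

    def get_accessible_name(self):
        return f"{self.label} button"

    def list_actions(self):
        return ["activate"]

    def run_action(self, name):
        if name != "activate":
            raise ValueError(f"unsupported action: {name}")
        self.activated = True


class CheckButton(Accessible):
    def __init__(self, label):
        self.label = label
        self.checked = False

    def get_accessible_name(self):
        return f"{self.label} check button"

    def list_actions(self):
        return ["toggle"]

    def run_action(self, name):
        if name != "toggle":
            raise ValueError(f"unsupported action: {name}")
        self.checked = not self.checked


class Window(Accessible):
    def __init__(self, title, children):
        self.title = title
        self.children = children

    def get_accessible_name(self):
        return self.title

    def list_children(self):
        return list(self.children)


def describe(node, depth=0):
    """Walk the widget tree using only the generic interface."""
    lines = ["  " * depth + node.get_accessible_name()]
    for child in node.list_children():
        lines.extend(describe(child, depth + 1))
    return lines


ok = Button("OK")
remember = CheckButton("Remember choice")
window = Window("Save dialog", [ok, remember])
print("\n".join(describe(window)))
remember.run_action(remember.list_actions()[0])
```

The `describe` function never needs to know which toolkit or widget class it is talking to, which is the property a screen reader relies on.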

However, ATK is just abstract interfaces for the benefit of toolkits. We need a way to ship the information extracted from toolkits to assistive tech like screen readers. The atspi protocol is a set of DBus interfaces that an application must implement; atk-adaptor is an implementation of those DBus interfaces that works by calling atk's slightly different interfaces, which in turn are implemented by toolkits. Atk-adaptor also caches some things that it already asked to the toolkit, so it doesn't have to ask again unless the toolkit notifies about a change.

Does this seem like too much translation going on? It is! We will see the reasons behind that when we talk about how accessibility was implemented many years ago in GNOME.

So, atk-adaptor ships the information via the DBus daemon. What's on the other side? In the case of Orca it is libatspi, a hand-written binding to the DBus interfaces for accessibility. It also keeps an internal representation of the information that it got shipped from the toolkit. When Orca asks, "what's the name of this widget?", libatspi may already have that information cached. Of course, the first time it does that, it actually goes and asks the toolkit via DBus for that information.
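The caching behaviour described here, ask once, answer from the cache afterwards, and drop the entry when the toolkit announces a change, can be sketched as follows. This is a conceptual Python model, not libatspi or atk-adaptor code; `FakeToolkit`, `CachingClient` and the widget identifier are hypothetical, and a plain method call stands in for a DBus round trip.

```python
class FakeToolkit:
    """Stands in for an application reached over DBus (hypothetical)."""

    def __init__(self):
        self.names = {"button1": "OK button"}
        self.round_trips = 0
        self.listeners = []

    def query_name(self, widget_id):
        # Each call models one expensive round trip to the application.
        self.round_trips += 1
        return self.names[widget_id]

    def rename(self, widget_id, new_name):
        self.names[widget_id] = new_name
        # Notify interested parties that the name changed.
        for listener in self.listeners:
            listener(widget_id)


class CachingClient:
    """Answers from a local cache and invalidates on change notifications."""

    def __init__(self, toolkit):
        self._toolkit = toolkit
        self._names = {}
        toolkit.listeners.append(self._on_changed)

    def _on_changed(self, widget_id):
        self._names.pop(widget_id, None)

    def get_name(self, widget_id):
        if widget_id not in self._names:
            self._names[widget_id] = self._toolkit.query_name(widget_id)
        return self._names[widget_id]


toolkit = FakeToolkit()
client = CachingClient(toolkit)

first = client.get_name("button1")
second = client.get_name("button1")   # served from the cache
trips_before_change = toolkit.round_trips

toolkit.rename("button1", "Save button")
third = client.get_name("button1")    # cache was invalidated, asks again
trips_after_change = toolkit.round_trips
print(first, second, third, trips_before_change, trips_after_change)
```

The cache is only correct as long as every change is announced; a missed notification leaves a stale name behind.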

But Orca is written in Python, and libatspi is a C library. Pyatspi2 is a Python binding for libatspi. Many years ago we didn't have an automatic way to create language bindings, so there is a hand-written "old API" implemented in terms of the "new API" that is auto-generated via GObject Introspection from libatspi.

Pyatspi2 also has a bit of logic which should probably not be there, but rather in Orca itself or in libatspi.

Finally we get to Orca. It is a screen reader written in 120,000 lines of Python; I was surprised to see how big it is! It uses the "old API" in pyatspi2.

Orca uses speech synthesis to read out loud the names of widgets, their available actions, and generally any information that widgets want to present to the user. It also implements hotkeys to navigate between elements in the user interface, or a "where am I" function that tells you where the current focus is in the widget hierarchy.

Sarah Mei tweeted "We think awful code is written by awful devs. But in reality, it's written by reasonable devs in awful circumstances."

What were those awful circumstances?

Here I want to show you some important events surrounding the infrastructure for development of GNOME.

We got a CVS server for revision control in 1997, and a Bugzilla bug tracker in 1998 when Netscape freed its source code.

Also around 1998, Tara Hernandez basically invented Continuous Integration while at Mozilla/Netscape, in the form of Tinderbox. It was a build server for Netscape Navigator in all its variations and platforms; they needed a way to ensure that the build was working on Windows, Mac, and about 7 flavors of Unix that still existed back then.

In 2001-2002, Sun Microsystems contributed the accessibility code for GNOME 2.0. See Emmanuele Bassi's talk from GUADEC 2020, "Archaeology of Accessibility" for a much more detailed description of that history (LWN article, talk video).

Sun Microsystems sold their products to big government customers, who often have requirements about accessibility in software. Sun's operating system for workstations used GNOME, so it needed to be accessible. They modeled the architecture of GNOME's accessibility code on what they already had working for Java's Swing toolkit. This is why GNOME's accessibility code is full of acronyms like atspi and atk, and vocabulary like adapters, interfaces, and factories.

Then in 2006, we moved from CVS to Subversion (svn).

Then in 2007, we get gtestutils, the unit testing framework in Glib. GNOME started in 1996; this means that for a full 11 years we did not have a standard infrastructure for writing tests!

Also, we did not have continuous integration nor continuous builds, nor reproducible environments in which to run those builds. Every developer was responsible for massaging their favorite distro into having the correct dependencies for compiling their programs, and running whatever manual tests they could on their code.

2008 comes and GNOME switches from svn to git.

In 2010-2011, Oracle acquires Sun Microsystems and fires all the people who were working on accessibility. GNOME ends up with approximately no one working on accessibility full-time, when it had about 10 people doing so before.

GNOME 3 happens, and the accessibility code has to be ported in emergency mode from CORBA to DBus.

GitHub appears in 2008, and Travis CI, probably the first generally-available CI infrastructure for free software, appears in 2011. GNOME of course is not developed there, but in its own self-hosted infrastructure (git and cgit back then, with no CI).

Jessie Frazelle invents usable containers 2013-2015 (Docker). Finally there is a non-onerous way of getting a reproducible environment set up. Before that, who had the expertise to use Yocto to set up a chroot? In my mind, that seemed like a thing people used only if they were working on embedded systems.

But it is not until 2016 that rootless containers become available.

And it is only in 2018 that we get gitlab.gnome.org - a Git-based forge that makes it easy to contribute and review code, and have a continuous integration infrastructure. That's 21 years after GNOME started, and 16 years after accessibility first got implemented.

Before that, tooling is very primitive.

In 2015 I took over the maintainership of librsvg, and in 2016 I started porting it to Rust. A couple of years later, we got gitlab.gnome.org, and Jordan Petridis and myself added the initial CI. Years later, Dunja Lalic would make it awesome.

When I took over librsvg's maintainership, it had few tests which didn't really work, no CI, and no reproducible environment for compilation. The book by Michael Feathers, "Working effectively with legacy code", defines legacy code as "code without tests".

When I started working on accessibility at the beginning of this year, it had few tests which didn't really work, no CI, and no reproducible environment.

Right now, Yelp, our help system, has few tests which don't really work, no CI, and no reproducible environment.

Gnome-session right now has few tests which don't really work, no CI, and no reproducible environment.

I think you can start to see a pattern here...

This is a chart generated by the git-of-theseus tool. It shows how many lines of code got added each year, and how much of that code remained or got displaced over time.
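The data behind such a chart is a table of "how many lines from each year's cohort are still alive at each point in time". The Python sketch below computes that table from made-up data; the real tool derives it from the Git history, whereas the `(year added, year removed)` tuples here are invented purely for illustration.

```python
from collections import Counter

# One tuple per line of code: (year added, year removed or None if still present).
# Entirely made-up data.
LINES = [
    (2015, 2017),
    (2015, None),
    (2016, 2017),
    (2017, None),
    (2017, None),
]


def cohort_survival(lines, years):
    """For each year, count surviving lines grouped by the year they were added."""
    table = {}
    for year in years:
        alive = Counter(
            added
            for added, removed in lines
            if added <= year and (removed is None or removed > year)
        )
        table[year] = dict(sorted(alive.items()))
    return table


survival = cohort_survival(LINES, [2015, 2016, 2017])
for year, cohorts in survival.items():
    print(year, cohorts)
```

Stacking the per-cohort counts for each year gives the layered picture: a cohort band that thins out over time is old code being replaced, and one that stays flat is code nobody touches.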

For a project with constant maintenance, like GTK, you get a pattern like in the chart above: the number of lines of code increases steadily, and older code gradually diminishes as it is replaced by newer code.

For librsvg the picture is different. It was mostly unmaintained for a few years, so the code didn't change very much. But when it got gradually ported to Rust over the course of three or four years, what the chart shows is that all the old code shrinks to zero while new code replaces it completely. That new code has constant maintenance, and it follows the same pattern as GTK's.

Orca is more or less the same as GTK, although with much slower replacement of old code. More accretion, less replacement. That big jump before 2012 is when it got ported from the old CORBA interfaces for accessibility to the new DBus ones.

This is an interesting chart for at-spi2-core. During the GNOME2 era, when accessibility was under constant maintenance, you can see the same "constant growth" pattern. Then there is a lot of removal and turmoil in 2009-2010 as DBus replaces CORBA, followed by quick growth early in the GNOME 3 era, and then just stagnation as the accessibility team disappeared.

How do we start fixing this?

The first thing is to add continuous integration infrastructure (CI). Basically, tell a robot to compile the code and run the test suite every time you "git push".

I copied the initial CI pipeline from libgweather, because Emmanuele Bassi had recently updated it there, and it was full of good toys for keeping C code under control: static analysis, address sanitizer, code coverage reports, documentation generation. It was also a CI pipeline for a Meson-based project; Emmanuele had also ported most of the accessibility modules to Meson while he was working for the GNOME Foundation. Having libgweather's CI scripts as a reference was really valuable.

Later, I replaced that hand-written setup for a base Fedora container image with Freedesktop CI templates, which are AWESOME. I copied that setup from librsvg, where Jordan Petridis had introduced it.

The CI pipeline for at-spi2-core has five stages:

- Build container images so that we can have a reproducible environment for compiling and running the tests.

- Build the code and run the test suite.

- Run static analysis, dynamic analysis, and get a test coverage report.

- Generate the documentation.

- Publish the documentation, and publish other things that end up as web pages.

Let's go through each stage in detail.

First we build a reproducible environment in which to compile the code and run the tests.

Using Freedesktop CI templates, we start with two base images for "empty distros", one for openSUSE (because that's what I use), and Fedora (because it provides a different build configuration).

CI templates are nice because they build the container, install the build dependencies, finalize the container image, and upload it to gitlab's container registry all in a single, automated step. The maintainer does not have to generate container images by hand in their own computer, nor upload them. The templates infrastructure is smart enough not to regenerate the images if they haven't changed between runs.

CI templates are very flexible. They can deal with containerized builds, or builds in virtual machines. They were developed by the libinput people, who need to test all sorts of varied configurations. Give them a try!

The second stage builds the code and runs the test suite. Basically, "meson setup", "meson compile", "meson install", "meson test", but with extra detail to account for the particular testing setup for the accessibility code.
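Stripped of that extra detail, the four Meson commands could be driven from a small Python helper like the one below. This is only a sketch: the real job lives in the project's CI configuration and does more, and the build directory name `_build` and the function names are assumptions.

```python
import subprocess


def build_steps(build_dir="_build"):
    """Return the Meson commands for a plain configure/build/install/test run."""
    return [
        ["meson", "setup", build_dir],
        ["meson", "compile", "-C", build_dir],
        ["meson", "install", "-C", build_dir],
        ["meson", "test", "-C", build_dir, "--print-errorlogs"],
    ]


def run_steps(steps, runner=subprocess.run):
    """Run each command in order, stopping at the first failure."""
    for argv in steps:
        runner(argv, check=True)


# Dry run: record the commands instead of executing them.
recorded = []
run_steps(build_steps(), runner=lambda argv, check: recorded.append(argv))
for argv in recorded:
    print(" ".join(argv))
```

Calling `run_steps(build_steps())` without a custom runner executes the commands for real, and `check=True` makes the first failing step abort the run, as a CI job would.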

One interesting thing is that for example, openSUSE uses dbus-daemon for the accessibility bus, which is different from Fedora, which uses dbus-broker instead.

The launcher for the accessibility bus thus has different code paths and configuration options for dbus-daemon vs. dbus-broker. We can test both configurations in the CI pipeline.

HELP WANTED: Unfortunately, the Fedora test job doesn't run the tests yet! This is because I haven't learned how to run that job in a VM instead of a container — dbus-broker for the session really wants to be launched by systemd, and it may just be easier to have a full systemd setup inside a VM rather than trying to run it inside a "normal" containerized job.

If you know how to work with VM jobs in Gitlab CI, we'd love a hand!

The third stage is thanks to the awesomeness of modern compilers. The low-level accessibility infrastructure is written in C, so we need all the help we can get from our tools!

We run static analysis to catch many bugs at compilation time. Uninitialized variables, trivial memory leaks, that sort of thing.

Also, address-sanitizer. C is a memory unsafe language, so catching pointer mishaps early is really important. Address-sanitizer doesn't catch everything, but it is better than nothing.

Finally, a test coverage job, to see which lines of code managed to get executed while running the test suite. We'll talk a lot more about code coverage in the following slides.

At least two jobs generate HTML and have to publish it: the documentation job, and the code coverage reports. So, we do that, and publish the result with Gitlab pages. This is "static web hosting inside Gitlab", which makes things very easy.

Adding a CI pipeline is really powerful. You can automate all the things you want in there. This means that your whole arsenal of tools to keep code under control can run all the time, instead of only when you remember to run each tool individually, and without requiring each project member to bother with setting up the tools themselves.

The original accessibility code was written before we had a culture of ubiquitous unit tests. Refactoring the code to make it testable makes it a lot better!

In a way, it is rewarding to become the CI person for a project and learn how to make the robots do the boring stuff. It is very rewarding to see other project members start using the tools that you took care to set up for them, because then they don't have to do the same kind of setup.

It is also kind of a pain in the ass to keep the CI updated. But it's the same as keeping any other basic infrastructure running: you cannot think of going back to living without it.

Now let's talk about code coverage.

A code coverage report tells you which lines of code have been executed, and which ones haven't, after running your code. When you get a code coverage report while running the test suite, you see which code is actually exercised by the tests.

Getting to 100% test coverage is very hard, and that's not a useful goal - full coverage does not indicate the absence of bugs. However, knowing which code is not tested yet is very useful!
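To make the idea of line coverage concrete, here is a minimal sketch in Python. It is not how grcov or the compilers' instrumentation work; it only uses Python's `sys.settrace` hook to record which lines of one function run. The names `executed_lines` and `classify` are made up for this illustration.

```python
import sys


def executed_lines(func, *args):
    """Run func(*args) and return the set of executed line offsets.

    Offsets are relative to the 'def' line of func (which is offset 0).
    """
    code = func.__code__
    seen = set()

    def tracer(frame, event, arg):
        # Only follow the frame that belongs to the function under test.
        if frame.f_code is not code:
            return None
        if event == "line":
            seen.add(frame.f_lineno - code.co_firstlineno)
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        # Always restore whatever trace hook was active before.
        sys.settrace(previous)
    return seen


def classify(n):
    if n < 0:                  # offset 1
        return "negative"      # offset 2
    return "non-negative"      # offset 3


covered = executed_lines(classify, 5)
uncovered = {1, 2, 3} - covered
print("covered offsets:", sorted(covered))
print("uncovered offsets:", sorted(uncovered))
```

Calling `classify` only with a positive number leaves the "negative" branch unexecuted, which is exactly the kind of gap a coverage report highlights.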

Code that didn't use to have a good test suite often has many code paths that are untested. You can see this in at-spi2-core. Each row in that toplevel report is for a directory in the source tree, and tells you the percentage of lines within the directory that are executed as a result of running the test suite. If you click on one row, you get taken to a list of files, from which you can then select an individual file to examine.

As you can see here, librsvg has more extensive test coverage. This is because over the last years, we have made sure that every code path gets exercised by the test suite. It's not at 100% yet (and there are bugs in the way we obtain coverage for the c_api, for example, which is why it shows up almost uncovered), but it's getting there.

My goal is to make at-spi2-core's tests equally comprehensive.

Both at-spi2-core and librsvg use Mozilla's grcov tool to generate the coverage reports. Grcov can consume coverage data from LLVM, GCC, and others, and combine them into a single report.

Glib is a much more complex library, and it uses lcov instead of grcov. Lcov is an older tool, not as pretty, but still quite functional (in particular, it is very good at displaying branch coverage).

This is what the coverage report looks like for a single C file. Lines that were executed are in green; lines that were not executed are in red. Lines in white are not instrumented, because they produce no executable code.

The first column is the line number; the second column is the number of times each line got executed. The third column is of course the code itself.

In this extract, you can see that all the lines in the impl_GetChildren() function got executed, but none of the lines in impl_GetIndexInParent() got executed. We may need to write a test that will cause the second function to get executed.

The accessibility code needs to process a bunch of properties in DBus objects. For example, the Python code at the top of the slide queries a set of properties, and compares them against their expected values.

At the bottom, there is the coverage report. The C code that handles each property is indeed executed, but the code for the error path, that handles an invalid property name, is not covered yet; it is color-coded red. Let's add a test for that!

So, we add another test, this time for the error path in the C code. Ask for the value of an unknown property, and assert that we get the correct DBus exception back.

With that test in place, the C code that handles that error case is covered, and we are all green.
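The shape of those two tests can be sketched as follows. This is not the actual test code from at-spi2-core: `FakeAccessible` and `UnknownPropertyError` are hypothetical in-memory stand-ins for a DBus object and the DBus exception, so that the example runs without a bus.

```python
class UnknownPropertyError(Exception):
    """Hypothetical stand-in for the DBus error raised on a bad property name."""


class FakeAccessible:
    """Hypothetical stand-in for an accessible object exposed over DBus."""

    _properties = {"Name": "main window", "ChildCount": 2}

    def get(self, name):
        try:
            return self._properties[name]
        except KeyError:
            # The error path: this is the branch the first test never reaches.
            raise UnknownPropertyError(name) from None


def test_known_properties():
    obj = FakeAccessible()
    assert obj.get("Name") == "main window"
    assert obj.get("ChildCount") == 2


def test_unknown_property_raises():
    obj = FakeAccessible()
    try:
        obj.get("NoSuchProperty")
    except UnknownPropertyError:
        return  # expected: the error path was exercised
    raise AssertionError("expected UnknownPropertyError")


test_known_properties()
test_unknown_property_raises()
```

The first test alone only covers the success path; the second exists purely to drive execution through the error branch.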

What I am doing here is to characterize the behavior of the DBus API, that is, to mirror its current behavior in the tests because that is the "known good" behavior. Then I can start refactoring the code with confidence that I won't break it, because the tests will catch changes in behavior.

By now you may be familiar with how Gitlab displays diffs in merge requests.

One somewhat hidden nugget is that you can also ask it to display the code coverage for each line as part of the diff view. Gitlab can display the coverage color-coding as a narrow gutter within the diff view.

This lets you answer the question, "this code changed, does it get executed by a test?". It also lets you catch code that changed but that is not yet exercised by the test suite. Maybe you can ask the submitter to add a test for it, or it can give you a clue on how to improve your testing strategy.

The trick to enable that is to use the artifacts:reports:coverage_report key in .gitlab-ci.yml. You have your tools create a coverage report in Cobertura XML format, and you give it to Gitlab as an artifact.

See the gitlab documentation on coverage reports for test coverage visualization.

When grcov outputs an HTML report, it creates something that looks and feels like a <table>, but which is not an HTML table. It is just a bunch of nested <div> elements with styles that make them look like a table.

I was worried about how to make it possible for people who use screen readers to quickly navigate a coverage report. As a sighted person, I can just look at the color-coding, but a blind person has to navigate each source line until they find one that was executed zero times.

Eitan Isaacson kindly explained the basics of ARIA tags to me, and suggested how to fix the bunch of <div> elements. First, give them roles like table, row, cell. This tells the browser that the elements are to be navigated and presented to accessibility tools as if they were in fact a <table>.

Then, generate an aria-label for each cell where the report shows the number of times a line of code was executed. For lines not executed, sighted people can see that this cell is just blank, but has color coding; for blind people the aria-label can be "no coverage" or "zero" instead, so that they can perceive that information.
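As a rough illustration of that idea, the sketch below renders one report row as nested <div> elements with ARIA roles and an aria-label on the hit-count cell. It is not grcov's actual template or markup; the function name `coverage_row` and the exact label wording are assumptions made for this example.

```python
from html import escape


def coverage_row(line_number, hits, source):
    """Render one coverage-report row as <div>s that behave like a table row.

    hits is None for lines that are not instrumented, 0 for lines that
    were never executed, or a positive execution count.
    """
    if hits is None:
        label, text = "not instrumented", ""
    elif hits == 0:
        # The visual report shows a blank, red cell; the label says why.
        label, text = "no coverage", ""
    else:
        label, text = f"executed {hits} times", str(hits)

    return (
        '<div role="row">'
        f'<div role="cell">{line_number}</div>'
        f'<div role="cell" aria-label="{label}">{text}</div>'
        f'<div role="cell"><code>{escape(source)}</code></div>'
        "</div>"
    )


print(coverage_row(42, 0, "if (index < 0)"))
```

The enclosing container would carry role="table" in the same way, so that a screen reader offers table navigation over the rows.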

We need to make our development tools accessible, too!

You can see the pull request to make grcov's HTML more accessible.

Speaking of making development tools accessible, Mike Gorse found a bug in how Gitlab shows its project badges. All of them have an alt text of "Project badge", so for someone who uses a screen reader, it is impossible to tell whether the badge is for a build pipeline, or a coverage report, etc. This is as bad as an unlabelled image.

You can see the bug about this in gitlab.com.

One important detail: if you want code coverage information, your processes must exit cleanly! If they die with a signal (SIGTERM, SIGSEGV, etc.), then no coverage information will be written for them and it will look as if your code got executed zero times.

This is because gcc and clang's runtime library writes out the coverage info during program termination. If your program dies before main() exits, the runtime library won't have a chance to write the coverage report.
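The same effect can be demonstrated with a Python analogy. This is only a sketch: instead of the compilers' coverage runtime it uses Python's `atexit` hooks, which likewise run on a clean exit but not when the process is terminated by a signal that it does not handle. The helper name `report_written` and the file name are made up for the example.

```python
import os
import subprocess
import sys
import tempfile

# Child program: registers an exit hook that writes a "report" file,
# the way a coverage runtime writes its data at program termination.
CHILD = r"""
import atexit, sys, time

def write_report():
    with open(sys.argv[1], "w") as f:
        f.write("coverage data")

atexit.register(write_report)
print("ready", flush=True)
if sys.argv[2] == "clean":
    sys.exit(0)      # clean exit: the hook runs
time.sleep(30)       # otherwise wait to be terminated by the parent
"""


def report_written(mode):
    """Run the child in 'clean' or 'killed' mode; return True if it wrote its report."""
    with tempfile.TemporaryDirectory() as tmp:
        report = os.path.join(tmp, "report.txt")
        proc = subprocess.Popen(
            [sys.executable, "-c", CHILD, report, mode],
            stdout=subprocess.PIPE,
            text=True,
        )
        proc.stdout.readline()   # wait until the hook is registered
        if mode == "killed":
            proc.terminate()     # SIGTERM on POSIX systems
        proc.wait()
        proc.stdout.close()
        return os.path.exists(report)


print("clean exit wrote report: ", report_written("clean"))
print("killed child wrote report:", report_written("killed"))
```

The practical consequence is the same as for the C daemons: anything whose coverage you care about has to be told to exit, not killed.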

During a normal user session, the lifetime of the accessibility daemons (at-spi-bus-launcher and at-spi-registryd) is controlled by the session manager.

However, while running those daemons inside the test suite, there is no user session! The daemons would get killed when the tests terminate, so they wouldn't write out their coverage information.

I learned to use Martin Pitt's python-dbusmock to write a minimal mock of gnome-session's DBus interfaces. With this, the daemons think that they are in fact connected to the session manager, and can be told by the mock to exit appropriately. Boom, code coverage.

I want to stress how awesome python-dbusmock is. It took me 80 lines of Python to mock the necessary interfaces from gnome-session, which is pretty great, and can be reused by other projects that need to test session-related stuff.

I am using pytest to write tests for the accessibility interfaces via DBus. Using DBus from Python is really pleasant.

For those tests, a test fixture is "an accessibility registry daemon tied to the session manager". This uses a "session manager fixture". I made the session manager fixture tear itself down by informing all session clients of a session Logout. This causes the daemons to exit cleanly, to get coverage information.

The setup for the session manager fixture is very simple; it just connects to the session bus and acquires the org.gnome.SessionManager name there.

Then we yield mock_session. This makes the fixture present itself to whatever needs to call it.

When the yield comes back, we do the teardown stage. Here we just tell all session clients to terminate, by invoking the Logout method on the org.gnome.SessionManager interface. The mock session manager sends the appropriate signals to connected clients, and the clients (the daemons) terminate cleanly.
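The setup, yield, teardown pattern described above can be sketched without a real bus. The code below is not the fixture from at-spi2-core and does not use python-dbusmock: `FakeSessionManager` and `FakeDaemon` are hypothetical stand-ins, and the generator is driven by hand so the example runs without pytest. In a real test suite, the generator function would be decorated with `@pytest.fixture` and pytest would do the driving.

```python
class FakeDaemon:
    """Hypothetical stand-in for a daemon registered with the session manager."""

    def __init__(self):
        self.running = True
        self.exited_cleanly = False

    def stop(self):
        self.running = False
        self.exited_cleanly = True


class FakeSessionManager:
    """Hypothetical stand-in for the mocked org.gnome.SessionManager."""

    def __init__(self):
        self.name_owned = False
        self.clients = []

    def acquire_name(self):
        self.name_owned = True

    def register_client(self, client):
        self.clients.append(client)

    def logout(self):
        # Tell every session client to terminate.
        for client in self.clients:
            client.stop()


def session_manager():
    # Setup: "connect to the bus" and take the well-known name.
    mock_session = FakeSessionManager()
    mock_session.acquire_name()
    yield mock_session
    # Teardown: runs after the test body has finished.
    mock_session.logout()


def run_with_fixture(test_body):
    """Drive the generator the way a test runner would."""
    fixture = session_manager()
    mock_session = next(fixture)      # run setup, receive the yielded value
    try:
        test_body(mock_session)
    finally:
        next(fixture, None)           # resume after the yield: teardown


daemon = FakeDaemon()
run_with_fixture(lambda session: session.register_client(daemon))
print("daemon exited cleanly:", daemon.exited_cleanly)
```

Putting the Logout call in the teardown half means every test that uses the fixture gets a clean daemon shutdown without having to ask for it.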

I'm amazed at how smoothly this works in pytest.

The C code for accessibility was written by hand, before the time when we had code generators to implement DBus interfaces easily. It is extremely verbose and error-prone; it uses the old libdbus directly and has to piece out every argument to a DBus call by hand.

This code is really hard to maintain. How do we fix it?

What I am doing is to split out the DBus implementations:

- First get all the arguments from DBus - marshaling goo.

- Then, the actual logic that uses those arguments' values.

- Last, construct the DBus result - marshaling goo.

If you know refactoring terminology, I "extract a function" with the actual logic and leave the marshaling code in place. The idea is to do that for the whole code, and then replace the DBus gunk with auto-generated code as much as possible.
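The three-step split can be sketched in Python, even though the real code is C on top of libdbus. Everything here is illustrative: the message is a plain dict rather than a DBus message, and the names `index_in_parent` and `handle_get_index_in_parent` are hypothetical.

```python
def index_in_parent(children, child):
    """Extracted logic: knows nothing about DBus, so it is easy to unit-test."""
    try:
        return children.index(child)
    except ValueError:
        return -1


def handle_get_index_in_parent(message, tree):
    """Method handler: marshaling around a call to the extracted logic."""
    # 1. Get the arguments out of the incoming message (marshaling goo).
    parent = message["parent"]
    child = message["child"]

    # 2. The actual logic, now a plain function call.
    index = index_in_parent(tree.get(parent, []), child)

    # 3. Construct the reply (marshaling goo).
    return {"type": "method_return", "body": [index]}


tree = {"/root": ["a", "b", "c"]}
reply = handle_get_index_in_parent({"parent": "/root", "child": "b"}, tree)
print(reply)
```

Once every handler has this shape, steps 1 and 3 are the mechanical parts that a code generator could take over, while step 2 stays hand-written and tested.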

Along the way, I am writing a test for every DBus method and property that the code handles. This will give me safety when the time comes to replace the marshaling code with auto-generated stuff.

We need reproducible environments to build and test our code. It is not acceptable to say "works on my machine" anymore; you need to be able to reproduce things as much as possible.

Code coverage for tests is really useful! You can do many tricks with it. I am using it to improve the comprehensiveness of the test suite, to learn which code gets executed with various actions on the DBus interfaces, and as an exploratory tool in general while I learn how the accessibility code really works.

Automated builds on every push, with tests, help us keep the code from breaking.

Continuous integration is generally available if we choose to use it. Ask for help if your project needs CI! It can be overwhelming to add it the first time.

Let the robots do the boring work. Constructing environments reproducibly, building the code and running the tests, analyzing the code and extracting statistics from it — doing that is grunt work, and a computer should do it, not you.

"The Not Rocket Science Rule of Software Engineering" is to automatically maintain a repository of code that always passes all the tests. That, with monotonically increasing test coverage, lets you change things with confidence. The rule is described eloquently by Graydon Hoare, the original author of Rust and Monotone.

There is tooling to enforce this rule. For GitHub there is Homu; for Gitlab we use Marge-bot. You can ask the GNOME sysadmins if you would like to turn it on for your project. Librsvg and GStreamer use it very productively. I hope we can start using Marge-bot for the accessibility repositories soon.

The moral of the story is that we can make things better. We have much better tooling than we had in the early 2000s or 2010s. We can fix things and improve the basic infrastructure for our personal computing.

You may have noticed that I didn't talk much about accessibility. I talked mostly about preparing things to be able to work productively on learning the accessibility code and then improving it. That's the stage I'm at right now! I learn code by refactoring it, and all the CI stuff is to help me refactor with confidence. I hope you find some of these tools useful, too.

(That is a photo of me and my dog, Mozzarello.)

I want to thank the people that have kept the accessibility code functioning over the years, even after the rest of their team disappeared: Joanmarie Diggs, Mike Gorse, Samuel Thibault, Emmanuele Bassi.

Work Group Shifts to Feedback Session

Members of openSUSE’s Adaptable Linux Platform (ALP) community workgroup had a successful install workshop on August 2 and are transitioning to two install feedback sessions.

The first feedback session is scheduled to take place on openSUSE’s Birthday on August 9 at 14:30 UTC. The second feedback session is scheduled to take place on August 11 during the community meeting at 19:00 UTC.

Attendees of the workshop were asked to install MicroOS Desktop and temporarily use it. This is being done to gain some feedback on how people use their Operating System, which allows the work groups to develop a frame of reference for how ALP can progress.

The call for people to test spin MicroOS Desktop has received a lot of feedback and the workshop also provided a lot of feedback. One of the comments in the virtual feedback session was “stable base + fresh apps? sign me up”.


Two install videos were posted to the openSUSETV YouTube channel to help get people started with installing and testing MicroOS.

The video Installing Workshop Video (MicroOS Desktop) went over the expectations for ALP and then discussed experiences going through a testing spreadsheet.

The other video, which was not shown during the workshop due to time limitations, was called Installing MicroOS on a Raspberry Pi 400 and gave an overview on how to get MicroOS Desktop with a graphical interface running on the Raspberry Pi.

A final Lucid Presentation is scheduled for August 16 during the regularly scheduled workgroup.

People are encouraged to send feedback to the ALP-community-wg mailing list and to attend the feedback sessions, which will be listed in the community meeting notes.

Users can download the MicroOS Desktop at https://get.opensuse.org/microos/ and see instructions and record comments on the spreadsheet.

YaST Development Report - Chapter 6 of 2022

Time for more news from the YaST-related ALP work groups. As the first prototype of our Adaptable Linux Platform approaches we keep working on several aspects like:

- Improving Cockpit integration and documentation

- Enhancing the containerized version of YaST

- Evolving D-Installer

- Developing and documenting Iguana

It’s a quite diverse set of things, so let’s take it bit by bit.

Cockpit on ALP - the Book

Cockpit has been selected as the default tool to perform 1:1 administration of ALP systems. Easing the adoption of Cockpit on ALP is, therefore, one of the main goals of the 1:1 System Management Work Group. Since clear documentation is key, we created this wiki page explaining how to set up and start using Cockpit on ALP.

The document includes several so-called “development notes” presenting aspects we want to work on in order to improve the user experience and make the process even more straightforward. So stay tuned for more news in that regard.

Improvements in Containerized YaST

As you already know, Cockpit will not be the only way to administer an individual ALP system. Our containerized version of YaST will also be an option for those looking for a more traditional (open)SUSE approach. So we took some actions to improve the behavior of YaST on ALP.

First of all, we reduced the size of the container images as shown in the following table.

| Container | Original size | New size | Saved space |
|---|---|---|---|
| ncurses | 433MB | 393MB | 40MB |
| qt | 883MB | 501MB | 382MB |
| web | 977MB | 650MB | 327MB |

We detected more opportunities to reduce the size of the container images, but in most cases they would imply relatively deep changes in the YaST code or a reorganization of how we distribute the YaST components into several images. So we decided to postpone those changes a bit to focus on other aspects in the short term.

We also adapted the Bootloader and Kdump YaST modules to work containerized and we added them to the available container images.

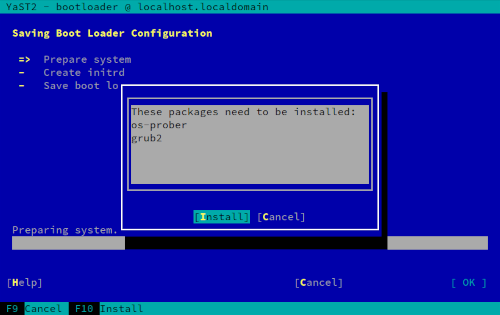

We took the opportunity to rework a bit how YaST handles the on-demand installation of software. As you know, YaST sometimes asks to install a given set of packages in order to continue working or to configure some particular settings.

Due to some race conditions when initializing the software management stack, YaST was sometimes checking and even installing the packages in the container instead of doing so in the host system that was being configured. That is fixed now and it works as expected in any system in which immediate installation of packages is possible, no matter if YaST runs natively or in a container. But that still leaves one open question. What to do in a transactional system in which installing new software implies a reboot of the system? We still don’t have an answer to that but we are open to suggestions.

As you can imagine, we also invested quite some time checking the behavior of YaST containerized on top of ALP. The good news is that, apart from the already mentioned challenge with software installation, we found no big differences between running in a default (transactional) ALP system or in a non-transactional version of it. The not-so-good news is that we found some issues related to rendering of the ncurses interfaces, to sysctl configuration and to some other aspects. Most of them look relatively easy to fix and we plan to work on that in the upcoming weeks.

Designing Network Configuration for D-Installer

Working on Cockpit and our containerized YaST is not preventing us from evolving D-Installer. If you have tested our next-generation installer you may have noticed it does not include an option to configure the network. We invested some time lately researching the topic and designing how network configuration should work in D-Installer from the architectural point of view and also regarding the user experience.

In the short term, we have decided to cover the two simplest use cases: regular cable and WiFi adapters. Bear in mind that Cockpit does not allow setting up WiFi adapters yet. We agreed to directly use the D-Bus interface provided by NetworkManager. At this point, there is no need to add our own network service. At the end of the installation, we will just copy the configuration from the system executing D-Installer to the new system.

The implementation will not be based on the existing Cockpit components for network configuration since they present several weaknesses we would like to avoid. Instead, we will reuse the components of the cockpit-wicked extension, whose architecture is better suited for the task.

D-Installer as a Set of Containers

In our previous report we announced D-Installer 0.4 as the first version with a modular architecture. So, apart from the already existing separation between the back-end and the two existing user interfaces (web UI and command-line), the back-end now consists of several interconnected components.

And we also presented Iguana, a minimal boot image capable of running containers.

You have surely guessed what the next logical step was going to be. Exactly, D-Installer running as a set of containers! We implemented three proofs of concept to check what was possible and to research implications like memory consumption:

- D-Installer running as a single container that includes the back-end and the web interface.

- Splitting the system in two containers, one for the back-end and the other for the web UI.

- Running every D-Installer component (software handling, users management, etc.) in its own container.

It’s worth mentioning that containers communicate with each other by means of D-Bus, using different techniques in each case. The best news is that all solutions seem to work flawlessly and are able to perform a complete installation.

And what about memory consumption? Well, it’s clearly bigger than a traditional SLE installation with YaST, but that’s expected at this point in which no optimizations have been performed on D-Installer or the containers. On the other hand, we found no significant differences between the three mentioned proofs of concept.

- Single all-in-one container:
  - RAM used at start: 230 MiB
  - RAM used to complete installation: 505 MiB

- Two containers (back-end + web UI):
  - At start: 221 MiB (191 MiB back-end + 30 MiB web UI)
  - To complete installation: 514 MiB (478 MiB back-end + 36 MiB web UI)

- Everything as a separate container:
  - At start: 272 MiB (92 MiB software + 74 MiB manager + 75 MiB users + 31 MiB web)
  - After installation: 439 MiB (245 MiB software + 86 MiB manager + 75 MiB users + 33 MiB web)

Those numbers were measured using podman stats while executing D-Installer in a traditional openSUSE system. We will have more accurate numbers as soon as we are able to run the three proofs of concept on top of Iguana. Which takes us to…

Documentation About Testing D-Installer with Iguana

So far, Iguana can only execute a single container. Which means it already works with the all-in-one D-Installer container but cannot be used yet to test the other approaches. We are currently developing a mechanism to orchestrate several containers inspired by the one offered by GitHub Actions.

Meanwhile, we added some basic documentation to the project, including how to use it to test D-Installer.

Stay Tuned

Despite its reduced presence in this blog post, we also keep working on YaST beyond containerization. Not only fixing bugs but also implementing new features and tools like our new experimental helper to inspect YaST logs. So stay tuned to get more updates about Cockpit, ALP, D-Installer, Iguana and, of course, YaST!
