Budgie 10.4 on openSUSE Leap 15.1

Outside the Cubicle | Gladiator Geartrack Gardening Pack

Debian 10 | Review from an openSUSE User

What are ACPI SLIT tables and why are they filled with lies?

Let’s say you’ve got a computer with a single processor and you want more computational power. A good option is to add more processors since they can mostly use the same technologies already present in your computer. Boom. Now you’ve got a symmetric multiprocessing (SMP) machine.

But pretty quickly you’re going to run into one of the drawbacks of SMP machines with a single main memory: bus contention. When data requests cannot be serviced from a processor’s cache, you get multiple processors trying to access memory via a single bus. The more processors you have, the worse the contention gets.

To fix this, you might decide to attach separate memory to each processor and call it local memory. Memory attached to remote processors becomes remote memory. Now each processor can access its own local memory using a separate memory bus. This is what many manufacturers started doing in the early 1990s. They called it Non-uniform Memory Access (NUMA), and named each group of processors, local memory, and I/O buses a NUMA node.

NUMA systems improve the performance of most multiprocessor workloads, but it’s rare for any workload to be perfectly confined to a single NUMA node. All kinds of things can happen that result in remote memory accesses, such as a task being migrated to a new NUMA node or running out of free local memory.

This might sound like the pre-1990 memory bus contention problem all over again, but the situation is actually worse now. Accessing remote memory takes much longer than accessing local memory, and not all remote memory has the same access latency. Depending on how the memory architecture is configured, NUMA nodes can be multiple hops away with each hop adding more latency.

So when a task exhausts all of its local memory and the Linux kernel needs to grab a free block from a remote node, it has a decision to make: which remote node has enough free memory and the lowest access latency?

To figure that out, the kernel needs to know the access latency between any two NUMA nodes. And that’s something the firmware describes with the ACPI System Locality Distance Information Table (SLIT).

What are SLIT tables?

System firmware provides ACPI SLIT tables (described in section 5.2.17 of the ACPI 6.1 specification) that describe the relative differences in access latency between NUMA nodes. It’s basically a matrix that Linux reads on boot to build a map of NUMA memory latencies, and you can view it in multiple ways: with numactl, through the node/nodeX/distance sysfs file, or by dumping the ACPI tables directly with acpidump.
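The sysfs route is also easy to script. Here is a minimal Python sketch (the function name `read_node_distances` is my own, hypothetical choice) that reads each `node*/distance` file and keys the rows by node number. Sorting numerically matters on bigger machines: the shell glob in `cat node*/distance` orders names lexically, so `node10` would be printed before `node2`.

```python
import re
from pathlib import Path


def read_node_distances(root="/sys/devices/system/node"):
    """Return {node_id: [distance to node 0, node 1, ...]} from sysfs."""
    distances = {}
    for path in Path(root).glob("node*/distance"):
        match = re.fullmatch(r"node(\d+)", path.parent.name)
        if match is None:
            continue  # skip anything that is not a nodeN directory
        distances[int(match.group(1))] = [int(v) for v in path.read_text().split()]
    # Order by numeric node id, not by the lexical order of the directory names.
    return dict(sorted(distances.items()))


if __name__ == "__main__":
    for node, row in read_node_distances().items():
        print(node, row)
```

On the two-node machine below this should give `{0: [10, 21], 1: [21, 10]}`, matching the `cat` output.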

Here is the data from my NUMA test machine. It only has two NUMA nodes so it doesn’t give a good sense of how the node distances can vary, but at least we’ve got much less data to read.

$ numactl -H
available: 2 nodes (0-1)
node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 24 25 26 27 28 29 30 31 32 33 34 35
node 0 size: 31796 MB
node 0 free: 30999 MB
node 1 cpus: 12 13 14 15 16 17 18 19 20 21 22 23 36 37 38 39 40 41 42 43 44 45 46 47
node 1 size: 32248 MB
node 1 free: 31946 MB
node distances:
node 0 1
0: 10 21
1: 21 10

$ cat /sys/devices/system/node/node*/distance
10 21
21 10

$ acpidump > acpidata.dat
$ acpixtract -sSLIT acpidata.dat

Intel ACPI Component Architecture
ACPI Binary Table Extraction Utility version 20180105
Copyright (c) 2000 - 2018 Intel Corporation

SLIT - 48 bytes written (0x00000030) - slit.dat

$ iasl -d slit.dat

Intel ACPI Component Architecture
ASL+ Optimizing Compiler/Disassembler version 20180105
Copyright (c) 2000 - 2018 Intel Corporation

Input file slit.dat, Length 0x30 (48) bytes
ACPI: SLIT 0x0000000000000000 000030 (v01 SUPERM SMCI--MB 00000001 INTL 20091013)
Acpi Data Table [SLIT] decoded
Formatted output: slit.dsl - 1278 bytes

$ cat slit.dsl
/*
* Intel ACPI Component Architecture
* AML/ASL+ Disassembler version 20180105 (64-bit version)
* Copyright (c) 2000 - 2018 Intel Corporation
*
* Disassembly of slit.dat, Thu Jul 11 11:53:37 2019
*
* ACPI Data Table [SLIT]
*
* Format: [HexOffset DecimalOffset ByteLength] FieldName : FieldValue
*/

[000h 0000 4] Signature : "SLIT" [System Locality Information Table]
[004h 0004 4] Table Length : 00000030
[008h 0008 1] Revision : 01
[009h 0009 1] Checksum : DE
[00Ah 0010 6] Oem ID : "SUPERM"
[010h 0016 8] Oem Table ID : "SMCI--MB"
[018h 0024 4] Oem Revision : 00000001
[01Ch 0028 4] Asl Compiler ID : "INTL"
[020h 0032 4] Asl Compiler Revision : 20091013
[024h 0036 8] Localities : 0000000000000002
[02Ch 0044 2] Locality 0 : 0A 15
[02Eh 0046 2] Locality 1 : 15 0A

Raw Table Data: Length 48 (0x30)

0000: 53 4C 49 54 30 00 00 00 01 DE 53 55 50 45 52 4D // SLIT0.....SUPERM
0010: 53 4D 43 49 2D 2D 4D 42 01 00 00 00 49 4E 54 4C // SMCI--MB....INTL

0020: 13 10 09 20 02 00 00 00 00 00 00 00 0A 15 15 0A // ... ............

The distance (memory latency) between a node and itself is normalised to 10, and every other distance is scaled relative to that base value of 10. So in the above example, the table shows a value of 21 for the distance between NUMA node 0 and NUMA node 1, which means a relative distance of 2.1. In other words, if NUMA node 0 accesses memory on NUMA node 1 or vice versa, the access latency will be 2.1 times that of local memory.
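To make the layout of that hex dump concrete, here is a small Python sketch that decodes it by hand: a standard 36-byte ACPI header, an 8-byte little-endian locality count at offset 0x24, then an N×N matrix of one-byte distances starting at offset 0x2C. The offsets come from the iasl output above; the function names are my own, and the sketch only covers the fields shown in this table.

```python
import struct

# Bytes of the SLIT table copied from the hex dump above (48 bytes, 2 localities).
RAW_SLIT = bytes.fromhex(
    "53 4C 49 54 30 00 00 00 01 DE 53 55 50 45 52 4D"
    " 53 4D 43 49 2D 2D 4D 42 01 00 00 00 49 4E 54 4C"
    " 13 10 09 20 02 00 00 00 00 00 00 00 0A 15 15 0A"
)

ACPI_HEADER_LEN = 36  # offsets 0x00-0x23 in the disassembly above


def parse_slit(raw):
    """Return the SLIT distance matrix as a list of rows of ints."""
    signature, length = struct.unpack_from("<4sI", raw, 0)
    if signature != b"SLIT":
        raise ValueError("not a SLIT table")
    if length != len(raw):
        raise ValueError("table length field does not match data")
    if sum(raw) % 256 != 0:
        # ACPI tables are checksummed so that all bytes sum to zero (mod 256).
        raise ValueError("bad checksum")
    # Number of localities: 8-byte little-endian integer right after the header.
    (n,) = struct.unpack_from("<Q", raw, ACPI_HEADER_LEN)
    entries = raw[ACPI_HEADER_LEN + 8:]
    if len(entries) < n * n:
        raise ValueError("truncated distance matrix")
    # Entry [i][j] is the byte at position i*n + j of the matrix.
    return [list(entries[i * n:(i + 1) * n]) for i in range(n)]


def relative_latency(matrix, local=10):
    """Convert SLIT distances into multiples of local-memory latency."""
    return [[d / local for d in row] for row in matrix]


if __name__ == "__main__":
    slit = parse_slit(RAW_SLIT)
    print(slit)                    # [[10, 21], [21, 10]]
    print(relative_latency(slit))  # [[1.0, 2.1], [2.1, 1.0]]
```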

At least, that’s what the ACPI tables claim.

How accurate are the SLIT table values?

It’s a perennial problem with ACPI tables that the values stored are not always accurate, or even necessarily true. Suspecting that might be the case with SLIT tables, I decided to run Intel’s Memory Latency Checker (MLC) tool to measure the actual main memory read latency between NUMA nodes on my test machine.

Measuring memory latency is notoriously difficult on modern machines because of hardware prefetchers. Fortunately, MLC disables the prefetchers using the Linux msr module, but that also means you need to run it as root.

$ ./Linux/mlc --latency_matrix
Intel(R) Memory Latency Checker - v3.7
Command line parameters: --latency_matrix

Using buffer size of 200.000MiB
Measuring idle latencies (in ns)...
Numa node
Numa node 0 1
0 78.5 119.4

1 120.8 76.6

A bit of quick maths shows that these latency measurements do not match the SLIT values: 119.4/78.5 ≈ 1.52 and 120.8/76.6 ≈ 1.58. In fact, the relative distance between nodes 0 and 1 is closer to 1.5 than to the 2.1 the firmware claims.
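The same quick maths can be written as a conversion from measured latencies into SLIT-style distances: divide each row by its local (diagonal) latency and scale by 10. This is a sketch of my own, not something MLC or the kernel does; the numbers are copied from the mlc run above, and rows/columns are the node numbers as mlc prints them.

```python
# Idle latencies in ns from `mlc --latency_matrix` above (row = node 0, node 1).
MEASURED_NS = [
    [78.5, 119.4],
    [120.8, 76.6],
]

SLIT_LOCAL = 10  # SLIT normalises a node's access to its own memory to 10


def latency_ratios(latency):
    """Each entry divided by the local latency of its row."""
    return [[value / row[i] for value in row] for i, row in enumerate(latency)]


def latency_to_slit(latency):
    """Measured latencies rescaled into SLIT-style integer distances."""
    return [[round(SLIT_LOCAL * r) for r in row] for row in latency_ratios(latency)]


if __name__ == "__main__":
    print(latency_to_slit(MEASURED_NS))  # [[10, 15], [16, 10]] versus the firmware's [[10, 21], [21, 10]]
```

SLIT entries do not have to be symmetric, which is why the measured version can legitimately differ between the two directions.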

OK, but who cares?

Remember when I said that NUMA node distances were used by the kernel? One of the places they’re used is figuring out whether to load balance tasks between nodes. Moving tasks across NUMA nodes is costly, so the kernel tries to avoid it whenever it can.

How costly is too costly? The current magic value used inside the Linux kernel is 30: if the NUMA node distance between two nodes is greater than 30, the Linux kernel scheduler will try not to migrate tasks between them.

Of course, if the ACPI tables are wrong and claim a distance of more than 30 when the real relative latency is lower, you’re unnecessarily hurting performance, because you’ll likely see one extremely busy NUMA node while other nodes sit idle. And the scheduler will refuse to migrate tasks to the idle nodes until the active load balancer kicks in.
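The rule itself is just a strict comparison against that magic value. The sketch below models only the comparison described above, with a hypothetical constant name; in the kernel the decision is made while building the scheduler's NUMA domains, and the details vary between kernel versions.

```python
# Hypothetical name for the kernel's "magic value" of 30 described above.
MIGRATION_DISTANCE_THRESHOLD = 30


def scheduler_avoids_migration(distance, threshold=MIGRATION_DISTANCE_THRESHOLD):
    """Simplified model: tasks are kept apart only if distance is strictly greater."""
    return distance > threshold


if __name__ == "__main__":
    for label, distance in [
        ("SLIT value on the test machine", 21),
        ("distance derived from the mlc measurements", 15),
        ("SLIT value on some AMD EPYC nodes", 32),
    ]:
        verdict = "avoid migrating" if scheduler_avoids_migration(distance) else "balance freely"
        print(f"{label}: {distance} -> {verdict}")
```

Under this model, a firmware value of 32 keeps tasks apart even if the real latency ratio would map to a distance well under 30.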

This patch fixes this exact bug for AMD EPYC machines, which report a distance of 32 between some nodes (I measured the memory latency with mlc and it’s definitely less than 3.2x). With the patch applied, you get a nice 20-30% improvement in CPU-intensive benchmarks because the scheduler balances tasks across the entire system.

Ugh. Firmware.

Outside the Cubicle | Sledgehammer Repair, Handle Replacement

Gtk-rs tutorial

Leonora Tindall has written a very nice tutorial on Speedy Desktop Apps With GTK and Rust. It covers prototyping a dice roller app with Glade, writing the code with Rust and the gtk-rs bindings, and integrating the app into the desktop with a .desktop file.

SimpleScreenRecorder on openSUSE

Battle of the Bilerps: Image Scaling on the CPU

I've been on a quest for better bilerps lately. "Bilerp" is, of course, a contraction of "bilinear interpolation", and it's how you scale pictures when you're in a hurry. The GNOME Image Viewer (née Eye of GNOME) and ImageMagick have both offered somewhat disappointing experiences in that regard; the former often pauses noticeably between the initial nearest-neighbor and eventual non-awful scaled images, but way more importantly, the latter is too slow to scale animation frames in Chafa.

So, how fast can CPU image scaling be? I went looking, and managed to produce some benchmarks — and! — code. Keep reading.

What's measured

The headline reference falls a little short of the gory details: The practical requirement is to just do whatever it takes to produce middle-of-the-road quality output. Bilinear interpolation starts to resemble nearest-neighbor when you reduce an image by more than 50%, so below that threshold I let the implementations use the fastest supported algorithm that still looks halfway decent.
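To see why plain bilerp degrades below 0.5x, here is a generic textbook bilinear scaler for a greyscale image stored as a list of rows. It is not the code of any implementation benchmarked here, and the pixel-centre mapping is one common convention I picked for the sketch. Each output pixel reads only a 2×2 neighbourhood, so at exactly 0.5x every input pixel still contributes, but at smaller factors whole input pixels are skipped.

```python
def bilerp(img, x, y):
    """Sample a greyscale image (list of rows) at fractional coordinates (x, y)."""
    h, w = len(img), len(img[0])
    x0, y0 = int(x), int(y)  # top-left neighbour (x, y are never negative here)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0  # fractional weights
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def scale_bilinear(img, out_w, out_h):
    """Resize by bilinear sampling: each output pixel reads at most 2x2 input pixels."""
    h, w = len(img), len(img[0])

    def src(o, out_size, in_size):
        # Map the centre of output pixel o back into input coordinates, clamped.
        return min(max((o + 0.5) * in_size / out_size - 0.5, 0.0), in_size - 1)

    return [
        [bilerp(img, src(ox, out_w, w), src(oy, out_h, h)) for ox in range(out_w)]
        for oy in range(out_h)
    ]


if __name__ == "__main__":
    print(scale_bilinear([[0, 100, 0, 100]], 2, 1))          # 0.5x: [[50.0, 50.0]]
    print(scale_bilinear([[255, 0, 0, 0, 255, 0, 0, 0]], 2, 1))  # 0.25x: [[0.0, 0.0]]
```

In the 0.25x case the two bright pixels fall between sample points and vanish entirely, which is the nearest-neighbor-like behaviour the implementations have to work around.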

There are other caveats too. I'll go over those in the discussion of each implementation.

I checked for correctness issues/artifacts by scaling solid-color images across a large range of sizes. Ideally, the color should be preserved across the entire output image, but fast implementations sometimes take shortcuts that cause them to lose low-order bits due to bad rounding or insufficient precision.
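Here is a toy illustration of the kind of shortcut a solid-color test catches. Both functions are hypothetical 8-bit fixed-point blends whose weights sum to 255; the first divides by 256 with a shift (cheap, but it truncates), the second divides by 255 with rounding. The checker mixes a color with itself at every weight, which is what happens everywhere inside a solid-color image.

```python
def blend_truncating(a, b, w):
    """Mix two 8-bit values, weight w (0..255) for b; divides by 256 via shift."""
    return (a * (255 - w) + b * w) >> 8


def blend_rounded(a, b, w):
    """Same mix, but divides by 255 with rounding."""
    return (a * (255 - w) + b * w + 127) // 255


def preserves_solid_color(blend):
    """True if mixing any 8-bit value with itself gives back the same value."""
    return all(blend(c, c, w) == c for c in range(256) for w in range(256))


if __name__ == "__main__":
    print(blend_truncating(200, 200, 100))  # 199: one low-order bit lost
    print(preserves_solid_color(blend_truncating), preserves_solid_color(blend_rounded))
```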

I ran the benchmarks on my workstation, which is an i7-4770K (Haswell) @ 3.5GHz.

Performance summary

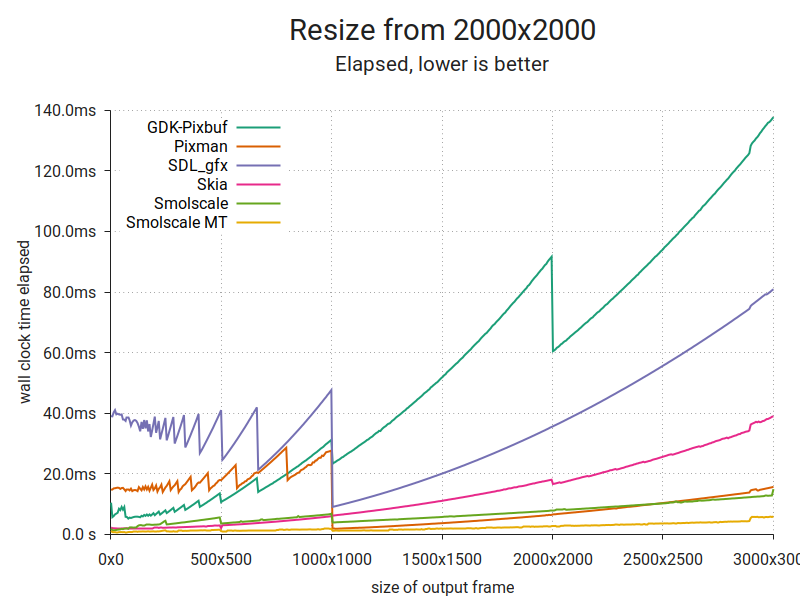

This plot consists of 500 samples per implementation, each of which is the fastest out of 50 runs. The input image is 2000×2000 RGBA pixels at 8 bits per channel. I chose additional parameters (channel ordering, premultiplication) to get the best performance out of each implementation. The image is scaled to a range of sizes (x axis) and the lowest time taken to produce a single output image at each size is plotted (y axis). A line is drawn through the resulting points.

Here's one more:

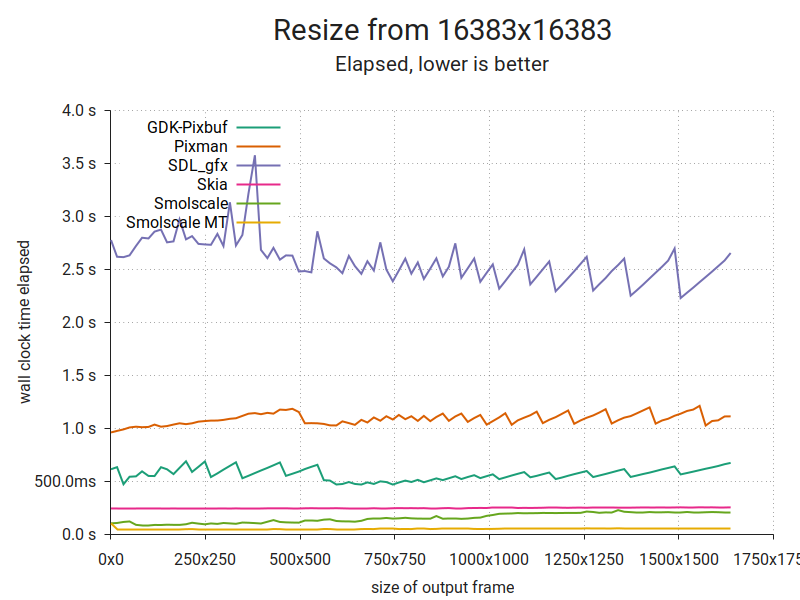

It's the same thing, but with a huge input and smaller outputs. As you can see, there are substantial differences. It's hard to tell from the plot, but Smolscale MT averages about 50ms per frame. More on that below. But first, a look at another important metric.

Output quality

Input image. It's fair to say that one of these is not like the others.

Discussion

GDK-Pixbuf

GDK-Pixbuf is the traditional GNOME image library. Despite its various warts, it's served the project well for close to two decades. You can read more about it in Federico's braindump from last year.

For the test, I used the gdk-pixbuf 2.38.1 packages in openSUSE Tumbleweed. With the scaling algorithm set to GDK_INTERP_BILINEAR, it provides decent quality at all sizes. I don't think that's strictly in line with how bilinear interpolation is supposed to work, but hey, I'm not complaining.

It is, however, rather slow. In fact, at scaling factors of 0.5 and above, it's the slowest in this test. That's likely because it only supports unassociated alpha, which forces it to do extra work to prevent colors from bleeding disproportionately from pixels of varying transparency. To be fair, the alpha channel when loaded from a typical image file is usually unassociated, and if I'd added the overhead of premultiplying it to the other implementations, it would've bridged some or most of the performance difference.

I suspect it's also the origin of the only correctness issue I could find; color values from completely transparent pixels will be replaced with black in the output. This makes sense because any value multiplied by a weight of zero will be zero. It's mostly an issue if you plan to change the transparency later, as you might do in the realm of very serious image processing. And you won't be using GDK-Pixbuf in such a context, since GEGL exists (and is quite a bit less Spartan than its web pages suggest).
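A small example of the "extra work" unassociated alpha requires, and of where the black comes from. These are generic helpers written for illustration, not GDK-Pixbuf's code. Averaging straight-alpha pixels channel by channel lets an invisible pixel's color bleed into the result; weighting each color by its alpha fixes that, but a pixel with zero alpha then contributes nothing, so its color cannot survive. Premultiplying has the same effect up front.

```python
def premultiply(px):
    """Straight (unassociated) RGBA -> premultiplied RGBA, 8 bits per channel."""
    r, g, b, a = px
    return tuple((c * a + 127) // 255 for c in (r, g, b)) + (a,)


def naive_average(p, q):
    """Channel-wise 50/50 mix that ignores alpha: wrong for straight alpha."""
    return tuple((x + y + 1) // 2 for x, y in zip(p, q))


def alpha_weighted_average(p, q):
    """50/50 mix of two straight-alpha pixels, weighting each color by its alpha."""
    a_sum = p[3] + q[3]
    if a_sum == 0:
        return (0, 0, 0, 0)  # every weight is zero, so the color comes out black
    rgb = tuple((p[i] * p[3] + q[i] * q[3] + a_sum // 2) // a_sum for i in range(3))
    return rgb + ((a_sum + 1) // 2,)


if __name__ == "__main__":
    invisible_red = (255, 0, 0, 0)
    opaque_blue = (0, 0, 255, 255)
    print(naive_average(invisible_red, opaque_blue))           # (128, 0, 128, 128): purple bleed
    print(alpha_weighted_average(invisible_red, opaque_blue))  # (0, 0, 255, 128)
    print(premultiply(invisible_red))                          # (0, 0, 0, 0): red is gone
```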

Pixman

Pixman is the raster image manipulation library used in X.Org and Cairo, and therefore indirectly by the entire GNOME desktop. It supports a broad range of pixel formats, transforms, composition and filters. It's otherwise quite minimal, and works with premultiplied alpha only.

I used the pixman 0.36.0 packages in openSUSE Tumbleweed. Pixman subscribes to a stricter definition of bilerp, so I went with that for scaling factors 0.5 and above, and the box filter for smaller factors. Output quality is impeccable, but it has trouble with scaling factors lower than 1/16384, and when scaling huge images it will sometimes leave a column of uniformly miscolored pixels at one extreme of the image. I'm chalking that up to limited precision.

Anyhow, the corner cases are more than made up for by Pixman's absolutely brutal bilerp (proper) performance. Thanks to its hand-optimized SIMD code, it's the fastest single-threaded implementation in the 0.5x-1.0x range. However, the box filter does not appear to be likewise optimized, resulting in one of the worst performances at smaller scaling factors.
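For contrast with bilerp, a box filter averages every input pixel that falls under an output pixel, which is why it holds up at small scaling factors and also why it has to touch far more data. The sketch below is a simplification that only handles integer reduction factors on a greyscale image; it is not how Pixman implements its box filter.

```python
def box_downscale(img, factor):
    """Average each factor x factor block of a greyscale image, with rounding."""
    h, w = len(img), len(img[0])
    if h % factor or w % factor:
        raise ValueError("image size must be a multiple of the factor")
    area = factor * factor
    out = []
    for by in range(h // factor):
        row = []
        for bx in range(w // factor):
            total = sum(
                img[y][x]
                for y in range(by * factor, (by + 1) * factor)
                for x in range(bx * factor, (bx + 1) * factor)
            )
            row.append((total + area // 2) // area)  # rounded integer mean
        out.append(row)
    return out


if __name__ == "__main__":
    spot = [[255, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
    print(box_downscale(spot, 2))  # [[64, 0], [0, 0]]: the bright pixel still counts
```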

SDL_gfx

The Simple DirectMedia Layer is a cross-platform hardware abstraction layer originating in the late 90s as a vehicle for games development. While Loki famously used it to port a bunch of games to Linux, it's also been a boon to more recent independent games development (cf. titles like Teleglitch, Proteus, Dwarf Fortress). SDL_gfx is one of its helper libraries. It has a dead simple API and is packaged for pretty much everything. And it's games stuff, so y'know, maybe it's fast?

I tested libSDL_gfx 2.0.26 and libSDL2_gfx 1.0.4 from Tumbleweed. They perform the same: Not great. Below a scaling factor of 0.5x I had to use a combination of zoomSurface() and shrinkSurface() to get good quality. That implies two separate passes over the image data, which explains the poor performance at low output sizes. However, zoomSurface() alone is also disappointingly slow.

I omitted SDL from the sample output above to save some space, but quality-wise it appears to be on par with GDK-Pixbuf and Pixman. There were no corner cases that I could find, but zoomSurface() seems to be unable to work with surfaces bigger than 16383 pixels in either dimension; it returns a NULL surface if you go above that.

It's also worth noting that SDL's documentation and pixel format enums do not specify whether the alpha channel is supposed to be premultiplied or unassociated. The alpha blending formulas seem to imply unassociated, but zoomSurface() and shrinkSurface() displayed color bleeding with unassociated-alpha input in my tests.

Skia

Skia is an influential image library written in C++; Mozilla Firefox, Android and Google Chrome all use it. It's built around a canvas, and supports drawing shapes and text with structured or raster output. It also supports operations directly on raster data — making it a close analogue to Cairo and Pixman combined.

It's not available as a separate package for my otherwise excellent distro, so I built it from Git tag chrome/m76 (~May 2019). It's very straightforward, but you need to build it with Clang (as per the instructions) to get the best possible performance. So that's what I did.

I tested SkPixmap.scalePixels(), which takes a quality setting in lieu of the filter type. That's perfect for our purposes; between kLow_SkFilterQuality, kMedium_SkFilterQuality and kHigh_SkFilterQuality, medium is the one we want. The documentation describes it as roughly "bilerp plus mip-maps". The other settings are either too coarse (nearest-neighbor or bilerp only) or too slow (bicubic). The API supports both premultiplied and unassociated alpha. I used the former.

So, about the apparent quality… In all honesty — it's poor, especially when the output is slightly smaller than 1/2ⁿ relative to the input, i.e. ½*insize-1, ¼*insize-1, etc. I'm not the first to make this observation. Apart from that, there seems to be a precision issue when working with images (input or output) bigger than 16383 pixels in either dimension. E.g. the color #54555657 becomes #54545454, #60616263 becomes #60606060 and so on.

At least it's not slow. Performance is fairly respectable across the board, and it's one of the fastest solutions below 0.5x.

Smolscale

Smolscale is a smol piece of C code that does image scaling, channel reordering and (un)premultiplication. It's this post's mystery contestant, and you've never heard of it before because up until now it's been living exclusively on my local hard drive.

I wrote it specifically to meet the requirements I laid out before: Fast, middling quality, no artifacts, handles input/output sizes up to 65535×65535. Smolscale MT is the same implementation, just driven from multiple threads using its row-batch interface.
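Smolscale's row-batch interface isn't documented here, so the following is only a sketch of the general idea under my own assumptions: split the output rows into contiguous batches, let each thread produce one batch, and join the results in order. `scale_rows` is a hypothetical callback standing in for "scale output rows first..last-1". Python threads won't give real parallel speedups for pure-Python work, so this shows the partitioning, not the performance.

```python
from concurrent.futures import ThreadPoolExecutor


def row_batches(height, n_batches):
    """Split rows [0, height) into up to n_batches contiguous, near-equal ranges."""
    base, extra = divmod(height, n_batches)
    batches, start = [], 0
    for i in range(n_batches):
        end = start + base + (1 if i < extra else 0)
        if end > start:  # skip empty batches when height < n_batches
            batches.append((start, end))
        start = end
    return batches


def scale_in_threads(scale_rows, height, n_threads):
    """Run scale_rows(first, last) for each batch in a thread pool; keep row order."""
    with ThreadPoolExecutor(max_workers=n_threads) as pool:
        results = pool.map(lambda batch: scale_rows(*batch), row_batches(height, n_threads))
        return [row for rows in results for row in rows]


if __name__ == "__main__":
    print(row_batches(10, 4))  # [(0, 3), (3, 6), (6, 8), (8, 10)]
```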

As I mentioned above, when running in 8 threads it's able to process a 16383×16383-pixel image to a much smaller image in roughly 50ms. Since it samples every single pixel, that corresponds to about 5.3 gigapixels per second, or ~21 gigabytes per second of input data. At that point it's close to maxing out my old DDR3-1600 memory (its theoretical transfer rate is 12.8GB/s, times two for dual channel ~= 26GB/s).
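The arithmetic behind those figures, assuming 4 bytes per RGBA pixel and a 64-bit (8-byte) wide DDR3 channel:

```python
width = height = 16383     # input size from the text
seconds_per_frame = 0.050  # ~50 ms per frame with 8 threads
bytes_per_pixel = 4        # RGBA, 8 bits per channel

pixels_per_second = width * height / seconds_per_frame
input_bytes_per_second = pixels_per_second * bytes_per_pixel

# DDR3-1600: 1600 million transfers per second on an 8-byte channel.
ddr3_1600_per_channel = 1600e6 * 8
dual_channel = 2 * ddr3_1600_per_channel

print(f"{pixels_per_second / 1e9:.2f} gigapixels/s")
print(f"{input_bytes_per_second / 1e9:.1f} GB/s of input")
print(f"{dual_channel / 1e9:.1f} GB/s theoretical peak (dual channel)")
print(f"{input_bytes_per_second / dual_channel:.0%} of peak")
```

This works out to about 5.37 gigapixels/s and 21.5 GB/s, against a dual-channel peak of 25.6 GB/s, or roughly 84% of the theoretical bandwidth.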

I'm going to write about it in detail at some point, but I'll save that for another post. In the meantime, I put up a GitHub dump.

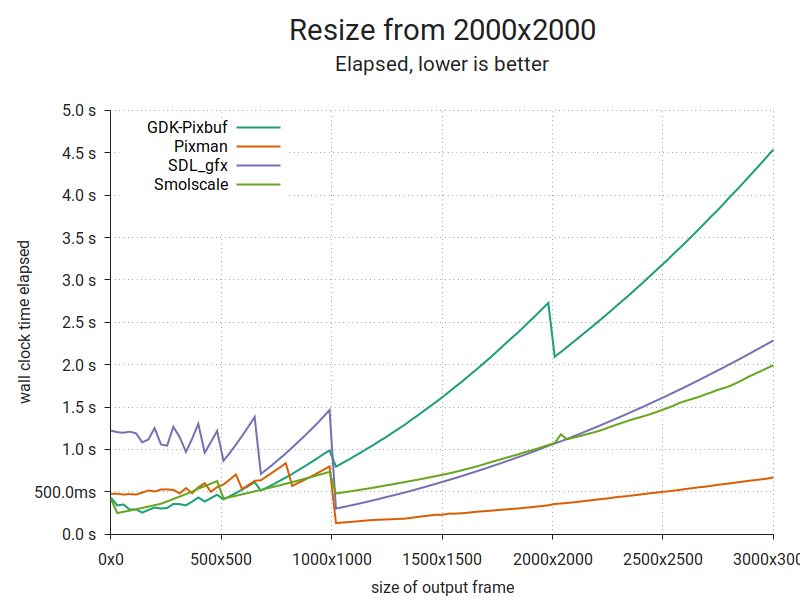

Bonus content!

I found a 2012-issue Raspberry Pi gathering dust in a drawer. Why not run the benchmarks on that too?

I dropped Skia from this run due to build issues that looked like they'd be fairly time consuming to overcome. Smolscale suffers because it's written for 64-bit registers and multiple cores, and this is an ARMv6 platform with 32-bit registers and a single core. Pixman is still brutally efficient; it has macro templates for either register size. Sweet!

OpenMandriva | Review from an openSUSE User

Removing rsvg-view

I am preparing the 2.46.0 librsvg release. This will no longer have the rsvg-view-3 program.

History of rsvg-view

Rsvg-view started out as a 71-line C program to aid development of librsvg. It would just render an SVG file to a pixbuf, stick that pixbuf in a GtkImage widget, and show a window with that.

Over time, it slowly acquired most of the command-line options that rsvg-convert supports. And I suppose, as a way of testing the Cairo-ification of librsvg, it also got the ability to print SVG files to a GtkPrintContext. At last count, it was a 784-line C program that is not really the best code in the world.

What makes rsvg-view awkward?

Rsvg-view requires GTK. But GTK requires librsvg, indirectly, through gdk-pixbuf! There is not a hard circular dependency because GTK goes, "gdk-pixbuf, load me this SVG file" without knowing how it will be loaded. In turn, gdk-pixbuf initializes the SVG loader provided by librsvg, and that loader reads/renders the SVG file.

Ideally librsvg would only depend on gdk-pixbuf, so it would be able to provide the SVG loader.

The rsvg-view source code still has a few calls to GTK functions which are now deprecated. The program emits GTK warnings during normal use.

Rsvg-view is... not a very good SVG viewer. It doesn't even start up with the window scaled properly to the SVG's dimensions! If used for quick testing during development, it cannot even aid in viewing the transparent background regions which the SVG does not cover. It just sticks a lousy custom widget inside a GtkScrolledWindow, and does not have the conventional niceties to view images like zooming with the scroll wheel.

EOG is a much better SVG viewer than rsvg-view, and people actually invest effort in making it pleasant to use.

Removal of rsvg-view

So, the next version of librsvg will not provide the rsvg-view-3 binary. Please update your packages accordingly. Distros may be able to move the compilation of librsvg to a more sensible place in the platform stack, now that it doesn't depend on GTK being available.

What can you use instead? Any other image viewer. EOG works fine; there are dozens of other good viewers, too.
