openSUSE Board Election 2020 results announced

The announcement was made by the election official, Ariez Vachha, on the openSUSE Project's mailing list.

The election started on 15 December 2020 and it ended on 30 December 2020. The results were computed today at around 08h00 UTC. All members received the results by email.

Ariez announced the results and provided some statistics.

229 out of 518 eligible voters cast their vote in this election. In percentage terms, the turnout is lower than in last year's board election.

The complete results are as follows:

- Axel Braun — 142 votes

- Gertjan Lettink — 134 votes

- Neal Gompa — 131 votes

- Maurizio Galli — 103 votes

- Nathan Wolf — 59 votes

The "none of the above" option, which was offered for the first time in a regular board election, obtained five votes.

Therefore, Axel, Gertjan and Neal are elected to serve for a 2-year term on the openSUSE Board.

Printing with a Brother MFC-7460DN laser printer on Fedora Linux 33

This is a follow-up to my previous post Configuring a Brother MFC-7460DN Laser Printer/Scanner on Fedora 23 (64-bit), as things have fortunately changed for the better in the meantime.

As described in that post, setting up this printer in CUPS on Fedora had become quite an ordeal, as Brother no longer updates the printer drivers for these old models and doesn’t provide 64-bit binaries.

As I had to switch laptops a few weeks ago, I had to reinstall Linux from scratch and needed to reconfigure my printer settings as well. As I did not want to jump through the same hoops again, I did some research and was happy to learn that Peter De Wachter has been working on an open source version of CUPS printer drivers for a wide range of Brother laser printers, which includes the MFC-7460DN as well!

Sadly, the printer-driver-brlaser package is not part of the Fedora Linux distribution (yet), so I again had to go out and scour the usual places for a suitable RPM package. Luckily, an RPM package is available from the openSUSE Build Service! There’s no dedicated Fedora package, but downloading and installing the driver package for the openSUSE Tumbleweed distribution worked flawlessly.

To set up the printer, first download and install the RPM package directly from the build service repo:

$ sudo dnf install https://download.opensuse.org/repositories/Printing/openSUSE_Tumbleweed/x86_64/printer-driver-brlaser-6+git20200420.9d7ddda-21.4.x86_64.rpm

The actual URL may vary if the package version is updated, so check the download page for the latest version if the download fails.

Now you can set up the printer using the usual CUPS printer configuration tools. In the printer driver selection box, choose “Brother MFC-7460DN, using brlaser v6” and you’re all set!

BTW, this works on Ubuntu Linux as well; they actually include the driver in their core distribution. The MFC-7460DN model is not listed explicitly in the driver selection, but choosing any other brlaser device just works.

openSUSE community elects Axel, Gertjan and Neal to serve on the Board

The election lasted for two weeks and it ended last night at 23h59 UTC. The results were published today at mid-day (for me).

The complete election results are:

- Axel Braun — 142 votes

- Gertjan Lettink — 134 votes

- Neal Gompa — 131 votes

- Maurizio Galli — 103 votes

- Nathan Wolf — 59 votes

Five votes were recorded for the "none of the above" option. Out of 518 eligible voters, 229 cast their vote in this election, which represents a turnout of 44%. That is low compared to last year's board election, where the turnout was 56%.
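For anyone who wants to double-check the turnout figure, here is a quick sketch of the arithmetic in Python. The variable names are only illustrative; the two numbers are the ones quoted above.

```python
# Figures quoted in the post: ballots cast and eligible voters.
votes_cast = 229
eligible_voters = 518

# Turnout is the share of eligible voters who actually voted.
turnout = votes_cast / eligible_voters * 100

print(f"Turnout: {turnout:.1f}%")
```

Rounded to a whole number, this matches the 44% mentioned above.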

Axel, Gertjan and Neal are elected to serve for two years on the openSUSE Board.

ownCloud's Virtual Files on the Linux Desktop

It could already be read somewhere that 2021 will be the year of Linux on the desktop :-D

Fine with me. Just to support that, I did a little hackery over XMas to improve the support for ownCloud's virtual file system on the Linux Desktop.

What are Virtual Files?

In professional use cases, users often have a huge amount of data stored in ownCloud. Syncing all of it to the desktop computer or laptop would be too much and costly in bandwidth and hard disk space. That is why most mature file sync solutions came up with the concept of virtual files. That means that users have the full structure of directories and files mirrored to their local machines, but see placeholders for the real files in the local file manager.

The files, however, are not on the disk. They get downloaded on demand.

That way, users see the full set of data virtually, but save the time and space for files they will never need on the local system.

The ownCloud Experience

ownCloud innovated on that topic a while ago, and meanwhile supports virtual files mainly on Windows, because there is an elaborate system API to work with placeholder files. As we do not have this kind of API on Linux desktops yet, ownCloud desktop developers implemented the following solution for Linux: The virtual files are 1 byte sized files with the name of the original file, plus a suffix “.owncloud” to indicate that they are virtual.

That works, yet has one downside: Most file managers do not display these placeholder files nicely, because they lose the MIME type information. Also, downloading files and freeing up the space of downloaded files is not integrated. To summarize, there is a building block missing to make that useful on Linux.
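To illustrate the placeholder convention, here is a minimal sketch in Python of how a file manager could recover the real name and MIME type of such a file. It is not code from the sync client or from any file manager: the function name and the example file name are made up, and only the “.owncloud” suffix comes from the description above.

```python
import mimetypes
from pathlib import Path

SUFFIX = ".owncloud"  # placeholder suffix as described in the post


def describe(path):
    """Return display information for one directory entry (hypothetical helper)."""
    is_virtual = path.name.endswith(SUFFIX)
    # Strip the suffix so the MIME type is guessed from the original file name.
    display_name = path.name[: -len(SUFFIX)] if is_virtual else path.name
    mime_type, _encoding = mimetypes.guess_type(display_name)
    return {"name": display_name, "virtual": is_virtual, "mime": mime_type}


# Made-up example entry: a placeholder for a JPEG image.
print(describe(Path("holiday.jpg.owncloud")))
```

Guessing the MIME type from the stripped name is the simplest possible approach; a real file manager would likely combine it with its own MIME database.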

Elokab-files-manager with ownCloud

I was wondering if it wasn’t possible to change an existing file manager to support the idea of virtual files. To start trying I found the Elokab-files-manager which is a very compact, yet feature rich file manager built on Qt. It is built with very few other dependencies. Perfect to start playing around with.

In my GitHub fork you can see the patches I came up with to make Elokab-fm understand ownCloud virtual files.

New Functionality

The screenshot shows the changes in the icon view of Elokab-fm.

Screenshot of patched Elokab-files-manager to support ownCloud Virtual Files.

To make that possible, Elokab-fm now pulls some information from the ownCloud Sync Client config file and connects to the sync client via a local socket to share some information. That means that the sync client needs to be running to make that work.

Directories that are synced with ownCloud now show a cloud overlay in the center (1).

The placeholder files (2), which are not present on the local hard drive, indicate that by showing a little cloud icon at the bottom right. However, unlike before, they are displayed with their correct name and MIME type, which already makes this much more useful.

Files that are on the local disk, such as the image (3), show their thumbnail as usual.

In the side panel (4) a few details were added: The blue box at the bottom indicates that the file manager is connected to the sync client. For the selected virtual file (2), it shows a button that downloads the file when clicked, which turns it into a non-virtual, local file. There is also an entry in the context menu to achieve that.

Status

This is just a proof of concept and a little XMas fun project. There are bugs, and the implementation is not complete. And maybe Jürgen’s idea of a FUSE layer to improve that is the better approach, but anyway. It just shows what is possible with virtual files also on Linux.

If you like that idea, please let us know or send a little PR if you like. We do not wanna fail providing our share on the year of the Linux Desktop, right? ;-)

Building it from my GitHub branch should be fairly easy, as it only depends on Qt.

For openSUSE users, I will provide some test packages in my home project on Open Build Service.

openSUSE Leap 15.3 Alpha

Two weeks ago, openSUSE Release Manager Luboš Kocman announced the availability of the Alpha images for openSUSE Leap 15.3.

Leap 15.3 aims to become more binary compatible with SUSE Linux Enterprise. As such, Leap now aligns with SLE and its service packs to keep the system updated, stable and patched.

Alpha images of Leap 15.3 will be rolling until mid-February, when it will transition into the Beta phase.

Users of openSUSE Leap 15.1 have until January of 2021 before it reaches its End of Life and users need to update to Leap 15.2. The Public Availability of Leap 15.3 is scheduled for July 2021, according to the releases roadmap. Users of Leap 15.2 will need to update to the newer version within six months of the release of Leap 15.3.

The test releases can be downloaded from software.opensuse.org/distributions/testing.

openSUSE 2020 - End of Year Survey

The openSUSE Project is carrying out an EoY survey to collect ideas and comments from the community. The survey has 40 questions which aim to gain a better understanding of the issues and expectations of openSUSE users.

We encourage you to spend a few minutes to answer the questions and help in making openSUSE a better Linux distribution. 🙂

Repairability

My Macbook Pro

I have a 15-inch Macbook Pro Retina that I bought in 2016. A few days back, the battery started bulging and the laptop has totally stopped working. The battery has grown so big now that I cannot keep the laptop on a flat surface; it almost rocks like a see-saw. The touchpad panel is also feeling the bulge. The Macbook Pro is not even switching on now, presumably to safeguard against battery explosions.

I bought the laptop 4 years back, for about 200,000 INR (~2700 USD / 2200 EUR). Electronics are very costly in India :( This is the pre-touchbar Macbook pro. I did not like the new keyboard in the then new Macbooks (the first edition with the touchbar). Luckily, I did not purchase the touchbar version which probably is one of the worst electronic devices ever manufactured.

I took my Macbook Pro today to a nearby Apple service center and I was told that the battery replacement would cost about 40,000 INR (~550 USD / 450 EUR). For such a costly laptop, it has terrible battery longevity. I have no interest in paying this much for just a battery for an old laptop which is anyway not fun anymore to work on. I could purchase a new laptop with this money. For this money I could even set up a private cloud of a few Raspberry Pis and even launch Kubernetes on them for fun.

I thought that I could purchase a battery offline and replace it myself. But to replace the battery, you need to disassemble almost everything (hard disk, speakers, CPU, fans, etc.) in a Macbook and use chemicals (acetone). Thankfully Apple is not yet making cars; otherwise, to change the engine oil, we might have to disassemble the headlamps, engine, transmission, differential, etc.

What is more evil is that the Macbook Pro will not work without a battery, even if it is plugged into a power supply. Running without a battery is what I do with my old HP laptop (for parents+kids), whose battery is long gone. The MagSafe charger of the Macbook is another well-known disaster. I believe that Apple gets a lot of undeserved praise for their hardware. They have a shiny aluminium body, a good screen and the best touchpad, but their thermal management, longevity and repairability are all abysmally bad. I have replaced the charger three times in ~3 years because the plastic covering near the charging point goes bad in daily usage and the internal wire gets damaged. The wires also become very yellow and dirty in Indian climate, for some reason. Even people far more connected and influential than me could not change Apple's behavior.

Personally, I am never buying any Apple device or Macbook Pro ever again for personal use, simply because I do not like their greed and exploitation of vulnerable customers.

Thinkpads

The only reason I bought the Macbook Pro in 2016 was because I had to do some iOS app development. Prior to that, in my $DAYJOBs, I have almost exclusively used Thinkpads, right from the days they were owned by IBM (and had an extraordinary keyboard) until they were sold off to Lenovo (and have this chiclet nonsense). The old Thinkpads were a delight to have. We could replace the batteries, replace the fans, replace individual keys etc. We could also add RAM or disk whenever we needed, as much as we needed. All without needing anything more than a normal screwdriver set.

Good things are not meant to last. Just as Macbooks have gotten worse, Thinkpads have gotten worse too. In my current $DAYJOB I use a Thinkpad E series (the cheapest version) and it is terrible. The management of Lenovo is either dumb and does not understand what its loyal customers want, or just plain evil (or capitalistic extremists) and has embraced planned obsolescence.

Thinkpads now come in many series (L, T, X, P, E, etc.) and almost none of them have external batteries. Almost all of them have the RAM soldered, so it cannot be replaced. If the soldered RAM goes wrong, we need to throw away the laptop. Thinkpads were supposed to be the most developer friendly laptops. But even in 2020, we cannot get a single 32GB RAM in any of the medium cost Thinkpad ranges. If you want anything more than 32GB RAM, you must shell out a lot of money and go for a ridiculously high cost series with 4k screen or some such luxury that I do not want. And their fingerprint readers never seem to reliably work on Linux for some reason, despite most kernel developers using Thinkpads.

E Waste and Green Earth

When I was in school, I lived in a house with no electricity. I then grew up and lived in Indian towns where 8-12 hour power cuts per day were not unheard of. Luckily I now live in a big Indian city where power cuts are just an occasional weekly-few-hours affair. If it were not for the power cuts, I would happily purchase a desktop instead of a laptop. At least until now, desktops (not those integrated all-in-one pieces) age better than laptops. But power cuts are a part of life where I live and I need battery backup.

Mobile Phones

Laptops and desktops are only a small part of the story. Now, with mobile phones coming on, the amount of e-waste getting generated is exploding (literally in some sense). Android is a bigger culprit than Apple here. Even Google (which does not have "Don't be evil" as a motto anymore) is refusing to push updates for Pixel phones that are just 3 years old.

Once electric cars become more available, it is going to be worse for third world nations which import e-waste. Rich billionaires and millionaires will claim to be more green by switching to electric cars and will send off the batteries to electronic graveyards on the other side of the Earth.

By making it difficult to replace/recycle batteries in laptops and phones, OEMs are making the world generate a lot of e-waste. The first world nations worry about e-waste polluting their water and land, so what do they do ? They simply dump it on third world nations like India, Vietnam etc. As if we, the people of these nations, did not have enough to worry about on our own, we now have to accommodate tonnes of this e-waste, which spoils our water and pollutes our air.

We cannot even protest against these e-waste processing units that import world's junk, because most of the third world nations do not even have healthy democracies where citizens can opine against the Government/rulers, unlike the west.

What to do ?

The European Union at least is trying to do something, while the rest of the world seems not to be bothered. The USA especially has a lot of responsibility, because most of the OEMs like Apple, HP, Dell, etc. are walking the evil path of denying repairability and increasing sales, only to please Wall Street and their $SHARE_SYMBOLs in the American stock market. It is pointless to spend millions on green-earth initiatives if you do not produce recyclable / repairable electronic gadgets.

What can we Engineers do to combat such planned obsolescence and promote right-to-repair and recycling ?

Honestly, I do not have an answer. Maybe we could exert influence in our small circles of hardware purchase. When your $EMPLOYER is looking to update the company hardware and get laptops for everyone, insist that they get only hardware which can be easily repaired.

If you work for an e-commerce giant (like Amazon, Walmart, eBay, etc.) push your employer to provide a "Repairability score" as a filter condition on the product pages (similar to 3*, 4*, 5*, etc.) of electronic devices. Maybe if enough companies/customers start demanding these, the OEMs will have a financial motivation to do the right thing.

Are there any other steps that you believe that we as individuals could do to bring a change ? If so, please comment.

What laptops do you like using that have a good repairability score, even in 2020/2021?

Thanks for coming to my TED talk ;-)

PS: If you know of any good third party battery (which wouldn't explode in hot Indian weather) for the Macbook Pro, please let me know. If you can refer a mechanic/shop who does Macbook battery replacement in Chennai/Bangalore, India, that would be even better.

openSUSE Tumbleweed – Review of the week 2020/52

Dear Tumbleweed users and hackers,

Xmas is upon us – at least in some areas of the world. This means quite a lot of people are away from their computers and the number of submissions is getting a bit lower. Tumbleweed is not stopping though – it just rolls at the pace contributors create submissions. For week 2020/52 this means a total of 3 snapshots were published. The saddest part of it all is that the new kernel 5.10 is not behaving very nicely when the iwlwifi module is being loaded. The three snapshots published were 1218, 1221 and 1223, containing these changes:

- KDE Applications 20.12.0

- Poppler 20.12.1

- Linux kernel 5.10.1: as noted in the intro, there is an issue with iwlwifi. Unfortunately I was informed about that too late. The earlier reported amdgpu issue had been fixed in time though. Upstream bug reference: https://bugzilla.kernel.org/show_bug.cgi?id=210733

- Systemd 246.9

The staging projects are still filled up and a few more snapshots are likely to be released this year. Some of the changes that might become ready include:

- Mozilla Firefox 84.0

- icu 68.1: breaks a few things like postgresql. Staging:I

- Ruby 3.0: final release is staged. It will be added short term as an additional version, with the default, used by e.g. YaST, pinned to version 2.7

- Multiple python 3 versions parallel installable. In addition to python 3.8, version 3.6 will be reintroduced. Python modules will be built for both versions.

- RPM 4.16: all build issues in Staging:A have been fixed, but on upgrades, rpm seems to segfault in some cases.

- brp-check-suse: a bug fix in how it detected dangling symlinks (it detected them, but did not fail as it was supposed to)

- permissions package: prepares for easier listing, while supporting a full /usr merge

- Rpmlint 2.0: experiments ongoing in Staging:M

- openssl 3: not much progress, Staging:O still showing a lot of errors.

Christmastime in the year 2020 | Holiday Blathering

Result of the Modernizing AutoYaST initiative

In April, we announced the Modernizing AutoYaST initiative. The idea was not to rewrite AutoYaST but just introduce a few new features, remove some limitations and improve the code quality.

Although they were not set in stone, we had some ideas about what changes we wanted to introduce. However, as soon as we started to work, it became clear that we needed to adapt our roadmap. So if you compare our initial announcement with the result, you can spot many differences.

This article describes the most relevant changes. If you want to try any of these features, they are already available in openSUSE Tumbleweed.

Reducing profiles size

When AutoYaST generates a profile from an existing system, it includes a lot of information to reproduce the installation. As a consequence, those profiles are rather long, which makes working with them quite annoying.

However, it is not always clear when it is safe to omit some information from the profile without compromising the final result. To address this problem, we decided to introduce the concept of target. Thus, when generating a profile, you can ask AutoYaST to generate a more compact profile.

# yast2 clone_system modules target=compact filename=autoinst-compact.xml

On my current machine, the size of the profile is reduced from 2201 lines to just 834. But which information is omitted? Let’s enumerate a few items:

- System users and groups.

- Not modified firewall zones.

- System services whose preset has not been changed.

- Printer settings if the cups service is disabled.

Note that not all YaST modules implement support for this flag. Actually, in some cases, it does not make any sense.

Making it easier to write dynamic profiles

When dealing with the installation of multiple systems, it might be useful to use a single profile that adapts to the system being installed at runtime. AutoYaST already offered two mechanisms to implement this behavior: rules and classes and pre-install scripts.

However, we thought that it might be easier if you could embed Ruby (ERB) code in your profiles. The idea is to provide a set of helper functions that you can use to inspect the system and adjust the profile by setting values, adding or skipping sections, etc. It sounds cool, right? Let’s see a simple example.

The code below finds the largest disk by sorting them by size and sets the value of the device element.

<partitioning t="list">

<drive>

<% disk = disks.sort_by { |d| d[:size] }.last %> <!-- find the largest disk -->

<device><%= disk[:device] %></device> <!-- print the disk device name -->

<initialize t="boolean">true</initialize>

<use>all</use>

</drive>

</partitioning>

Of course, apart from a set of helpers (disks, network_cards, os_release or hardware), you have the power of Ruby at your fingertips. What about retrieving a whole section from a remote location? To some extent, it could replace the classes and rules feature.

<bootloader>

<% require "open-uri" %>

<%= URI.open("http://192.168.1.1/profiles/bootloader-common.xml").read %>

</bootloader>

Unfortunately, the documentation of this feature is still a work in progress. However, we expect to have it ready in the upcoming weeks.

Improved scripting support

Apart from introducing support for ERB, as described in the previous section, we improved script handling. Until now, Shell, Perl and Python were the only supported scripting languages. We removed this limitation and now you can use any interpreter available at installation time. Moreover, it is possible to pass custom options to the interpreter.

<interpreter>/usr/bin/bash -x</interpreter>

Additionally, we fixed a few issues and extended the error handling to inform the user when the script did not run successfully.

Validating the profile

Building and tweaking your profile can be a time-consuming task. AutoYaST offers XML-based validation, but the sort of errors you can detect is rather limited.

To make your life easier, we introduced these new features to leverage profile validation:

- Automatic profile validation at runtime.

- A new check-profile command to detect errors without running the installer.

When AutoYaST fetches the profile, it automatically performs the XML-based validation, reporting any error found. It works even if you are using features like Rules and classes or Dynamic profiles. However, it can be easily disabled by setting the YAST_SKIP_XML_VALIDATION parameter to 1 when booting the installer.

Regarding the check-profile command, it basically uses part of the code that runs during AutoYaST initialization. It includes:

- Profile fetching (even from a remote location).

- XML-based validation.

- Support for dynamic profiles: rules and classes, ERB and pre-installation scripts (optional).

- Optionally, detection of problems during profile import.

Needless to say, you should run this command with caution. Bear in mind that ERB and pre-installation scripts can run any arbitrary code. In fact, we are working with our security experts to make this command safer. See bsc#1177123 for further details.

Reducing the second stage

Unlike a normal installation, AutoYaST still uses two phases, which are known as stages. The first stage is responsible for most of the installation tasks: partitioning, registration, software installation, network configuration, etc. Depending on the content of the profile, the second stage comes into play after the first reboot. It takes care of additional configuration processes, like setting the firewall rules, enabling/disabling services, etc.

To reduce the need for a second stage, we moved the processing of several sections to the first stage. At this point, these sections are processed during this stage: bootloader, configuration_management, files, firewall, host, kdump, keyboard, language, networking, partitioning, runlevel, scripts (except post-scripts and init-scripts, which are processed during the second stage), security, services-manager, software, ssh_import, suse_register, timezone and users. Thus if your profile does not contain any other section, you can happily disable the second stage.

<general>

<mode>

<second_stage t="boolean">false</second_stage>

</mode>

</general>
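If you are not sure whether a profile contains only first-stage sections, a small script can list the ones that would still need the second stage. The following is a minimal sketch in Python and is not part of AutoYaST: the function name and the sample profile are made up for illustration, the section list is copied from the paragraph above, and skipping the general section (because it holds the second_stage switch itself) is an assumption.

```python
import xml.etree.ElementTree as ET

# Sections processed during the first stage, as listed in the article.
FIRST_STAGE_SECTIONS = {
    "bootloader", "configuration_management", "files", "firewall", "host",
    "kdump", "keyboard", "language", "networking", "partitioning", "runlevel",
    "scripts", "security", "services-manager", "software", "ssh_import",
    "suse_register", "timezone", "users",
}
# Script types that the article says still run during the second stage.
SECOND_STAGE_SCRIPTS = {"post-scripts", "init-scripts"}
# Assumption: "general" is skipped because it carries the second_stage switch.
IGNORED_SECTIONS = {"general"}


def local_name(tag):
    """Drop an XML namespace prefix such as '{http://...}software'."""
    return tag.rsplit("}", 1)[-1]


def sections_needing_second_stage(profile_xml):
    """Return the sorted names of sections that are not first-stage only."""
    root = ET.fromstring(profile_xml)
    needed = []
    for section in root:
        name = local_name(section.tag)
        if name == "scripts":
            # Look one level deeper: only some script types need stage two.
            for kind in section:
                if local_name(kind.tag) in SECOND_STAGE_SCRIPTS:
                    needed.append("scripts/" + local_name(kind.tag))
        elif name not in FIRST_STAGE_SECTIONS and name not in IGNORED_SECTIONS:
            needed.append(name)
    return sorted(needed)


# Made-up sample profile, only for illustration.
SAMPLE = """<profile xmlns="http://www.suse.com/1.0/yast2ns">
  <general><mode><second_stage>false</second_stage></mode></general>
  <software/>
  <printer/>
  <scripts><post-scripts/></scripts>
</profile>"""

print(sections_needing_second_stage(SAMPLE))
```

An empty list would suggest that the second stage can be disabled for that profile; anything else is worth a closer look before setting second_stage to false.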

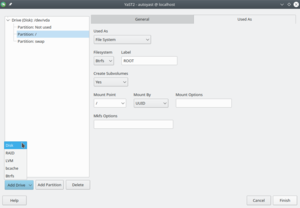

A better UI to define the partitioning section

The user interface offered by AutoYaST to define the partitioning section was confusing, buggy and rather limited. Therefore we took the chance to, basically, rewrite the whole thing.

It is still a work in progress, but it is already much better than the old one. For instance, in addition to disks and LVM, it supports defining sections for RAID, bcache and multi-device Btrfs file systems.

It should be noted that these changes are already available in openSUSE Leap 15.2 and SUSE Linux Enterprise 15 SP2, so you do not need to wait until 15.3 or SP3 to enjoy them.

Conclusion

New features and bug fixes are the most visible changes. However, as part of this process, we refactored a lot of code, improved code coverage, extended the documentation, etc. In general, we feel that we improved AutoYaST quality noticeably. And we hope you get that impression too in the future.
