Art vs Design

Over the weekend I was forced to unload all my photos from my phone due to limited storage space. As I went through them, a nice capture of Builder nightly caught my attention and I couldn’t help but post it on Twitter.

Cyberdyne Builder


Obviously, posting on Twitter meant it was immediately misunderstood and met with quips full of entitled adjectives. Rather than responding on the wrong platform, I finally have an excuse to post on my blog again. So let’s take a look at the horrible situation we ended up with.

Thanks to Flatpak you now have a way to install stable and development versions of an app concurrently. You can easily tell them apart without resorting to name suffixes in the shell, where the actual name gets horribly truncated due to ellipsization, while still clearly seeing at first glance that they are the same app.

Stable and Nightly Boxes


There are plenty of apps already making use of this. So how does an app developer get one? We actually have the tooling for that. If you have an app icon, you can easily generate a nightly variant with zero effort in most cases.

So what was the situation Twitter was praising? Let’s count how many GNOME applications shipped a custom nightly icon. Umm, how about zero?

A pretty picture that an artist spends hours on, modelling, texturing, lighting and adjusting for low resolution screens, is neither a visual framework nor a reasonable thing to ask app developers to do.

Mesa, Nano, Redis, Git Update in openSUSE Tumbleweed

Another four openSUSE Tumbleweed snapshots were released this week.

A notable package updated this week is a new major version of gucharmap. Several Python packages, nano, Mesa, Git and Xfce packages also received minor updates.

The most recent snapshot, 20200331, is trending well with a stable rating of 99 on the Tumbleweed snapshot reviewer. The GNOME Character Map, gucharmap, updated to version 13.0.0, but no changelog was provided. An update for glib2 2.62.6 is expected to be the final release of the stable 2.62.x series; maintenance efforts will shift to the newer 2.64.x series. The updated glib2 package fixed SOCKS5 username/password authentication. The 2.34 binutils package added and removed a few patches. GTK3 3.24.16 fixed problems with clipboard handling and fixed a crash in the Wayland input method. The package for creating business diagrams, kdiagram 2.6.2, fixed a printing issue. The Linux Kernel updated to 5.5.13, which included a handful of Advanced Linux Sound Architecture changes. The 5.6.x kernel is expected to be released in a Tumbleweed snapshot soon. The libstorage-ng 4.2.71 package simplified combining disks with different block sizes into RAID. The programming language vala 0.46.7 made various improvements and bug fixes and now properly sets CodeNode.error when reporting an error. Several xfce4 packages were updated; xfce4-pulseaudio-plugin 0.4.3 fixed various memory leaks and warnings, and xterm 353 was also updated. The yast2-firewall 4.2.4 package was updated and now forces a reset of the firewalld API instance after modifying the service state, and yast2-storage-ng 4.2.104 extended and improved the Application Programming Interface to get udev names for a block device.

Snapshot 20200326 brought pipewire 0.3.1, the package that improves audio and video handling under Linux; it switched its license to MIT, added fdupes to BuildRequires and now passes the fdupes macro while removing duplicate files. The 1.1.9 spec-cleaner package dropped travis and tox and now uses GitHub Actions. Several Python packages arrived in this snapshot. The python-packaging 20.3 update fixed a bug that caused a 32-bit OS running on a 64-bit ARM CPU (e.g. ARM-v8, aarch64) to report the wrong bitness, and python-SQLAlchemy 1.3.15 fixed a regression introduced in 1.3.14. The Xfce file manager package, thunar 1.8.14, updated translations and reverted a change that had introduced a regression. The snapshot recorded a stable rating of 99.

A stable rating of 97 was recorded for snapshot 20200325. The snapshot updated ImageMagick to version 7.0.10.2, which fixed another sizing issue with the label coder when pointsize is set. Mesa 20.0.2 provided several fixes for the code base. The bluez 5.54 package fixed Bluetooth issues by checking that the class UUID matches before connecting the profile. Git 2.26.0 improved the handling of sparse checkouts. Text editor nano 4.9 now gives the new paragraph and the succeeding one the appropriate first-line indent when justifying a selection. The latest stable version of the in-memory database, redis 5.0.8, uses the tmpfiles macros instead of calling systemd-tmpfiles directly and building wrong macro paths. The sssd 2.2.3 package brought new features such as the “soft_ocsp” and “soft_crl” options, which make the checks for revoked certificates more flexible if the system is offline.

The 20200324 snapshot recorded a stable rating of 93. The snapshot also had an update for ImageMagick and an updated version of Mozilla Thunderbird, 68.6.0. The email client adds a new popup display window when starting up on a new profile. A fix for the linker version script was made in FUSE (Filesystem in Userspace) 3.9.1, which provides a simple interface for userspace programs to export a virtual filesystem to the Linux kernel. The lensfun package jumped from version 0.3.2 to 0.3.95 and provides support for several new cameras and lenses. Other packages updated in the snapshot were mercurial 5.3.1, php7 7.4.4 and zypper 1.14.35.

Systemd 245 is expected to arrive in a snapshot in the coming days.

Kubic with Kubernetes 1.18.0 released

Announcement

The Kubic Project is proud to announce that Snapshot 20200331 has been released containing Kubernetes 1.18.0.

Release Notes are available HERE.

Upgrade Steps

All newly deployed Kubic clusters will automatically be Kubernetes 1.18.0 from this point.

For existing clusters, please follow the new documentation on our wiki HERE.

Thanks and have a lot of fun!

The Kubic Team

openSUSE Tumbleweed – Review of the week 2020/14

Dear Tumbleweed users and hackers,

The week started with problems inside the openSUSE Tumbleweed distribution (caught by QA, so no worries) and ended even worse: we have had some trouble on openQA since Thursday and many tests are failing. The failures seem to be related more to openQA’s infrastructure, though, than to openSUSE Tumbleweed. Nevertheless, we will not publish new snapshots until QA is stable again. During this week we have thus only released two snapshots: 0326 and 0331 (promised, no joke).

The snapshots contained these updates:

- Kubernetes 1.18.0

- Linux kernel 5.5.13

- Rust 1.41.1

- XFCE 4.14.2

The things being worked on are:

- KDE Plasma 5.18.4.1

- Linux kernel 5.6.0

- Systemd 245: homed will not be enabled/offered just yet

- Poppler 0.86.1

- GNOME 3.34.5: last stable version from the 3.34 branch; this supposedly still gets into Leap 15.2 (via SLE). After this, the team can start focusing on GNOME 3.36.x; a maintained mozjs68 package is the main blocker on this path though

- GNU Make 4.3: missing only a fix for daps

- Guile 3.0.2: breaks gnutls’ test suite on i586

- LLVM 10

- Qt 5.15.0 (currently beta2 being tested)

- Ruby 2.7 – possibly paired with the removal of Ruby 2.6

- GCC 10 as the default compiler

- Removal of Python 2: quite some progress done, but not fully there yet

Sysroot does not seem to be an OS tree

Rant of the day: well, at least Microsoft is making loads of money...

Reputation, I'm convinced, is the main reason for that.

We teach them the wrong thing

Unfortunately, a lot of people try to convince schools, governments, charitable organizations and even companies to not pay anything at all. They are promoting open source solutions as an alternative that is cheaper or free, which just makes it look inferior to management. They are not telling organizations to pay local and open source product companies instead of Microsoft.

Open source/Free Software advocates hammer on "but it is free"! And when they do, THEY probably think of Freedom. But the person they talk to just thinks "cheap and bad", no matter how you try to explain freedom. Nobody gets that, really, even if they nod politely while thinking what a silly, idealistic nerd you are. Been there, done that.

I love the enthusiasm, yes, but in the end it is not helpful: it presents open source as a crappy but cheaper alternative without any real support. Well, there are a few overloaded volunteer enthusiasts who might do a great job for a volunteer but can't compete with a bunch of full time paid people at Microsoft. So the schools and governments and companies will simply use those 'free' (as in cheap and crappy) services as a stop-gap and then beg their bosses for budget to be able to pay a "proper" Microsoft service. There goes more public money in NOT public code.

We need to stop teaching companies that open source is a crappy, cheaper alternative to proper, paid alternatives from big American companies and instead tell them that they can pay for an open source solution that has really good support, no vendor lock-in, doesn't leak your data, protects your privacy and is actually better in many other ways. That way open source companies can actually hire people to make products better instead of just doing consulting one customer at a time.

And yes, some companies and some business areas have figured this out - Red Hat and SUSE are obvious examples, and projects like OpenStack have lots of paid people involved. But lots of other companies, from Bareos (backup) to Kolab (groupware) have struggled for years if not decades to build a product, instead getting sucked into consulting.

It doesn't work that way

I have seen loads of open source product companies go bankrupt or just give up and become consulting firms because their customers simply expected everything for free and to only pay a bit for consulting. Lots of open source people work at or set up their own consulting firms, occasionally even contributing a patch to upstream - but not building a product. Not that they don't want to, but they quickly find out that working your ass off for a maybe decent hourly rate does not leave you time to actually work on the thing you wanted to improve in the first place. Indeed, you can't build a good end user product that way. Frank and I put together a talk about this recently:

I have also recently written an article about this entire thing, explaining why, of all the business models around open source, only subscriptions can lead to a sustainable business that actually builds a great product. It will hopefully soon be on opensource.com.

Yeah but volunteers...

Are fundamental to open source, yes, no doubt. At Nextcloud we could not have built what we did without lots of volunteers, heck, nearly everybody at Nextcloud was a volunteer at some point. And yes, all code we write is AGPL, and that, too, is important. I am NOT arguing against that, not in the least.

What I say is:

- You can't build a great product without paid developers*

- You can't build a great product on consulting and only getting paid for setting it up/hosting

- You can build a better product collaboratively

- And the (A)GPL are the best licenses to do that

I'm sure there are exceptions to those rules, yes. But compare a great product like Krita, see how its developers struggle every day to be able to pay the bills of just a few full-time volunteers. Do you know how they are currently paying most of them? Last time I spoke to Boudewijn, the reality was sad: the Microsoft App store. Yup. How many does Adobe manage to pay to work on its products? Why should our ambition not be to have as many people working on Krita? Of course it should be. And yes, keep it open source. Is that doable?

Of course it is. Well, maybe not Adobe levels, but we can absolutely do better.

Missed opportunities

I said this was a rant, so I do have to complain a bit. My biggest regret is that KDE failed to catch up during the netbook period (around 2005). I believe that it is in no small part because we failed to work with businesses. Idealism can be super helpful and can also totally keep you irrelevant.

KDE is, lately, working more with companies, trying to build up more business around its product. GNOME has been far better at that for a far longer time, by the way. It is hard, and companies like Kolab, struggling for the last ~20 years to make things work, have shown that. Just being a for-profit obviously doesn't solve all problems. Idealism and hard work are not enough to make a business work. But we can do better, and Nextcloud is an example that shows we can. Now not all things are freaking awesome at Nextcloud, really - we work our a**** off and it is hard. We put on our best face in public but sometimes I just want to bang my head on and in the wall...

Still, see the video, read the blog hopefully soon on opensource.com - there are ways.

Thoughts welcome.

Edit:

* let me qualify that statement. You can do it without paid developers in a small project, I dunno, grep or ls or the awesome simplescreenrecorder and tools like that. With those there is a risk of the apps going unmaintained and new ones popping up all the time - look at music players in the KDE community. I'd rather see one well maintained than new ones pop up with all their different flaws, but I totally get that for a volunteer it is often easier and more fun to start fresh. In either case, once you start building something huge, it gets pretty hard without long term dedicated resources. Note that it can be donations-run (like Krita and many others), with a charitable organization. I do think it is about more than 'just' the resources. If somebody 'just' sponsored 25 people to work full-time on Nextcloud, the end result would be different than the situation today. The need to deliver something that makes customers happy (which means focus on details, scalability etc!) and pressure to do things you wouldn't want to do in your free time (developer documentation...) make a big difference.

In any case, I really don't think projects like LibreOffice, Firefox, Nextcloud, KDE or GNOME and the Linux kernel itself would be where they are today without people paid to work on them.

Update on openSUSE + LibreOffice Conference

Organizers of the openSUSE + LibreOffice Conference had a meeting this week to discuss various topics surrounding COVID-19 and how it may affect the conference and planning for it.

At this point, it is uncertain what restrictions governments may keep in place in the coming months. While October is some months away, there are many aspects we are considering as to how to run the openSUSE + LibreOffice Conference.

Travel restrictions, flights, hotel and venue availability, event capacity and our community members’ ability to attend the conference are all factors we are considering. We hope to make a decision about the conference at the latest by mid-June.

In these difficult times, we want to assure our communities that we are actively working toward a good outcome for all members, sponsors and interested parties involved with a successful openSUSE + LibreOffice Conference. We are looking at alternatives for the conference, such as possibly holding a virtual conference, and exploring what tools might help us achieve this should we decide it’s a viable option.

Please remember that the Call for Papers is open and people can submit their talks until July 21 at events.opensuse.org. We are moving forward, assuming the conference will take place as planned from Oct. 13 - 16, and will keep our communities informed of any decisions we make regarding the plans for the conference and any alternative options like a virtual conference. Stay safe, healthy and strong during these hard times. We hope to see all of you later this year, celebrating our anniversary together under much better circumstances!

Highlights of YaST Development Sprint 96

While many activities around the world slow down due to the COVID-19 crisis, we are proud to say the YaST development keeps going at full speed. To prove that, we bring you another report about what the YaST Team has been working on during the last couple of weeks.

The releases of openSUSE Leap 15.2 and SUSE Linux Enterprise 15 SP2 are approaching. That implies we invest quite some time fixing bugs found by the testers. Many of them are not especially exciting but we still have enough interesting topics to report about:

- More news about the new Online Search functionality

- Improvements in the user interface to configure NTP

- Progress in the support of Secure Boot for s390 mainframes

- Better reporting in AutoYaST

- Some bugfixes related to the handling of storage devices

- And a bonus: our new tool for mass review of GitHub pull requests

So let’s start!

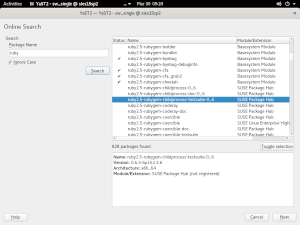

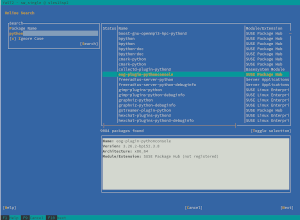

The Online Search Keeps Improving

This is not the first time our loyal readers learn about the YaST feature to search online for packages within SLE modules and extensions. We initially presented it three reports ago, followed by a review of several usability improvements we had decided to implement on top of that initial version.

But, as usual, SUSE’s QA department did a pretty good job forcing us to go one step further and provided us with useful information about how to improve the functionality. Apart from some minor bugs, they reported that there were important performance problems and that the UX could be improved.

The performance issues were annoying when working with big result sets. In one of our testing machines, it took several seconds to display the found packages after having received the list from the SUSE Customer Center. Moreover, scrolling through the results was rather slow too. Hopefully, those problems are gone now: most of the time is spent in network communication and scrolling works smoothly.

Regarding the UX, we introduced a few changes:

- Now there is a button to make clear how to select/unselect a package for installation. In the text-based interface, it was rather easy to infer that pressing Enter was enough. However, in the graphical alternative, things were not that easy.

- We have added some information about how many packages were found.

- The help texts were extended and improved.

But the Online Search UI is not the only interface that received some love…

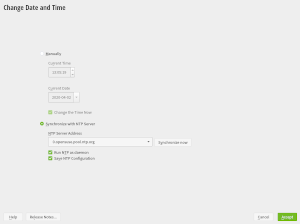

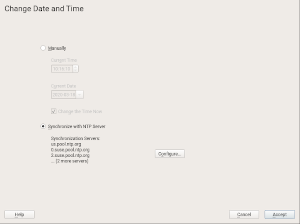

The Strange Case of the Multiple NTP Servers

YaST NTP allows configuring a list of NTP servers to use for synchronizing the date and time of the system. But one of our beloved users reported that a certain sequence of steps in the YaST Timezone module could ruin that list, reducing it to only its first entry. That was caused by a lack of consistency between both YaST modules.

YaST Timezone was designed a long time ago to, unlike YaST NTP, display and configure exactly one server. You may be asking, why can NTP be configured from YaST Timezone if there is a specific and more advanced YaST module for that purpose? The answer is that the YaST Timezone dialog is the only one available during installation, where it makes sense to offer the timezone and NTP configuration all together and with simplified options.

That simplicity also makes sense in an installed system for users with a basic configuration. But in systems with an advanced setup, we adapted that dialog to display the list of servers and to not offer any shortcut to adjust that configuration. Instead, the YaST Timezone dialog offers only a “Configure” button that opens the YaST NTP dialog, where the user can fine-tune the NTP configuration at will.

Secure Boot in zSeries Mainframes - Second Round

A couple of reports ago, we presented the initial support for zSeries Secure boot. We have continued improving that feature based on the feedback received from early testers and mainframe specialists.

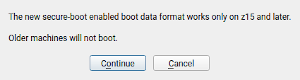

Now, YaST behavior is better adapted to the characteristics of the system on which it is being executed. We could go into details about each zSeries model and how YaST behaves based on its hardware configuration. But since an image is worth a thousand words, let’s just illustrate it with this new warning about the z15+ requirement, displayed when Secure Boot support is turned on.

The help texts and the information displayed in the installer proposal have also been adapted to better explain the consequences of the possible settings in YaST. Once again, let’s see it with an example image.

But, as you already know, this is not the only part of YaST we are improving step by step, one sprint after the other…

More Sanity Checks in AutoYaST

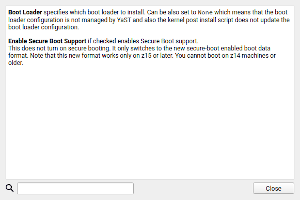

As part of our continuous effort to improve AutoYaST error handling and reporting capabilities (see this section of the previous report), we have added a new check for multi-device technologies. Thus, if you are setting up an LVM volume group, a RAID, a Bcache or a multi-device Btrfs filesystem, AutoYaST makes sure that their components are also properly defined in the AutoYaST profile.

For instance, let’s say you want to set up a new LVM volume group but you forget to define which devices are going to act as physical volumes for it. In such a case, the new version of AutoYaST informs about the missing definitions and stops the installation.

In the image below you can see how the error reporting mechanism looks. In this example, it reports the AutoYaST profile contains a new multi-device Btrfs file system, but it does not specify which disks or partitions should be part of that file system.
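The idea behind such a check can be sketched in a few lines of Python. This is only an illustration, not AutoYaST code: the real profile is XML with its own structure, and the dictionary layout, the `components` key and the function name are all assumptions made up for this example.

```python
# Hypothetical, simplified stand-in for an AutoYaST profile. The real profile
# is XML and is structured differently; these keys are invented for the sketch.
MULTI_DEVICE_TYPES = {"lvm", "raid", "bcache", "btrfs"}


def devices_without_components(drives):
    """Return the names of multi-device entries that list no components."""
    return [
        drive["device"]
        for drive in drives
        if drive["type"] in MULTI_DEVICE_TYPES and not drive.get("components")
    ]


profile = [
    {"device": "/dev/sda", "type": "disk"},
    # A volume group with no physical volumes defined: this should be reported.
    {"device": "/dev/system", "type": "lvm", "components": []},
    {"device": "/dev/md0", "type": "raid", "components": ["/dev/sdb1", "/dev/sdc1"]},
]

problems = devices_without_components(profile)
print(problems)
```

In this sketch only the volume group is reported, which corresponds to the case where AutoYaST would inform about the missing definitions and stop the installation.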

It’s all About Blocks

As mentioned in the previous section and as all our users know, YaST and AutoYaST can be used to define a software RAID in which several disks or devices are combined for extra performance, extra reliability or a combination of both.

The usual scenario is to combine similar disks. But the RAID technology in Linux is so advanced that it allows combining disks with different block sizes into the same array. Thanks to a recent bug report, we realized YaST was not handling that situation in the best way. That led to a wrong estimation of the final size of the RAID device which, in turn, led to possible errors while creating partitions in it.

We have fixed the libstorage-ng code and its documentation, which now offers an accurate description of how the situation is handled in Linux and in our storage library.

Apart from the creation of the RAID itself, the YaST Partitioner also offers some related functionality that is very handy in setups with many disks. For example, the button “Clone Partitions to Other Device” can be used to replicate the same initial layout in all the disks that are going to be subsequently combined using the RAID technology.

When using that button, the Partitioner tries to only offer destination devices that make sense. That means they have to be at least as big as the source device, they have to have the same topology, etc. But guess what! We found out it didn’t check for the block sizes. That is also fixed now and future versions of the Partitioner will not allow cloning a partition table into another disk with a different block size, something that would lead to failures in most cases.
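The filtering described above can be sketched roughly as follows. This is a Python illustration under simplifying assumptions, not the Partitioner's actual code: the `Disk` class, its fields and the single `topology` string are hypothetical stand-ins for the much richer data the storage library works with.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Disk:
    name: str
    size: int        # total size in bytes
    block_size: int  # block size in bytes
    topology: str    # simplified placeholder for the real topology data


def clone_candidates(source, disks):
    """Return the disks that could receive a copy of the source partition table."""
    return [
        disk
        for disk in disks
        if disk.name != source.name
        and disk.size >= source.size              # at least as big as the source
        and disk.topology == source.topology      # same topology
        and disk.block_size == source.block_size  # the newly added check
    ]


source = Disk("sda", 500 * 10**9, 512, "rotational")
others = [
    Disk("sdb", 500 * 10**9, 512, "rotational"),
    Disk("sdc", 500 * 10**9, 4096, "rotational"),  # different block size
    Disk("sdd", 250 * 10**9, 512, "rotational"),   # too small
]

candidates = [disk.name for disk in clone_candidates(source, others)]
print(candidates)
```

Only `sdb` survives the filter here; `sdc` would have been offered before the block size comparison was added.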

More Accurate Detection of zFCP devices

And talking about storage devices, recently we got a bug report about AutoYaST not being able to install SUSE on an s390 mainframe. After checking the logs and all the information provided, we found out that the profile was basically wrong as it contained the following definition for a zFCP device:

    <listentry>
      <controller_id/>
      <fcp_lun>0x0000000000000000</fcp_lun>
      <wwpn>0x0000000000000000</wwpn>
    </listentry>

Apart from the controller_id being missing, fcp_lun and wwpn look wrong too. So the profile is invalid, and there is nothing that AutoYaST can do about it. Done! Well, not that fast: the problem is that the profile was generated by AutoYaST itself.

We discovered that AutoYaST was wrongly identifying an iSCSI device as a zFCP one. So the profile excerpt above corresponds to an iSCSI device which, obviously, does not have any of those attributes.

A simple fix solved the issue and zFCP devices are now properly detected in openSUSE Tumbleweed and in the AutoYaST version that will be shipped with openSUSE Leap 15.2 and SLES-15-SP2.
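For readers who want to spot entries like the one above in their own profiles, here is a small Python sketch that flags the same three symptoms. It is not part of AutoYaST; the function name and the rule that an all-zero value is suspicious are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET

# The entry quoted above, as it appeared in the generated profile.
ENTRY = """<listentry>
  <controller_id/>
  <fcp_lun>0x0000000000000000</fcp_lun>
  <wwpn>0x0000000000000000</wwpn>
</listentry>"""


def suspicious_fields(xml_text):
    """Return a description of each field that is empty or all zeros."""
    entry = ET.fromstring(xml_text)
    problems = []
    for tag in ("controller_id", "fcp_lun", "wwpn"):
        value = (entry.findtext(tag) or "").strip()
        if not value:
            problems.append(f"{tag} is empty")
        elif tag != "controller_id" and int(value, 16) == 0:
            problems.append(f"{tag} is all zeros")
    return problems


findings = suspicious_fields(ENTRY)
for line in findings:
    print(line)
```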

Beyond YaST: GitHub Review from Command Line

We have also reserved some development time for learning and innovation. This part reports the results of that work.

Sometimes we need to make a simple change, but in many Git repositories. Sometimes we need to touch all repositories, like when we need to change the CONTRIBUTING.md or some similar file.

Approving several dozen pull requests in the GitHub web user interface is not easy or convenient, so we have created a simple script which can approve the pull requests from the Linux command line. The tool is interactive: for each pull request it displays some details, the diff, the Travis status, etc… and then it asks for approval.

If you approve the request, it will be approved at GitHub with the usual “LGTM” (Looks Good To Me) message. If the request is not approved, you need to comment manually in the GitHub web UI to explain why. Unfortunately there is no easy way to comment on a diff from the command line…

For more details see this GitHub repository.
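The interactive flow described above could look roughly like this Python sketch. It is not the actual script from the repository: the dictionary keys, the `review` function and the `ask`/`approve` callbacks are hypothetical, and the demo uses canned answers instead of keyboard input or any GitHub API calls.

```python
def review(pull_requests, ask, approve):
    """Show each pull request, ask for a decision and approve the accepted ones.

    Returns the pull requests that were not approved, since those still need
    a manual comment in the web UI.
    """
    skipped = []
    for pr in pull_requests:
        print(f"{pr['repo']}#{pr['number']}: {pr['title']} (CI: {pr['ci']})")
        print(pr["diff"])
        if ask("Approve? [y/N] ").strip().lower() == "y":
            approve(pr, "LGTM")
        else:
            skipped.append(pr)
    return skipped


# Demo data and stubs; a real tool would fetch these from GitHub and call
# its review API inside the approve callback.
pulls = [
    {"repo": "example/repo-a", "number": 1, "title": "Update CONTRIBUTING.md",
     "ci": "passed", "diff": "+ new line"},
    {"repo": "example/repo-b", "number": 2, "title": "Update CONTRIBUTING.md",
     "ci": "failed", "diff": "+ new line"},
]
answers = iter(["y", "n"])
approved = []

skipped = review(
    pulls,
    ask=lambda prompt: next(answers),
    approve=lambda pr, message: approved.append((pr["repo"], message)),
)
print(approved)
```

Passing `input` as the `ask` callback would turn the demo into an interactive session.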

Last words… for now

As you can see, the bodies of the YaST Team members may be confined at home, but our minds are still out there, creating, fine-tuning and delivering software for you. And you can help us by testing the beta versions of openSUSE Leap 15.2 and SUSE Linux Enterprise 15 SP2 or just keeping your openSUSE Tumbleweed up to date and reporting any anomalous situation you find in YaST.

We will be back with more news in approximately two weeks. Meanwhile, have a lot of fun and take care of you and yours.

Changing or renaming an Oracle user schema

Renaming or changing a schema is not an easy task in Oracle, but if you really want to rename a schema, go for the traditional way: export the existing schema and import it into a new one.

The steps shown in this tutorial use Oracle 11g; they may not work on newer versions.

    > select * from v$version;
    |================================================================================|
    |BANNER |
    |================================================================================|
    |Oracle Database 11g Release 11.2.0.4.0 - 64bit Production |
    |PL/SQL Release 11.2.0.4.0 - Production |
    |CORE 11.2.0.4.0 Production |
    |TNS for Linux: Version 11.2.0.4.0 - Production |
    |NLSRTL Version 11.2.0.4.0 - Production |

Data Pump Mapping to the imp Utility

Please note that Data Pump import parameters often don’t map one-to-one to the legacy utility’s parameters. Data Pump import automatically provides many features of the old imp utility.

For example, COMMIT=Y isn’t required because Data Pump import automatically commits after each table is imported. The table below describes how the legacy import parameters used here map to Data Pump import.

| Original imp Parameter | Similar Data Pump impdp Parameter |
|---|---|
| FROMUSER | REMAP_SCHEMA |
| TOUSER | REMAP_SCHEMA |

How I am going to rename my Oracle schema

Let’s say I accidentally created the user schema HOST_USER when it was supposed to be host1.

    -- create the tablespace first, because the user below refers to it
    create TABLESPACE host_sk datafile 'host_sk.dbf' size 1G autoextend on maxsize 8G;

    -- create user schema (with the mistaken username)
    create user HOST_USER IDENTIFIED BY password4sk default TABLESPACE host_sk;

    -- create database role
    create role HOST_SK_ROLE;

    -- grant some privileges to the role we created
    grant
      CREATE SESSION, ALTER SESSION, CREATE MATERIALIZED VIEW, CREATE PROCEDURE,
      CREATE SEQUENCE, CREATE SYNONYM, CREATE TABLE, CREATE TRIGGER, CREATE TYPE,
      CREATE VIEW, DEBUG CONNECT SESSION
    to HOST_SK_ROLE;

    -- grant that role to the user (that I mistakenly created previously)
    -- and give them a tablespace quota
    GRANT HOST_SK_ROLE TO HOST_USER;
    ALTER USER HOST_USER QUOTA unlimited ON host_sk;

    -- create table (named ARTICLE_SW so that it matches the insert below
    -- and the table shown in the export log)
    CREATE TABLE "HOST_USER"."ARTICLE_SW"
      ("TRANSFERID" NUMBER(9,0), "ARTICLE_ID" VARCHAR2(14),
      "QUANTITY" NUMBER(6,0)) SEGMENT CREATION IMMEDIATE
      PCTFREE 10 PCTUSED 40 INITRANS 1 MAXTRANS 255
      NOCOMPRESS LOGGING
      STORAGE(INITIAL 1048576 NEXT 1048576 MINEXTENTS 1 MAXEXTENTS 2147483645
      PCTINCREASE 0 FREELISTS 1 FREELIST GROUPS 1
      BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT)
      TABLESPACE "HOST_SK" ;

    -- insert some data, using only the columns defined above
    INSERT INTO HOST_USER.ARTICLE_SW
      (TRANSFERID, ARTICLE_ID, QUANTITY)
    VALUES (1, '1003', 10);

As you can see, I had already done a lot with my database when I realized the schema should be HOST1 instead of HOST_USER! I want to rename the schema. Unfortunately, Oracle doesn’t allow changing a schema name easily.

The trick is to import the export and map it to the HOST1 schema. (If you follow my steps, please don’t just copy and paste. Create the target user if you don’t have it yet; as long as that user has the same privileges and tablespace, it will be fine.)

Export with Oracle Data Pump

    $ expdp HOST_USER/password4sk directory=tmp schemas=HOST_USER dumpfile=old_schema_to_remap.dmp LOGFILE=exp_schema_to_remap.log
    Export: Release 11.2.0.4.0 - Production on Thu Apr 2 04:17:08 2020
    Copyright (c) 1982, 2011, Oracle and/or its affiliates. All rights reserved.
    Connected to: Oracle Database 11g Release 11.2.0.4.0 - 64bit Production
    Starting "HOST_USER"."SYS_EXPORT_SCHEMA_01": HOST_USER/******** directory=tmp schemas=HOST_USER dumpfile=old_schema_to_remap.dmp LOGFILE=exp_schema_to_remap.log
    Estimate in progress using BLOCKS method...
    Processing object type SCHEMA_EXPORT/TABLE/TABLE_DATA
    Total estimation using BLOCKS method: 0 KB
    Processing object type SCHEMA_EXPORT/PRE_SCHEMA/PROCACT_SCHEMA
    Processing object type SCHEMA_EXPORT/TABLE/TABLE
    Processing object type SCHEMA_EXPORT/TABLE/COMMENT
    Processing object type SCHEMA_EXPORT/TABLE/INDEX/INDEX
    Processing object type SCHEMA_EXPORT/TABLE/CONSTRAINT/CONSTRAINT
    Processing object type SCHEMA_EXPORT/TABLE/INDEX/STATISTICS/INDEX_STATISTICS
    . . exported "HOST_USER"."ARTICLE_SW" 0 KB 0 rows
    Master table "HOST_USER"."SYS_EXPORT_SCHEMA_01" successfully loaded/unloaded
    ******************************************************************************
    Dump file set for HOST_USER.SYS_EXPORT_SCHEMA_01 is:
    /tmp/old_schema_to_remap.dmp
    Job "HOST_USER"."SYS_EXPORT_SCHEMA_01" successfully completed at Thu Apr 2 04:17:21 2020 elapsed 0 00:00:13

Import to other target user via remap_schema parameter

    $ impdp userid=host1/password4sk directory=tmp dumpfile=old_schema_to_remap remap_schema=HOST_USER:host1 LOGFILE=imp_schema_to_remap.log
    Import: Release 11.2.0.4.0 - Production on Thu Apr 2 04:19:07 2020
    Copyright (c) 1982, 2011, Oracle and/or its affiliates. All rights reserved.
    Connected to: Oracle Database 11g Release 11.2.0.4.0 - 64bit Production
    Master table "HOST1"."SYS_IMPORT_FULL_01" successfully loaded/unloaded
    Starting "HOST1"."SYS_IMPORT_FULL_01": userid=host1/******** directory=tmp dumpfile=old_schema_to_remap remap_schema=HOST_USER:host1 LOGFILE=imp_schema_to_remap.log
    Processing object type SCHEMA_EXPORT/PRE_SCHEMA/PROCACT_SCHEMA
    Processing object type SCHEMA_EXPORT/TABLE/TABLE
    Processing object type SCHEMA_EXPORT/TABLE/TABLE_DATA
    . . imported "HOST1"."ARTICLE_SW" 0 KB 0 rows
    Job "HOST1"."SYS_IMPORT_FULL_01" successfully completed at Thu Apr 2 04:19:09 2020 elapsed 0 00:00:01

After the import via Data Pump, the next step is just to drop the old schema, for example with DROP USER HOST_USER CASCADE once you have verified the imported data.

This is much easier than redoing everything, IMHO. Anyway, please be careful. I am a novice Oracle DBA, so my steps may not be suitable for you :smile:

Chafa 1.4.0: Now with sixels

April 1st seems like as good a time as any for a new Chafa release — though note that Chafa is no joke. At least not anymore, what with the extremely enterprise-ready sixel pipeline and all.

As usual, you can get it from the download page or from GitHub. There are also release notes. Here are the highlights:

Sixel output

Thanks to this 90s-era technology, you can print excellent-looking graphics directly in the terminal with no need for character cell mosaics or hacky solutions like w3mimagedisplay (from w3m) or Überzug. It works entirely using ANSI escape sequence extensions, so it's usable over ssh, telnet and that old 2400 baud modem you found in grandma's shed.

The most complete existing implementation is probably Hayaki Saito's libsixel, but I chose to write one from scratch for Chafa, since sixel output is remarkably intensive computationally, and I wanted to employ a combination of advanced techniques (parallelism, quantization using a PCA approach, SIMD scaling) and corner-cutting that wouldn't have been appropriate in that library. This gets me fast animation playback and makes it easier to phase out the ImageMagick dependency in the long term.

There are at least two widely available virtual terminals that support sixels: One is XTerm (when compiled with --enable-sixel), and the other is mlterm. Unfortunately, I don't think either is widely used compared to distribution defaults like GNOME Terminal and Konsole, so here's hoping for more mainstream support for this feature.

Glyph import

If sixels aren't your cup of tea, symbol mode has a new trick for you too. It's --glyph-file, which allows you to load glyphs from external fonts into Chafa's symbol map. This can give it a better idea of what your terminal font looks like and allows support for more exotic symbols or custom fonts to suit any respectable retro graphics art project.

Keep in mind that you still need to select the appropriate symbol ranges with --symbols and/or --fill. These options now allow specifying precise Unicode ranges, e.g. --symbols 20,41..5a to emit only ASCII spaces and uppercase letters.
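To make the range notation concrete, here is a small Python sketch that expands a specification like the one above into the characters it covers. It is not Chafa's parser, and the function name is made up; it only handles comma-separated hexadecimal code points and `..` ranges, while the real options accept more than that.

```python
def parse_ranges(spec):
    """Expand a spec such as '20,41..5a' into a sorted list of code points."""
    points = set()
    for part in spec.split(","):
        first, _, last = part.partition("..")
        low = int(first, 16)
        high = int(last, 16) if last else low
        points.update(range(low, high + 1))
    return sorted(points)


selected = "".join(chr(cp) for cp in parse_ranges("20,41..5a"))
print(repr(selected))  # ' ABCDEFGHIJKLMNOPQRSTUVWXYZ'
```

Code point 20 is the ASCII space, and 41 through 5a are the uppercase letters A to Z.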

Color extraction

In symbol mode, each cell's color pair is now based on the median color of the underlying pixels instead of the average. Now this isn't exactly a huge feature, at least not in terms of effort, but it can make a big difference for certain images, especially line art. You can get the old behavior back with --color-extractor average.
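A toy Python example shows why this matters for line art. It is not how Chafa computes cell colors internally; it assumes a per-channel median over a small group of RGB pixels, which is just one simple way to read "median color", and both function names are invented for the sketch.

```python
from statistics import median


def average_color(pixels):
    """Per-channel integer mean of a list of (r, g, b) tuples."""
    count = len(pixels)
    return tuple(sum(p[channel] for p in pixels) // count for channel in range(3))


def median_color(pixels):
    """Per-channel median of a list of (r, g, b) tuples."""
    return tuple(int(median(p[channel] for p in pixels)) for channel in range(3))


# A mostly white patch of paper with a thin black stroke running through it.
pixels = [(255, 255, 255)] * 6 + [(0, 0, 0)] * 2

print(average_color(pixels))  # (191, 191, 191)
print(median_color(pixels))   # (255, 255, 255)
```

The average drags the white paper toward gray because of two dark pixels, while the median keeps it white.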
