FOSSCOMM 2019 aftermath

FOSSCOMM (Free and Open Source Software Communities Meeting) is a Greek conference aimed at free-software and open-source enthusiasts, developers, and communities. This year it was held in Lamia from October 11 to October 13.

It is a tradition for me to attend this conference. Usually, I give presentations and, of course, run booths to inform the attendees about the projects I represent.

This year the structure of the conference was somewhat different. Usually, the conference starts on Friday with a "beer event"; this time it started with registration and a presentation. I planned to leave Thessaloniki by bus, and the trip took about 4 hours. So when I arrived, I went to my hotel and then waited for Pantelis so we could go to the university and set up our booths.

ALERT: Long list of projects ahead...

Our goal was to put the stickers and leaflets in the right places. This year we had plenty of projects at our booths. We met a lot of friends at the Nextcloud conference and asked them for brochures and stickers. So this year our main projects were Nextcloud and openSUSE (we had tablecloths). We had stickers from GNOME (I had a couple of T-shirts from GUADEC just in case someone wanted to buy one). Since openSUSE sponsors GNU Health, I was there to inform students about it (it was a great opportunity, since the organizing department was the Bioinformatics department). We had brochures, stickers, chocolate and pencils from ONLYOFFICE, and we also had promo material from our friends at Turris. We are happy that the Free Software Foundation Europe gave us brochures when we were in Berlin, so we were able to inform attendees about their campaigns and the work they are doing for us. We also met the Collabora folks and asked them if they wanted us to promote them, since Collabora and Nextcloud work together. Finally, our friends from DAVx5 gave us their promo material, since the app works so well with Nextcloud.

I warned you!!! Well, on the first day we met the organizers and the volunteers. I was surprised by the number of volunteers and their willingness to help us (even with setting up the booths). The first day ended with going out to eat something. Thank you, Olga, for introducing us to FRESCO. I used to eat at FRESCO when I was in Barcelona. I guess it's not a franchise :-)

Well, Saturday started with registration. We put more swag on the booth (we saw that people had taken almost everything the night before). Personally, I went to meet other projects. I was glad that my friend Julita applied to present what she's doing at the university (Linux on supercomputers). I was somewhat surprised, but happy for her, that her talk was upgraded to a keynote. I'm glad I met her at GUADEC. I'm also glad that she had a Fedora booth, which gave a different aura to the conference. Check out her blog post about her FOSSCOMM experience.

I was glad to meet Boris from Tirana. He gave a presentation about Nextcloud as a service with Cloud68. We had never met before, although I can say that I know many people from Albania and Open Labs.

My presentation was the last one on Saturday, so I had plenty of time to be at the booth and inform anyone about all the above projects. I also had the opportunity to attend some talks I wanted to see. My talk was about communities, and I used my personal example. I started by explaining what a contribution to open source projects is. I focused on my own case, meaning end users who like the software and want to contribute but don't have a clue about programming languages. Personally, I translate and promote (articles, conferences, etc.). I have met a lot of people (in Greece and abroad) whom I consider friends. Those friends may even help you find a job (especially if you work in IT). The best part, though, is when we meet AFK and have fun.

You can see my presentation file here https://tinyurl.com/fosscomm2019.

After my presentation, there was a party (not because I had finished, but because it was on the schedule ;-) ). We had pizza and wine, and there was music. We left fairly early (I guess) with some FOSS friends and some volunteers and had a beer in the town center.

On Sunday I left Lamia by car with a friendly couple from Thessaloniki. She had also given a presentation (before mine) about "Building digital competency in European small and medium-sized businesses with Free and Open Source Software: Results of the FOSS4SMEs project". I was supposed to give another, more interesting talk for the Bioinformatics department (subject: "GNU HEALTH: The fight for our rights to universality and excellence in the Public Health System"), but due to my departure, it was canceled. On the day the schedule was published, I asked the organizers to move my talk to Saturday but never got an answer.

Well, I took some videos and I'll upload them to YouTube soon. Some volunteers took pictures, and I guess we'll have them soon. Once I have them all, I'll edit this post.

Finally, I would like to thank Nextcloud for sponsoring my trip to Lamia.

EDIT: Here is the video.

Manjaro | Review from an openSUSE User

Highlights of openSUSE Asia Summit 2019

The openSUSE.Asia Summit is one of the big events for the openSUSE community (i.e. both contributors and users) in Asia. People who normally communicate online can meet from all over the world, talk in person and have fun. Members of the community share their current knowledge and experience and learn about FLOSS technologies around openSUSE. The openSUSE.Asia Summit 2019 took place on October 5 and 6, 2019 at the Information Technology Department, Faculty of Engineering, Udayana University, Bali.

Highlight videos, Day 1 and 2

Further videos with lectures and workshops are available on YouTube.

Noodlings 6 | Symphony, Power Tools and Storage

Three Drives on my Dell Latitude E6440 | Cuz Two Isn’t Enough

openSUSE WSL images in OBS

A fundamental concept of all openSUSE packages as well as any image offered for download is a fully transparent, reproducible and automatic build and development process based on sources.

In openSUSE, developers do not perform manual builds on some specially crafted machine in their basement and then upload the result somewhere. Instead, all sources are stored in a version control system inside the Open Build Service (OBS) instance at build.opensuse.org. OBS then automatically builds the sources, including all dependencies, according to defined build instructions (e.g. spec files for RPMs). OBS also automatically adds cryptographic signatures to files that support it, to make sure nobody can tamper with those files.

The WSL appx files are basically zip files that contain a tarball of a Linux system (like a container) and a Windows exe file, the so-called launcher. Building a container is something OBS can already do fully automatically by means of Kiwi. The launcher as well as the final appx, however, are typically built by the developer on a Windows machine using Visual Studio.
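To make the "appx is basically a zip" point concrete, here is a minimal Python sketch that opens such an archive with the standard zipfile module and separates the root-filesystem tarball from the launcher exe. It assumes the appx is a plain zip as described above; the member names in the demo archive are made up for illustration, and a real appx also carries manifest and signature files that this sketch does not interpret.

```python
import io
import zipfile


def summarize_appx(data: bytes) -> dict:
    """Split the members of an appx (treated as a plain zip) into
    root-filesystem tarballs and Windows executables (the launcher)."""
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        names = archive.namelist()
    tarballs = [n for n in names if n.endswith((".tar", ".tar.gz", ".tar.xz"))]
    launchers = [n for n in names if n.lower().endswith(".exe")]
    return {"tarballs": tarballs, "launchers": launchers}


def build_demo_appx() -> bytes:
    """Build a toy archive with hypothetical member names for testing."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("install.tar.gz", b"rootfs placeholder")
        archive.writestr("openSUSE-Tumbleweed.exe", b"launcher placeholder")
        archive.writestr("AppxManifest.xml", "<Package/>")
    return buffer.getvalue()


if __name__ == "__main__":
    print(summarize_appx(build_demo_appx()))
```

On Linux the same inspection works on a downloaded appx file by passing its bytes to summarize_appx.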

Since the goal of the openSUSE WSL offering is to have the appx files produced officially and automatically along with other images such as the DVD installer, Live images or containers, the appx files have to be built from sources in OBS.

Fortunately, there’s already a MinGW cross toolchain packaged as RPMs in OBS, and a tool to generate appx files on Linux.

Combining all of that, OBS can actually build the WSL appx from sources. The current state of development can be found in the Virtualization:WSL project in OBS. The generated appx files are published on download.opensuse.org.

The current images for Leap 15.2 Alpha and Tumbleweed published there are already good enough for some testing, so please go ahead and try them; feedback is welcome!

Note that since the appx files are signed by OBS rather than Microsoft, there are a few steps required to install them.

Going forward, there is still quite some work needed to polish this up. Kiwi, for example, can’t build the appx directly itself; instead, fb-util-for-appx is called by a spec file. That requires some hacks in the OBS project config to work. On the Linux side, there’s currently no password set for the root user, so we need a better “first boot” solution. More details on that in a later article. Meanwhile, remember to have a lot of fun…

Highlights of YaST Development Sprint 86

Introduction

Now that you had a chance to look at our post about Advanced Encryption Options (especially if you are an s390 user), it is time to check what happened during the last YaST development sprint, which finished last Monday.

As usual, we have been working on a wide range of topics, which include:

- Improving support for multi-device file systems in the expert partitioner.

- Fixing networking, secure boot and kdump problems in AutoYaST.

- Avoiding the wait for chrony during initial boot when it does not make sense.

- Preparing to support the split of configuration files between /usr/etc and /etc.

- Using /etc/sysctl.d to write YaST-related settings instead of the /etc/sysctl.conf main file.

Expert Partitioner and Multi-Device File Systems

So far, the Expert Partitioner assumed that Btrfs was the only file system built on top of multiple devices. But that is not completely true, because some file systems are able to use an external journal to achieve better performance. For example, Ext3/4 and XFS can be configured to use separate devices for the data and the journal.

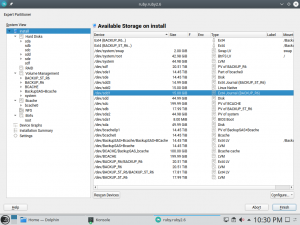

We received a bug report caused by this misunderstanding about multi-device file systems. The Expert Partitioner was labeling as “Part of Btrfs” a device used as an external journal of an Ext4 file system. So we have improved this during the last sprint, and now external journal devices are correctly indicated in the Type column of the Expert Partitioner, as shown in the screenshot below.

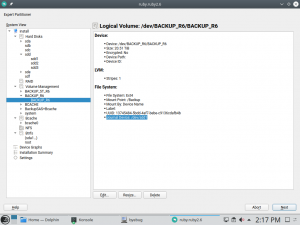

Moreover, the file system information now indicates which device is being used for the external journal.
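A toy model of this labeling fix could look like the following Python sketch. The FileSystem class, the DISPLAY table and the "Ext4 Journal" style label are hypothetical stand-ins (the post shows neither YaST's data model nor the exact label text); the point is only that a device used as an external journal is labeled after the file system it serves instead of being treated as part of a Btrfs.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Display names for the Type column (hypothetical; YaST's real strings may differ).
DISPLAY = {"btrfs": "Btrfs", "ext3": "Ext3", "ext4": "Ext4", "xfs": "XFS"}


@dataclass
class FileSystem:
    fs_type: str                          # e.g. "btrfs", "ext4", "xfs"
    data_devices: List[str]               # devices holding the file system data
    journal_device: Optional[str] = None  # external journal device, if any


def device_labels(filesystems: List[FileSystem]) -> Dict[str, str]:
    """Return a {device: label} map for the partitioner's Type column."""
    labels: Dict[str, str] = {}
    for fs in filesystems:
        name = DISPLAY.get(fs.fs_type, fs.fs_type)
        for dev in fs.data_devices:
            # Only file systems spread over several data devices get "Part of".
            labels[dev] = f"Part of {name}" if len(fs.data_devices) > 1 else name
        if fs.journal_device is not None:
            # The external journal belongs to its own file system, not to Btrfs.
            labels[fs.journal_device] = f"{name} Journal"
    return labels


if __name__ == "__main__":
    layout = [
        FileSystem("ext4", ["/dev/sda1"], journal_device="/dev/sdb1"),
        FileSystem("btrfs", ["/dev/sdc", "/dev/sdd"]),
    ]
    for device, label in sorted(device_labels(layout).items()):
        print(device, label)
```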

And finally, we have also limited the usage of such devices belonging to a multi-device Btrfs. Now, you will get an error message if you try to edit one of those devices. In the future, we will extend this feature to make it possible to modify file systems using an external journal from the Expert Partitioner.

AutoYaST Getting Some Love

During this sprint, we have given AutoYaST some attention in different areas: networking, bootloader and kdump.

Regarding networking, we have finished s390 support in the new network layer, fixing some old limitations in device activation and udev handling. Apart from that, we have fixed several bugs and improved the documentation a lot, as we found it to be rather incomplete.

Another important change was adding support for disabling secure boot for UEFI through AutoYaST. Of course, we updated the documentation too and, during the process, we added some elements that were missing and removed others that are not needed anymore.

Finally, we fixed a tricky problem when trying to get kdump to work on a minimal system. After some debugging, we found out that AutoYaST added kdump to the list of packages to install too late. This issue has been fixed and now it should work like a charm.

As you may have seen, apart from writing code, we try to contribute to the documentation so our users have a good source of information. If you are curious, apart from the documents for released SUSE and openSUSE versions, you can check the latest builds (including the AutoYaST handbook). Kudos to our documentation team for such awesome work!

Avoiding chrony-wait time out

Recently, some openSUSE users reported a really annoying issue in Tumbleweed. When time synchronization is enabled through YaST, the system might get stuck during the booting process if no network connection is available.

The problem is that, apart from the chrony service, YaST was enabling the chrony-wait service too. This service is used to ensure that the time is properly set before continuing with other services that can be affected by a time shift. But without a network connection, chrony-wait will wait for around 10 minutes. Unacceptable.

The discussion about the proper fix for this bug is still open, but for the time being, we have applied a workaround in YaST to enable chrony-wait only for those products that require precise time, like openSUSE Kubic. In all other cases, systems will boot faster even without network, although some services might be affected by a time shift.
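The workaround boils down to a per-product decision about which units to enable. A minimal Python sketch of that decision, assuming a hypothetical PRECISE_TIME_PRODUCTS set (the post only names openSUSE Kubic) and the usual systemd unit names chronyd.service and chrony-wait.service; it is an illustration, not the YaST code:

```python
from typing import List

# Hypothetical list of products that need precise time before services start;
# the post only mentions openSUSE Kubic as an example.
PRECISE_TIME_PRODUCTS = {"openSUSE Kubic"}


def time_sync_units(product: str) -> List[str]:
    """Return the systemd units to enable when time synchronization is on."""
    units = ["chronyd.service"]
    if product in PRECISE_TIME_PRODUCTS:
        # chrony-wait blocks boot until the clock is synchronized; without a
        # network it can wait around 10 minutes, so only add it where needed.
        units.append("chrony-wait.service")
    return units


if __name__ == "__main__":
    for product in ("openSUSE Kubic", "openSUSE Tumbleweed"):
        print(product, "->", time_sync_units(product))
```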

Splitting Configuration Files between /etc and /usr/etc

As Linux users, we are all used to checking for system-wide settings under /etc, which contains a mix of vendor and host-specific configuration values. This approach has worked rather well in the past, not without some hiccups, but when things like transactional updates come into play, the situation gets messy.

In order to solve those problems, the plan is to split configuration files between /usr/etc and /etc. The former would contain vendor settings while the latter would define host-specific values. Of course, such a move has a lot of implications.
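The post does not say how programs will choose between the two locations. A common convention, assumed here, is that a host-specific file in /etc takes precedence over the vendor default in /usr/etc. This minimal Python sketch implements only that assumed lookup order; resolve_config is a hypothetical helper, and real tools may merge files rather than pick one.

```python
import tempfile
from pathlib import Path
from typing import Optional


def resolve_config(name: str, etc_dir: Path = Path("/etc"),
                   vendor_dir: Path = Path("/usr/etc")) -> Optional[Path]:
    """Return the file to read for `name`, or None if neither exists.

    Assumed precedence: a host-specific copy in /etc wins over the
    vendor default shipped in /usr/etc.
    """
    for directory in (etc_dir, vendor_dir):
        candidate = directory / name
        if candidate.is_file():
            return candidate
    return None


if __name__ == "__main__":
    # Demo in a scratch directory instead of the real /etc and /usr/etc.
    with tempfile.TemporaryDirectory() as tmp:
        etc, vendor = Path(tmp, "etc"), Path(tmp, "usr", "etc")
        etc.mkdir()
        vendor.mkdir(parents=True)
        (vendor / "example.conf").write_text("vendor default\n")
        print(resolve_config("example.conf", etc, vendor))  # vendor copy
        (etc / "example.conf").write_text("admin override\n")
        print(resolve_config("example.conf", etc, vendor))  # /etc copy wins
```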

So during this sprint we tried to identify potential problems for YaST and to propose solutions to tackle them in the future. If you are interested in the technical details, you can read our conclusions.

Writing Sysctl Changes to /etc/sysctl.d

In order to be able to cope with the /etc and /usr/etc split, YaST needs to stop writing to files like /etc/sysctl.conf and use a specific file under .d directories (like /etc/sysctl.d).

So as part of the aforementioned research, we adapted several modules (yast2-network, yast2-tune, yast2-security and yast2-vpn) to behave this way regarding /etc/sysctl.conf. From now on, YaST-specific settings will be written to /etc/sysctl.d/30-yast.conf instead of /etc/sysctl.conf. Moreover, if YaST finds any of those settings in the general .conf file, it will move them to the new place.
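The migration step can be sketched as splitting the lines of /etc/sysctl.conf into those that stay and those that move to the YaST drop-in. This Python sketch only transforms text; the YAST_KEYS set is a hypothetical example because the post does not list which keys YaST manages. It follows the sysctl.conf convention that lines starting with # or ; are comments.

```python
from typing import List, Tuple

# Hypothetical set of keys YaST manages; the post does not list them.
YAST_KEYS = {"net.ipv4.ip_forward", "net.ipv6.conf.all.forwarding", "kernel.sysrq"}
YAST_DROP_IN = "/etc/sysctl.d/30-yast.conf"  # file name taken from the post


def _join(lines: List[str]) -> str:
    return "".join(line + "\n" for line in lines)


def split_sysctl(conf_text: str) -> Tuple[str, str]:
    """Split sysctl.conf text into (text kept in sysctl.conf, text for the drop-in)."""
    keep: List[str] = []
    move: List[str] = []
    for line in conf_text.splitlines():
        stripped = line.strip()
        is_comment = stripped.startswith(("#", ";"))
        key = stripped.split("=", 1)[0].strip() if "=" in stripped else ""
        if not is_comment and key in YAST_KEYS:
            move.append(stripped)
        else:
            keep.append(line)
    return _join(keep), _join(move)


if __name__ == "__main__":
    original = "# local tuning\nvm.swappiness = 10\nnet.ipv4.ip_forward = 1\n"
    kept, moved = split_sysctl(original)
    print("/etc/sysctl.conf:\n" + kept)
    print(YAST_DROP_IN + ":\n" + moved)
```

Keeping non-YaST lines untouched (including comments) means the administrator's own tuning stays where it was.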

What’s next?

Sprint 87 is already running. Apart from fixing some bugs that were introduced during the network refactoring, we plan to work on other storage-related stuff like resizing support for LUKS2 or some small snapper problems. We will give you more details in our next sprint report.

Stay tuned!

Advanced Encryption Options Land in the YaST Partitioner

Introduction

Welcome to a new sneak peek on the YaST improvements you will enjoy in SLE-15-SP2 and openSUSE Leap 15.2… or much earlier if you, as most YaST developers, are a happy user of openSUSE Tumbleweed.

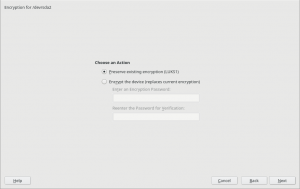

In our report of the 84th sprint we mentioned some changes regarding the encryption capabilities of the YaST Partitioner, like displaying the concrete encryption technology or the possibility to keep an existing encryption layer.

And the report of sprint 85 contained a promise about a separate blog post detailing the new possibilities we have been adding when it comes to creating encrypted devices.

So here we go! But let’s start with a small disclaimer. Although some of the new options are available for all (open)SUSE users, it’s fair to say that this time the main beneficiaries are the users of s390 systems, which may enjoy up to four new ways of encrypting their devices.

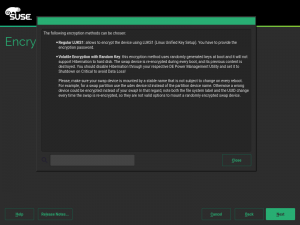

Good Things don’t Need to Change

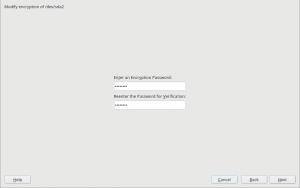

As you may know, so far the YaST Partitioner offered an “Encrypt Device” checkbox when creating or editing a block device. If that box is checked, the Partitioner asks for an encryption password and creates a LUKS virtual device on top of the device being encrypted.

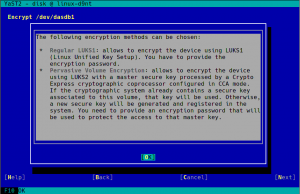

LUKS (Linux Unified Key Setup) is the standard for Linux hard disk encryption. By providing a standard on-disk format, it facilitates compatibility among distributions. LUKS stores all necessary setup information in the partition header, making it possible to transport or migrate data seamlessly. So far, there are two format specifications for such a header: LUKS1 and LUKS2. YaST uses LUKS1 because it is established, solid and well known, fully compatible with the (open)SUSE installation process, and perfectly supported by all the system tools and by most bootloaders, like Grub2.
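The format version is visible right at the start of that header: both LUKS1 and LUKS2 begin with the 6-byte magic "LUKS" followed by the bytes 0xBA 0xBE, and then a big-endian 16-bit version field. The Python sketch below decodes it from the first bytes of a device or image; on a real system cryptsetup luksDump reports the same information, and reading a block device usually requires root.

```python
import struct
from typing import Optional

# Both LUKS1 and LUKS2 headers start with this 6-byte magic.
LUKS_MAGIC = b"LUKS\xba\xbe"


def luks_version(header: bytes) -> Optional[int]:
    """Return the LUKS header version (1 or 2), or None if not a LUKS header."""
    if len(header) < 8 or header[:6] != LUKS_MAGIC:
        return None
    # Bytes 6-7 hold the version as a big-endian unsigned 16-bit integer.
    (version,) = struct.unpack(">H", header[6:8])
    return version


if __name__ == "__main__":
    print(luks_version(LUKS_MAGIC + b"\x00\x01"))  # a synthetic LUKS1 header start
    print(luks_version(b"\x00" * 8))               # not LUKS
```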

You should not fix what is not broken. Thus, in most cases, the screen for encrypting a device has not changed at all and it still works exactly in the same way under the hood.

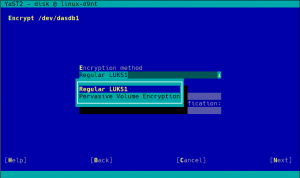

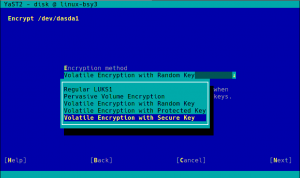

But using an alternative approach may be useful for some use cases, and we wanted to offer an option in the Partitioner for those who look for something else. So in some special cases that screen will include a new selector to choose the encryption method. Let’s analyze all those new methods.

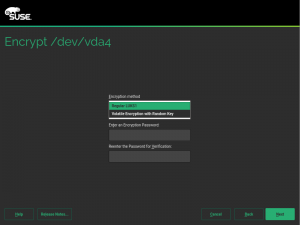

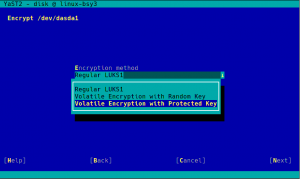

Volatile Swap Encryption with a Random Key

When a swap device has been marked to be encrypted, the user will be able to choose between “Regular LUKS1” and “Volatile Encryption with Random Key”. Both options will be there for swap devices on all hardware architectures. The first option simply uses the classical approach described above.

The second one configures the system so that the swap device is re-encrypted on every boot with a new randomly generated password.

Some advanced users may point out that configuring such a random encryption for swap was already possible in versions of openSUSE prior to Leap 15.0. But the procedure to do so was obscure, to say the least. The encryption with a random password was achieved by simply leaving the “Enter a Password” field blank in the encryption step. The exact implications were not explained anywhere in the interface and the help text didn’t mention all the risks.

Now the same configuration can be achieved through a more explicit interface: relevant information is provided, as you can see in the screenshot below, and some extra internal controls are in place to try to limit the potential harm.

With this approach, the key used to encrypt the content of the swap is generated on every boot using /dev/urandom, which is extremely secure.
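The post does not show what YaST writes for this option. For reference, the generic mechanism for a swap re-keyed from /dev/urandom on every boot is an /etc/crypttab entry (fields: mapped name, source device, key file, options) whose key file is /dev/urandom and whose options include swap. The Python sketch below only formats such a line; the mapped name, device path, cipher and key size are illustrative assumptions. Because the key is thrown away at every boot, a wrong device path destroys data, so a stable /dev/disk/by-* path is the safer choice.

```python
def random_swap_crypttab_line(name: str, device: str,
                              cipher: str = "aes-xts-plain64",
                              key_bits: int = 512) -> str:
    """Build an /etc/crypttab line for swap keyed from /dev/urandom at boot.

    Fields: mapped name, source device, key file, options. The "swap"
    option makes the opened device get formatted as swap.
    """
    options = f"swap,cipher={cipher},size={key_bits}"
    return f"{name} {device} /dev/urandom {options}"


if __name__ == "__main__":
    # Hypothetical names; prefer a stable /dev/disk/by-* path in real setups.
    print(random_swap_crypttab_line("cr_swap", "/dev/disk/by-id/EXAMPLE-part2"))
```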

But you can always go a bit further…

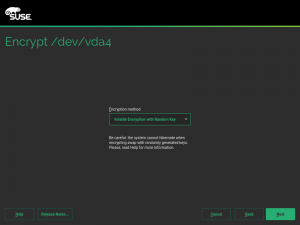

Swap Encryption with Volatile Protected AES Keys

One of the nice things about having a mainframe computer (and believe us there are MANY nice things) is the extra security measures implemented at all levels. In the case of IBM Z or IBM LinuxONE that translates into the so-called pervasive encryption. Pervasive encryption is an infrastructure for end-to-end data protection. It includes data encryption with protected and secure keys.

In s390 systems offering that technology, the swap can be encrypted on every boot using a volatile protected AES key, which offers an extra level of security compared to regular encryption using data from /dev/urandom. This document explains how to set up such a system by hand. But now you can just use YaST and configure everything with a single click, as shown in the following screenshot.

The good thing about this method is that you can use it even if your s390 system does not have a CCA cryptographic coprocessor. Oh, wait… you may not know what a cryptographic coprocessor is. Don’t worry, just keep reading.

Pervasive Encryption for Data Volumes

Have you ever wondered how James Bond would protect his information from the most twisted and resourceful villains? We don’t know for sure (or, at least, we are not supposed to disclose that information), but we would bet he has an s390 system with at least one Crypto Express cryptographic coprocessor configured in CCA mode (referred to as a CCA coprocessor for short).

Those dedicated pieces of hardware, when properly combined with a CPU with CPACF support, make sure the information at rest in any storage device can only be read on the very same system where that information was encrypted. They even have a physical mechanism to destroy all the keys when the hardware is removed from the machine, like the self-destruction mechanisms in the spy movies!

As documented here, the process to enjoy the full power of pervasive encryption for data volumes in Linux can be slightly complex… unless you have the YaST Partitioner at hand!

As you can see in the screenshot above, the process with YaST is as simple as choosing “Pervasive Volume Encryption” instead of the classic LUKS1 that YaST uses regularly for non-swap volumes. If YaST finds in the system a secure AES key already associated to the volume being encrypted, it will use that key and the resulting encryption device will have the DeviceMapper name specified for that key. If such secure keys don’t exist, YaST will automatically register a new one for each volume.
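The reuse-or-register rule described above can be modeled in a few lines. In this Python sketch the repository is just a dictionary and registration is faked; SecureKey, secure_key_for and the generated names are hypothetical. On real s390 systems secure keys are managed with the zkey utility from s390-tools, which this sketch does not call.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class SecureKey:
    key_name: str   # hypothetical identifier of the registered secure key
    dm_name: str    # DeviceMapper name associated with the key


def secure_key_for(volume: str, repository: Dict[str, SecureKey]) -> SecureKey:
    """Reuse the key registered for `volume`, or register a new one."""
    existing = repository.get(volume)
    if existing is not None:
        # Reusing the key also reuses its DeviceMapper name for the new device.
        return existing
    # No key yet: record a new one (a stand-in for real key generation).
    base = volume.rsplit("/", 1)[-1]
    key = SecureKey(key_name=f"secure_{base}", dm_name=f"cr_{base}")
    repository[volume] = key
    return key


if __name__ == "__main__":
    repo = {"/dev/dasdb1": SecureKey("data_key", "cr_data")}
    print(secure_key_for("/dev/dasdb1", repo).dm_name)  # reused name
    print(secure_key_for("/dev/dasdc1", repo).dm_name)  # newly registered
```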

Pervasive encryption can be used on any volume of the system, even the root partition.

I want it all!

So far we have seen you can use protected AES keys for randomly encrypting swap and registered secure keys for protecting data volumes. But what if you want your swap to be randomly encrypted with a volatile secure AES key? After all, you have already invested time and money installing those great CCA coprocessors, let’s use them also for the random swap encryption!

If your hardware supports it, when encrypting the swap you will see a “Volatile Encryption with Secure Key” option, in addition to the other four possibilities mentioned above. As easy as it gets!
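As a rough summary of which choices appear when, here is a simplified Python model of our reading of this post; it is not YaST's logic. The flag names are hypothetical, the protected-key label is paraphrased from the section heading (the post does not quote it), and the other labels are the ones quoted in the post.

```python
from typing import List


def encryption_methods(swap: bool, protected_keys: bool = False,
                       cca_coprocessor: bool = False) -> List[str]:
    """List the encryption choices offered for a device (simplified model)."""
    methods = ["Regular LUKS1"]
    if swap:
        methods.append("Volatile Encryption with Random Key")  # all architectures
        if protected_keys:
            # s390 with pervasive encryption; no CCA coprocessor required.
            methods.append("Volatile Encryption with Protected AES Key")
        if cca_coprocessor:
            methods.append("Volatile Encryption with Secure Key")
    elif cca_coprocessor:
        methods.append("Pervasive Volume Encryption")
    return methods


if __name__ == "__main__":
    print(encryption_methods(swap=True))
    print(encryption_methods(swap=False, cca_coprocessor=True))
```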

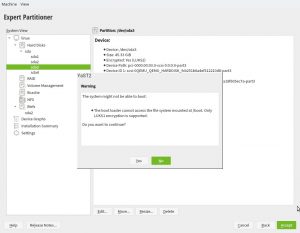

More Booting Checks in non-s390 Systems

As described in the help for pervasive volume encryption shown above, that encryption method uses LUKS2 under the hood. So we took the opportunity to improve the Partitioner checks about encryption and booting. Now, in any architecture that is not s390, the following warning will be displayed if the Expert Partitioner is used to place the root directory in a LUKS2 device without a separate plain /boot.

As mentioned, that doesn’t apply to s390 mainframes. The usage of zipl makes it possible to boot Linux on those systems as long as the kernel supports the encryption technology, regardless of the capabilities (or lack thereof) of Grub2.
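The check can be expressed as a small predicate. This Python sketch is our own illustration, not YaST code; the parameter names and the "luks2" string are assumptions.

```python
def needs_luks2_boot_warning(arch: str, root_encryption: str,
                             separate_plain_boot: bool) -> bool:
    """Return True when placing / this way should trigger the boot warning."""
    if arch.startswith("s390"):
        # zipl can boot as long as the kernel supports the encryption,
        # so the Grub2 limitation does not apply on s390 mainframes.
        return False
    # Elsewhere, Grub2 may be unable to read a LUKS2 device to find the kernel.
    return root_encryption == "luks2" and not separate_plain_boot


if __name__ == "__main__":
    print(needs_luks2_boot_warning("x86_64", "luks2", separate_plain_boot=False))
    print(needs_luks2_boot_warning("s390x", "luks2", separate_plain_boot=False))
```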

What’s next?

We are still working to smooth off the rough edges of the new encryption methods offered by YaST and to add AutoYaST support for them. You may have noticed that most of the improvements currently implemented will only directly benefit s390 systems… even just a subset of those. But at this stage, we have already built the foundation for a new era of encryption support in YaSTland.

We are thinking about adding more encryption methods that could be useful for all (open)SUSE users, with general support for LUKS2 being an obvious candidate. But that’s not something we will see in the short term, because there are many details to iron out first in those technologies to make them fit nicely into the current installation process.

But hey, meanwhile you can play with all the other new toys!

isolcpus is deprecated, kinda

A problem that a lot of sysadmins and developers have is, how do you run a single task on a CPU without it being interrupted? It’s a common scenario for real-time and virtualised workloads where any interruption to your task could cause unacceptable latency.

For example, let’s say you’ve got a virtual machine running with 4 vCPUs, and you want to make sure those vCPU tasks don’t get preempted by other tasks since that would introduce delays into your audio transcoding app.

Running each of those vCPU tasks on its own host CPU seems like the way to go. All you need to do is choose 4 host CPUs and make sure no other tasks run on them.

How do you do that?

I’ve seen many people turn to the kernel’s isolcpus for this. This kernel command-line option allows you to run tasks on CPUs without interruption from a) other tasks and b) kernel threads.

But isolcpus is almost never the thing you want and you should absolutely not use it apart from one specific case that I’ll get to at the end of this article.

So what’s the problem with isolcpus?

1. Tasks are not load balanced on isolated CPUs

When you isolate CPUs with isolcpus you prevent all kernel tasks from running there and, crucially, it prevents the Linux scheduler load balancer from placing tasks on those CPUs too. And the only way to get tasks onto the list of isolated CPUs is with taskset. They are effectively invisible to the scheduler.

Continuing with our example of the audio transcoding app running on 4 vCPUs, let’s say you’ve booted with the following kernel command-line: isolcpus=1-4 and you use taskset to place your four vCPU tasks onto those isolated CPUs like so: taskset -c 1-4 -p <vCPU task pid>

The thing that always catches people out is that it’s easy to end up with all of your vCPU tasks running on the same CPU!

$ ps -aLo comm,psr | grep qemu

qemu-system-x86 1

qemu-system-x86 1

qemu-system-x86 1

qemu-system-x86 1

Why? Well, because isolcpus disables the scheduler load balancer for CPUs 1-4, which means the kernel will not balance those tasks equally among all the CPUs in the affinity mask. You can work around this by manually placing each task onto a single CPU by adjusting its affinity.
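That workaround (one CPU per task, since the balancer will not spread them) can be scripted. This Python sketch computes a round-robin task-to-CPU plan and applies it with os.sched_setaffinity, the system call behind taskset; it is Linux only, and the thread ids in the demo are hypothetical placeholders for the real qemu vCPU thread ids.

```python
import os
from typing import Dict, List


def spread_pids(pids: List[int], cpus: List[int]) -> Dict[int, int]:
    """Assign each pid its own CPU, round-robin over `cpus`."""
    if not cpus:
        raise ValueError("need at least one CPU")
    return {pid: cpus[i % len(cpus)] for i, pid in enumerate(pids)}


def pin(mapping: Dict[int, int]) -> None:
    """Restrict each pid to a single CPU (Linux only), like taskset -pc CPU PID."""
    for pid, cpu in mapping.items():
        os.sched_setaffinity(pid, {cpu})


if __name__ == "__main__":
    # Hypothetical vCPU thread ids; pass real ones to pin() on a live system.
    plan = spread_pids([17063, 17064, 17065, 17066], [1, 2, 3, 4])
    print(plan)
```

Calling pin(plan) with real thread ids has the same effect as running taskset once per task with a single-CPU list.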

2. The list of isolated CPUs is static

A second problem with isolcpus is that the list of CPUs is configured statically at boot time. Once you’ve booted, you’re out of luck if you want to add or remove CPUs from the isolated list. The only way to change it is by rebooting with a different isolcpus value.
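Because the list is fixed at boot, all a script can do at runtime is read it back. The kernel exposes the isolated CPUs in /sys/devices/system/cpu/isolated using its cpulist notation (for example "1-4"). This minimal Python sketch parses that notation; it handles plain numbers and ranges but deliberately ignores the rarer stride syntax that some kernel parameters accept.

```python
from pathlib import Path
from typing import Set


def parse_cpulist(text: str) -> Set[int]:
    """Parse the kernel's cpulist format, e.g. "1-4,6" -> {1, 2, 3, 4, 6}."""
    cpus: Set[int] = set()
    for part in text.strip().split(","):
        if not part:
            continue  # an empty file means no isolated CPUs
        if "-" in part:
            start, end = part.split("-", 1)
            cpus.update(range(int(start), int(end) + 1))
        else:
            cpus.add(int(part))
    return cpus


def isolated_cpus(path: Path = Path("/sys/devices/system/cpu/isolated")) -> Set[int]:
    """Read the CPUs isolated at boot; empty set if the file is missing."""
    try:
        return parse_cpulist(path.read_text())
    except FileNotFoundError:
        return set()


if __name__ == "__main__":
    print(sorted(isolated_cpus()))
```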

cset to the rescue

My recommended way to run tasks on CPUs without interruption is to isolate them from the rest of the system with the cgroups subsystem via the cset shield command, e.g.

$ cset shield --cpu 1-4 --kthread=on

cset: --> shielding modified with:

cset: kthread shield activated, moving 34 tasks into system cpuset...

[==================================================]%

cset: **> 34 tasks are not movable, impossible to move

cset: "system" cpuset of CPUSPEC(0,3) with 1694 tasks running

cset: "user" cpuset of CPUSPEC(1-2) with 0 tasks running

$ cset shield --shield --pid <vCPU task pid 1>,<vCPU task pid 2>,<vCPU task pid 3>,<vCPU task pid 4>

cset: --> shielding following pidspec: 17063,17064,17065,17066

cset: done

With cset you can update and modify the list of CPUs included in the cgroup dynamically at runtime. It is a much more flexible solution for most users.
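cset shield splits the machine into a "user" cpuset (the shield) and a "system" cpuset for everything else, as its output shows. The arithmetic behind that split is a set complement. This Python sketch, our own helper and not part of cset, computes both CPU lists and prints them in the compact cpulist notation used by cset and the kernel.

```python
from typing import Iterable, List, Tuple


def format_cpulist(cpus: Iterable[int]) -> str:
    """Format CPUs as a compact cpulist string, e.g. {0, 1, 2, 5} -> "0-2,5"."""
    ordered = sorted(set(cpus))
    ranges: List[str] = []
    i = 0
    while i < len(ordered):
        start = ordered[i]
        # Extend the run while the next CPU is consecutive.
        while i + 1 < len(ordered) and ordered[i + 1] == ordered[i] + 1:
            i += 1
        end = ordered[i]
        ranges.append(str(start) if start == end else f"{start}-{end}")
        i += 1
    return ",".join(ranges)


def shield_partition(all_cpus: Iterable[int], shielded: Iterable[int]) -> Tuple[str, str]:
    """Return (system, user) cpulists: everything else, and the shield itself."""
    shielded_set = set(shielded)
    system = set(all_cpus) - shielded_set
    return format_cpulist(system), format_cpulist(shielded_set)


if __name__ == "__main__":
    print(shield_partition(range(8), [1, 2, 3, 4]))
```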

Sometimes you really do want isolcpus

OK, I admit there are times when you really do want to use isolcpus. For those scenarios when you really cannot afford to have your tasks interrupted, not even by the scheduler tick which fires once a second, you should turn to isolcpus and manually spread tasks over the CPU list with taskset.

But for most uses, cset shield is by far the best option that’s least likely to catch you by surprise.

Course on working in the Linux command line, not only for MetaCentrum 2020

Don’t be afraid of the command line! It is a friendly and powerful tool that allows you to process large data and automate tasks. The command line is practically identical in Apple OS X, BSD and other UNIX-based systems, not only in Linux. The course is designed for total beginners as well as intermediate students. The only requirement is an interest in (or need for) working in the command line, typically on a Linux computing server.

The course will be taught in Linux, but most of the points also apply to other UNIX systems such as OS X.

Member Diamond_gr