Let's build Software Libre APM together

Alright, you learned about ActiveSupport::Notifications, InfluxDB, Grafana and influxdb-rails in the two previous posts. Let's dive a bit deeper and look at how we built the dashboards for you, so we can study, change and improve them together.

“Individually we are one drop; but together we are an ocean.” – Ryunosoke Satoro

Welcome to your Ruby on Rails Application Monitoring 101.

- ActiveSupport::Notifications is rad! 💘

- Measure twice, cut once: App Performance Monitoring with influxdb-rails

- Let's build Software Libre APM together (this post)

The Yin and Yang of measurements

Basically there are two types of measurements we do: how often something happened and how long something took. The two are most often complementary, interconnected and interdependent. On the performance dashboard we count, for instance, how many requests your application is serving.

We also look at the time your application spends on doing that.

At some point the number of requests will have an influence on the time spent (oversaturation). If one of your actions is using too many resources, it will have an influence on the number of requests you are able to serve (overutilization). Yin and Yang.

The minions of measurements

Looking at the graphs above you see some helpers at work you should know about.

Time

First and foremost: Time Windows. The requests are counted per minute and we look at measurements in the last hour. Time windows help to lower information density and make your measurements a bit more digestible. Look at the same measurements per second for the last 12 hours: that is 60 times 12, so 720 times the information density. Not so easy to interpret anymore...
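To make the idea of a time window concrete, here is a minimal sketch in plain Ruby. The timestamps and the helper name `requests_per_window` are made up for illustration; this is not how influxdb-rails or Grafana do it internally, just the same idea of bucketing events by time.

```ruby
# Hypothetical sample data: one Unix timestamp (in seconds) per served request.
request_times = [0, 12, 59, 60, 61, 119, 120, 185]

# Count requests per window. Integer division maps every timestamp
# to the start of the window it falls into.
def requests_per_window(timestamps, window_seconds)
  timestamps
    .group_by { |t| t / window_seconds * window_seconds }
    .transform_values(&:size)
end

per_minute = requests_per_window(request_times, 60)
per_second = requests_per_window(request_times, 1)

puts per_minute.inspect
puts "#{per_minute.size} data points per minute vs. #{per_second.size} per second"
```

The wider the window, the fewer data points you have to look at for the same stretch of time.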

Statistics

The same way time windows make it easier for you to understand your data, some descriptive statistics will help you too. For instance, calculating the 99th percentile: the time within which 99% of the requests in the last minute finished. Like we did above. I won't bore you to death with the math behind this; if you're into it, check out Wikipedia or something. Just remember, it makes data digestible for you. Look at the same performance data without applying statistics.
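If you do want a peek at the math, a percentile is not much code. This is a sketch using the simple "nearest rank" definition with made-up durations; the database may well use a different interpolation, so treat the helper `percentile` as an illustration only.

```ruby
# Nearest-rank percentile: the smallest value such that at least
# `percent` percent of all values are less than or equal to it.
def percentile(values, percent)
  return nil if values.empty?

  sorted = values.sort
  rank = (percent * sorted.size / 100.0).ceil
  sorted[[rank, 1].max - 1]
end

# Hypothetical request durations in ms: 99 ordinary requests and one outlier.
durations_ms = (1..99).map(&:to_f) + [2_500.0]

puts "max:  #{durations_ms.max}ms"
puts "mean: #{durations_ms.sum / durations_ms.size}ms"
puts "p99:  #{percentile(durations_ms, 99)}ms"
```

The single outlier dominates the maximum and drags the mean up, while the 99th percentile still tells you what almost all requests experienced.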

Grouping

The third helper that makes data understandable for you is grouping data. Like ActiveJobs per minute grouped by queue. That grouping might make it clearer to you why the number of jobs is so high. Or grouping requests per minute by HTTP status might reveal how much stuff goes wrong.

A different form of grouping is to visualize all measurements connected to a specific event, like a single request. Or all the measurements a specific controller action has fired in the last hour.

Rankings

Another thing we do on the dashboard is ranking (groups of) events by time, slow to fast. So you know where you might want to concentrate your efforts to improve performance.
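Grouping and ranking can be sketched in a few lines of plain Ruby as well. The request data below is invented, and ranking by mean duration is just one possible choice; the dashboards do this with database queries instead.

```ruby
# Hypothetical measurements: one entry per finished request.
requests = [
  { action: "ThingsController#index", status: 200, duration_ms: 5.0 },
  { action: "ThingsController#show",  status: 200, duration_ms: 12.0 },
  { action: "ThingsController#index", status: 500, duration_ms: 7.0 },
  { action: "ReportsController#show", status: 200, duration_ms: 240.0 },
  { action: "ReportsController#show", status: 200, duration_ms: 260.0 }
]

# Grouping: how many requests ended with which HTTP status?
requests_by_status = requests.group_by { |r| r[:status] }.transform_values(&:size)

# Ranking: mean duration per action, slowest first.
slowest_actions = requests
  .group_by { |r| r[:action] }
  .map { |action, rs| [action, rs.sum { |r| r[:duration_ms] } / rs.size] }
  .sort_by { |_action, mean_ms| -mean_ms }

puts requests_by_status.inspect
slowest_actions.each { |action, mean_ms| puts "#{action}: #{mean_ms}ms" }
```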

Apply your knowledge, let's collaborate on Rails APM/AHM

Maybe I inspired some ideas for new features in the 101 above? I'm also sure there are many people out in the Rails community that have way more knowledge and ideas about statistics, measurements and all the tools involved than Chris and me. Let's work together, patches to the collection of dashboards (and the Ruby code) are more than welcome!

Why build, not buy?

But Henne, you say, there is already Sentry, New Relic, Datadog, Skylight and tons of other services that do this. Why build another one? Why reinvent the wheel?

Because Software Libre is a deeply evolutionary process. Software Libre, just like Evolution, experiments all the time. Many experiments find their niche to exist. Some even go global.

Coronavirus: BW CG Illustration by Yuri Samoilov

Like Linux, the largest install base of ALL operating systems on this planet. WordPress powering an unbelievable 30% of the top 10 million websites. MediaWiki running the 5th most popular site globally. But also, more than 98% of all projects on GitHub are not seeing any development beyond the first year they were created. Just like 99% of all species that ever lived on Earth are estimated to be extinct.

We need to experiment and collaborate together. Evolution is what we do baby! 🤓 We can't run, copy, distribute, study, change and improve the software SaaS providers run. We can't scratch our itch, can't break it, learn how it works and make it better together. That's why. Let's do this!

Super Resolution Video Enhancing with AMD GPU

I’ve had a fairly recent AMD Radeon RX 560 graphics card in my Mini-ITX PC for over a year already, and one of my long-term interests has been enhancing old videos. Thanks to Hackweek I could finally look at this, one of the things I’ve been waiting to have time for. In recent years there have been approaches that use e.g. neural networks for super-resolution of photos and videos, producing more actual detail and shapes than would be possible via normal image manipulation. The only feasible way of doing this is by using GPUs, but unfortunately the way those are utilized is a bit of a mess, most of all because the proprietary, single-vendor CUDA is the most used platform.

On the open source side, there is OpenCL in Mesa by default and Vulkan Compute, and there’s AMD’s separate open source ROCm that offers a very big platform for computing but also, among other things, OpenCL 2.x support and a source-level translator from CUDA to portable code (including AMD support) called HIP. I won’t go into HIP, but I’m happy there’s at least the idea of portable code being thrown around. OpenCL standardization has been failing a bit, with the newest 3.0 trying to fix why the industry didn’t adopt 2.x properly and OpenCL 1.2 being the actual (but a bit lacking) baseline. I think the same happened a bit with OpenGL 1.x -> 2.x -> 3.x a long time ago by the way… Regardless of whether the portable code is OpenCL or Vulkan Compute, the open standards are now making good progress forward.

I first looked at ROCm’s SLE15SP2 installation guide - interestingly, it also installed on Tumbleweed when ignoring one dependency problem with openmp-extras. However, on my Tumbleweed machine I do not have a Radeon, so this was just an install test. I was, however, surprised that even the kernel module compiled against TW’s 5.11 kernel - and if it had not, there’s the possibility of using the upstream kernel’s module instead.

Over at the Radeon machine side, I installed ROCm without problems. Regardless of that SDK, many real world applications for video enhancing are still CUDA specific so would at minimum require porting to portable code with HIP. These include projects like DAIN, DeOldify etc. However, there are also projects using OpenCL and Vulkan Compute - I’ll take video2x as an example here that supports multiple backends, some of which support OpenCL (like waifu2x-converter-cpp) or Vulkan (like realsr-ncnn-vulkan). I gave the latter a try on my Radeon as it won the CVPR NTIRE 2020 challenge on super-resolution.

I searched my video collection far enough back in time so that I could find some 720p videos, and selected one which has a crowd and details in the distance. I selected 4x scaling, even though that was probably a time-costly mistake: downscaling the resulting 5120x2880 video probably wouldn’t look any better than 2x scaling to 2560x1440 and e.g. downscaling that to 1920x1080. I set up the realsr-ncnn-vulkan and ffmpeg paths to my compiled versions, gave the 720p video as input and fired away!

And waited. For 3.5 hours :) Noting that I should probably buy an actual high-end AMD GPU, but my PC case is smallish and the market is unbearable due to crypto mining. The end result was fairly good, even if not mind blowing. There are certainly features it handles very well, and it seems to clean up noise a bit as well while improving contrast a bit. It was clearly something that would not be possible by traditional image manipulation alone. Especially the moving video seems like higher resolution in general. Maybe the samples I’ve seen from some other projects out there have been better, but then again my video was a bit noisy with motion blur included, so not as high quality as e.g. a static photo or a video clip tailored to show off an algorithm would be.

For showing the results, I upscaled a frame from the original 1280x720 clip to the 4x 5120x2880 resolution in GIMP, and then cropped a few pieces of both it and the matching frame in the enhanced clip. I then downscaled them to a 1440p equivalent crop. Finally, I also upscaled the original frame to FullHD and downscaled the enhanced one to FullHD as well, to be more in line with what could be an actual desired realistic end result - 720p to 1080p conversion while increasing detail.

The “1440p equivalent” comparison shots standard upscale vs realsr-ncnn-vulkan.

The FullHD scaling comparison - open both as full screen in new window and compare.

Measure twice, cut once: App Performance Monitoring with influxdb-rails

Now that we learned how truly magnificent ActiveSupport::Notifications is in the previous post, let's explore a RubyGem Chris, others and I have built around this: influxdb-rails.

Together with even more awesome Software Libre, it will help you to deep dive into your Ruby on Rails application performance.

- ActiveSupport::Notifications is rad! 💘

- Measure twice, cut once: App Performance Monitoring with influxdb-rails (this post)

- Let's build Software Libre APM together

Application Performance Monitoring (APM)

I'm not sure we have to discuss this at all anymore, but here is why I think I need application performance monitoring for my Ruby on Rails apps: I'm an expert in using the software I hack. Hence I always travel the happy path, cleverly and unconsciously avoid the pitfalls, use it with forethought of the underlying architecture. Because of that, I usually think my app is fast: perceived performance.

It's not what you look at that matters, it's what you see. – Henry David Thoreau

That is why I need someone to correct that bias for me, in black and white.

Free Software APM

The good folks at Rails bring the instrumentation framework. InfluxData deliver a time series database. Grafana Labs make a dashboard builder. All we need to do, as so often, is to plug Software Libre together: SUCCE$$!

InfluxDB + Grafana == 🧨

I assume your Rails development environment is already running on 127.0.0.1:3000. Getting InfluxDB and Grafana is a matter of pulling containers these days. You can use this simple docker-compose configuration for running them locally.

# docker-compose.yml
version: "3.7"
services:
  influx:
    image: influxdb:1.7
    environment:
      - INFLUXDB_DB=rails
      - INFLUXDB_USER=root
      - INFLUXDB_USER_PASSWORD=root
      - INFLUXDB_READ_USER=grafana
      - INFLUXDB_READ_USER_PASSWORD=grafana
    volumes:
      - influxdata:/var/lib/influxdb
    ports:
      - 8086:8086
  grafana:
    image: grafana/grafana:7.4.5
    ports:
      - 4000:3000
    depends_on:
      - influx
    volumes:
      - grafanadata:/var/lib/grafana
volumes:
  influxdata:
  grafanadata:

A courageous docker-compose up will boot things and you can access Grafana at http://127.0.0.1:4000 (user: admin / password: admin).

To read data from the InfluxDB container in Grafana, leave the /datasources InfluxDB defaults alone and configure:

URL: http://influx:8086

Database: rails

User: grafana

Password: grafana

influxdb-rails: 🪢 things together

The influxdb-rails RubyGem is the missing glue code for making your app report metrics into the InfluxDB.

Plug it into your Rails app by adding it to your bundle.

bundle add influxdb-rails

bundle exec rails generate influxdb

The next time you boot your Rails dev-env it will start to measure the performance of your app. Now comes the interesting part, interpreting this data with Grafana.

Understanding Ruby on Rails Performance

Every time you use your dev-env, influxdb-rails will write a plethora of performance data into InfluxDB.

Let's look at one of the measurements so you get an idea of what's going on. You remember the ActiveSupport::Notifications event called process_action.action_controller from the previous post? Rails sends this message every time an action in your controller has finished. It includes performance data for this action.

You should know this from somewhere: development.log! It contains the same information.

Started GET "/things" for 127.0.0.1 at 2021-03-25 15:20:14 +0100

...

Completed 200 OK in 5ms (Views: 4.1ms | ActiveRecord: 0.1ms | Allocations: 3514)

ThingsController#index took 5ms to finish overall, 4.1ms of those 5 in rendering, 0.1ms in querying the database.

You find the same data for every request you make in your InfluxDB. Head over to http://127.0.0.1:4000/explore

and let Grafana plot it for you.

Only want to see how your views are performing? Change the field from controller to view.

Magic 🪄 But this is only one out of many different ways to look at this measurement. All of the panels below use this one measurement and the data it brings to visualize controller actions.

And this is only one measurement, influxdb-rails reports around a dozen.

Now I could send you off your way to learn about

ALL.

THE.

SOFTWARE.

involved, but that would be mean wouldn't it?

Ruby on Rails Dashboards

We, the Free Software community, are in this together! We collaborate on Ruby on Rails. We work together to make InfluxDB better. We cooperate to improve Grafana. Why not do the same for the dashboards to visualize Rails performance data? Let's collaborate! That is why we have built a couple of dashboards you can import. Just copy and paste the URLs into your Grafana.

- Ruby On Rails Performance Overview: Ruby On Rails Performance Overview

- Performance insights into individual requests: Ruby On Rails Performance per Request

- Performance of individual actions: Ruby On Rails Performance per Action

- HTTP Request Health: Ruby On Rails Health Overview

- ActiveJob Insights: Ruby on Rails ActiveJob Overview

- A list of the slowest requests: Ruby on Rails Slowlog by Request

- A list of the slowest actions: Ruby on Rails Slowlog by Action

- A list of the slowest queries: Ruby on Rails Slowlog by SQL

Play a little, I will tell you about all the nitty gritty details in the last post of this series: Let's build Software Libre APM together

Yakuake | Drop-down Terminal Emulator on openSUSE

ActiveSupport::Notifications is Rad!

One of the lesser known parts of Rails core is the ActiveSupport instrumentation framework.

ActiveSupport::Notifications includes all the things you need to implement pub-sub in Rails.

Pub-Sub is a software architecture where you publish (send) a message without being specific about who should receive it. Fire and forget.

Receiving a message, and doing something with it, "just" requires you to subscribe to it. Because the publisher doesn't need to know about the subscribers (as they are decoupled), this provides nice opportunities for organization and scale.
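To show the decoupling in its smallest form, here is a toy message bus in plain Ruby. `TinyBus` and the event names are hypothetical and only illustrate the pattern; this is not how ActiveSupport::Notifications is implemented.

```ruby
# TinyBus is a hypothetical, stripped-down stand-in for a pub-sub bus.
class TinyBus
  def initialize
    # Every message name maps to the list of blocks subscribed to it.
    @subscribers = Hash.new { |hash, name| hash[name] = [] }
  end

  def subscribe(name, &block)
    @subscribers[name] << block
  end

  # The publisher only knows the message name, not who is listening.
  def publish(name, payload = {})
    @subscribers[name].each { |subscriber| subscriber.call(name, payload) }
  end
end

bus = TinyBus.new
received = []

# Two independent subscribers for the same message.
bus.subscribe("request.finished") { |name, payload| received << "log: #{name} #{payload[:status]}" }
bus.subscribe("request.finished") { |_name, payload| received << "metric: #{payload[:duration_ms]}ms" }

bus.publish("request.finished", { status: 200, duration_ms: 5 })
bus.publish("nobody.listens") # fire and forget: no subscriber, no error

puts received
```

Adding a third subscriber does not require touching the publishing side at all, which is the whole point.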

Let's explore the joyful shenanigans of this.

- ActiveSupport::Notifications is rad! (this post)

- Measure twice, cut once: App Performance Monitoring with influxdb-rails

- Let's build Software Libre APM together

Publish & Subscribe

There is an instrumentation message emitted from ActionController that includes interesting data

about the things happening in your controller action. Let's explore this.

If you don't have a Ruby on Rails app at hand, just set up a minimal one with rails new --minimal.

Add this code into an initializer:

# config/initializers/instrumentation_subscriber.rb
# What happens in ActionController?
module ActionController
  class InstrumentationSubscriber < ActiveSupport::Subscriber
    attach_to :action_controller

    def process_action(message)
      Rails.logger.debug "Instrumentation #{message.name} Received!"
    end
  end
end

Boot the app (rails server), visit http://127.0.0.1:3000 and you'll see the new log lines in your development.log. So what? What's the difference to calling Rails.logger in an action or callback inside your controller? Why is ActiveSupport::Notifications fabulous?

ActiveSupport::Notifications Scales

First, as explained in the intro, the main advantage is that the publisher is decoupled from the subscriber. For instance, you can have more than one subscriber listening to process_action.action_controller.

# config/initializers/slowlog_subscriber.rb
module ActionController
  class SlowlogSubscriber < ActiveSupport::Subscriber
    attach_to :action_controller

    def process_action(message)
      return if message.duration <= 10

      controller_location = [message.payload[:controller], message.payload[:action]].join("#") # -> ThingsController#something
      Rails.logger.debug "#{controller_location} was slow (#{message.duration}ms)"
    end
  end
end

You are free to organize this however you want. Decouple publisher/subscriber in different files, chronologically or even in different threads.

# config/initializers/poor_mans_background_job_subscriber.rb
module ActionController
  class PMBGJSubscriber < ActiveSupport::Subscriber
    include Concurrent::Async
    attach_to :action_controller

    def process_action(message)
      async.background_job(message)
    end

    def background_job(message)
      # ...do something expensive with the message in a thread
      sleep(60)
    end
  end
end

ActiveSupport::Notifications Is Everywhere Today

Second, and you probably already guessed this from the example, what makes this most awesome are the ready made messages that are already published today.

Rails for instance uses ActiveSupport::Notifications to publish close to 50(!) different instrumentation events that include data about your application. Data ranging from the HTTP status code of your requests, over which partials were rendered, to more esoteric measurements like the byte range attempted to be read from your ActiveStorage service. Check the instrumentation guide for all the dirty secrets.

ActiveSupport::Notifications Is Easily Extendible

Last but not least, you can not only listen to messages others publish, you can also publish messages of your own.

ActiveSupport::Notifications.instrument "cool_thing_happened.my_app", {some: :data} do

MyApp.do_cool_thing(true)

end

Okay, you're convinced I hope! Now what do people do with ActiveSupport::Notifications out in the world?

Application Health/Performance Monitoring

Ever wondered how your metrics get to Sentry, New Relic, Datadog or Skylight? You guessed it, via ActiveSupport::Notifications.

Now if the main work, publishing tons of messages through a pub-sub architecture, is already done by Rails, surely there are non-SaaS (a.k.a. Software Libre) options to display this data, right? Turns out there are not. While evaluating options for some of my projects (especially Open Build Service) we came across this gap and started to fill it.

How, why and where? Read on in the next part of this series: Measure twice, cut once: App Performance Monitoring with influxdb-rails

Cubicle Chat | 20 Mar 2021

Thinking in Questions with SQL

I love SQL, despite its many flaws.

Much is argued about functional programming vs object oriented. Different ways of instructing computers.

SQL is different. SQL is a language where I can ask the computer a question and it will figure out how to answer it for me.

Fluency in SQL is a very practical skill. It will make your life easier day to day. It’s not perfect, it has many flaws (like null) but it is in widespread use (unlike, say, Prolog or D).

Useful in lots of contexts

As an engineer, SQL databases often save me writing lots of code to transform data. They save me worrying about the best way to manage finite resources like memory. I write the question and the database (usually) figures out the most efficient algorithm to use, given the shape of the data right now, and the resources available to process it. Like magic.

SQL helps me think about data in different ways, lets me focus on the questions I want to ask of the data; independent of the best way to store and structure data.

As a manager, I often want to measure things, to know the answer to questions. SQL lets me ask lots of questions of computers directly without having to bother people. I can explore my ideas with a shorter feedback loop than if I could only pose questions to my team.

SQL is a language for expressing our questions in a way that machines can help answer them; useful in so many contexts.

It would be grand if even more things spoke SQL. Imagine you could ask questions in a shell instead of having to teach it how to transform data.

Why do we avoid it?

SQL is terrific. So why is there so much effort expended in avoiding it? We learn ORM abstractions on top of it. We treat SQL databases as glorified buckets of data: chuck data in, pull data out.

Transforming data in application code gives a comforting amount of control over the process, but is often harder and slower than asking the right question of the database in the first place.

Do you see SQL as a language for storing and retrieving bits of data, or as a language for expressing questions?

Let go of control

The database can often figure out the best way of answering the question better than you.

Let’s take an identical query with three different states of data.

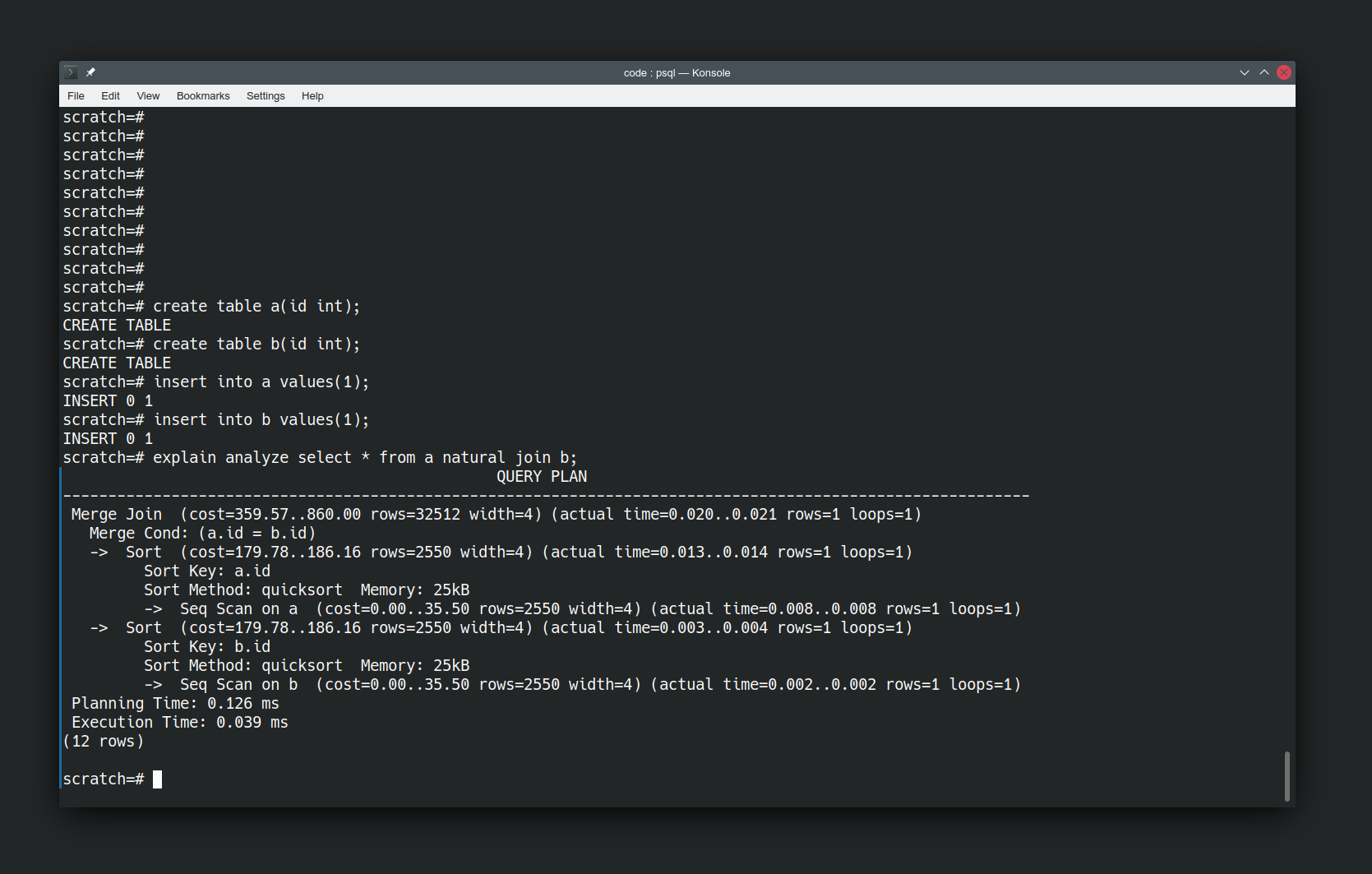

Here are two simple relations, a and b, with one attribute each and a single tuple in each relation.

create table a(id int);

create table b(id int);

insert into a values(1);

insert into b values(1);

explain analyze select * from a natural join b;

“explain analyze” is telling us how postgres is going to answer our question. The operations it will take, and how expensive they are. We haven’t told it to use quicksort, it has elected to do so.

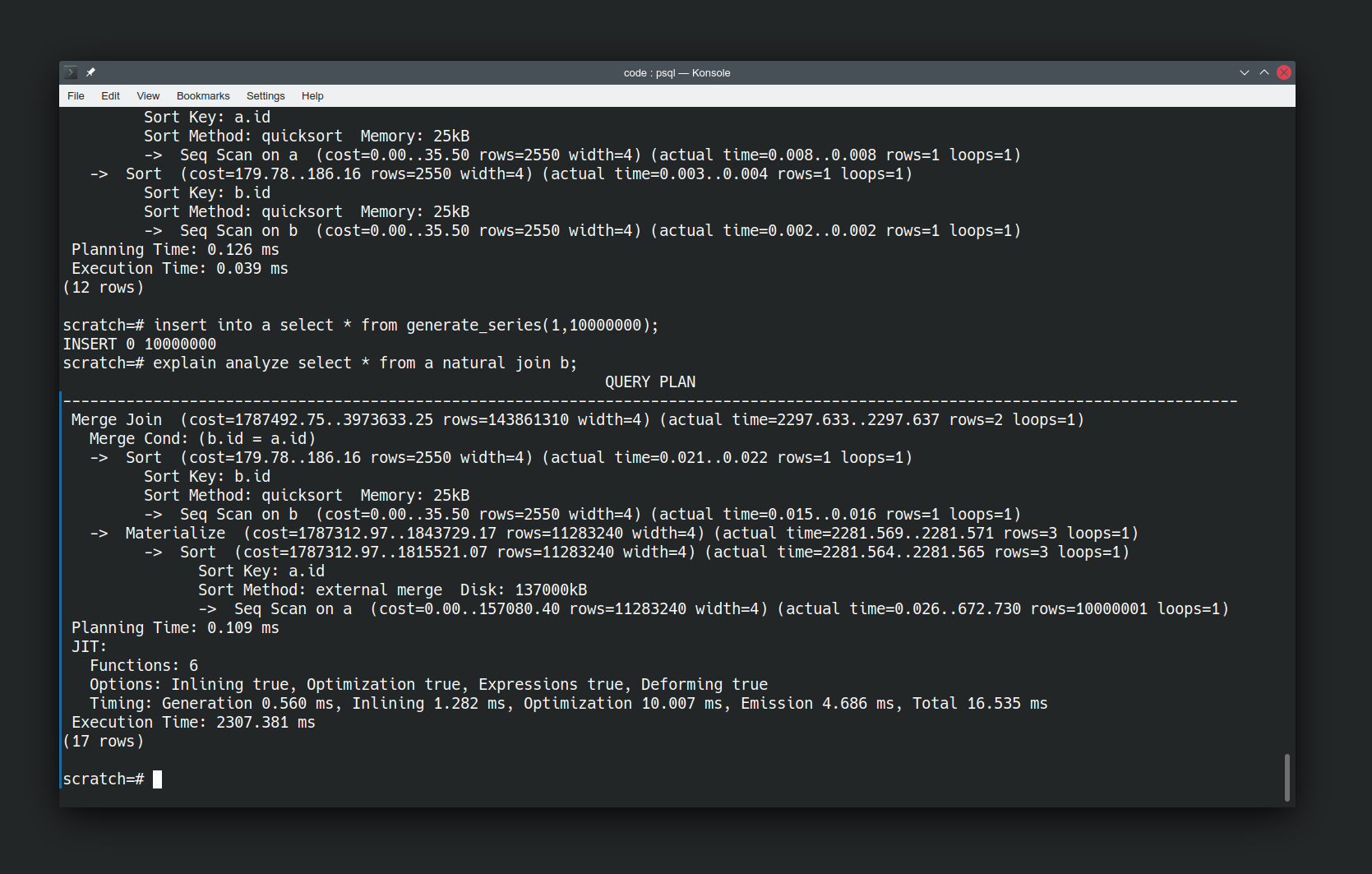

Looking at how the database is doing things is interesting, but let’s make it more interesting by changing the data. Let’s add in a boatload more values and re-run the same query.

insert into a select * from generate_series(1,10000000);

explain analyze select * from a natural join b;

We’ve used generate_series to generate ten million tuples in relation ‘a’. Note the “Sort method” has changed to use disk because the data set is larger compared to the resources the database has available. I haven’t had to tell it to do this. I just asked the same question and it has figured out that it needs to use a different method to answer the question now that the data has changed.

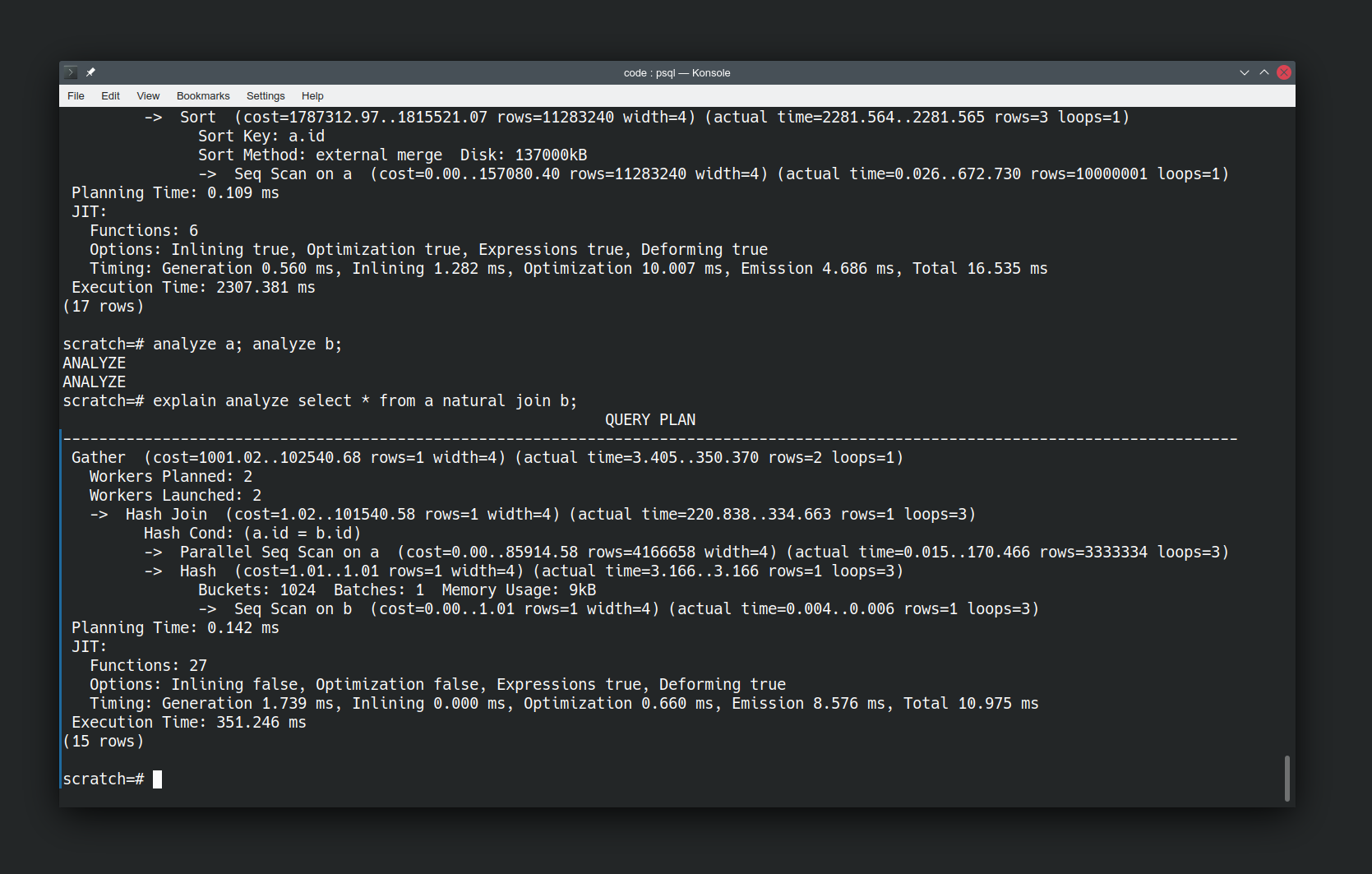

But actually we’ve done the database a disservice here by running the query immediately after inserting our data. It’s not had a chance to catch up yet. Let’s give it a chance by running analyze on our relations to force an update to its knowledge of the shape of our data.

analyze a;

analyze b;

explain analyze select * from a natural join b;

Now re-running the same query is a lot faster, and the approach has significantly changed. It’s now using a Hash Join not a Merge Join. It has also introduced parallelism to the query execution plan. It’s an order of magnitude faster. Again I haven’t had to tell the database to do this, it has figured out an easier way of answering the question now that it knows more about the data.

Asking Questions

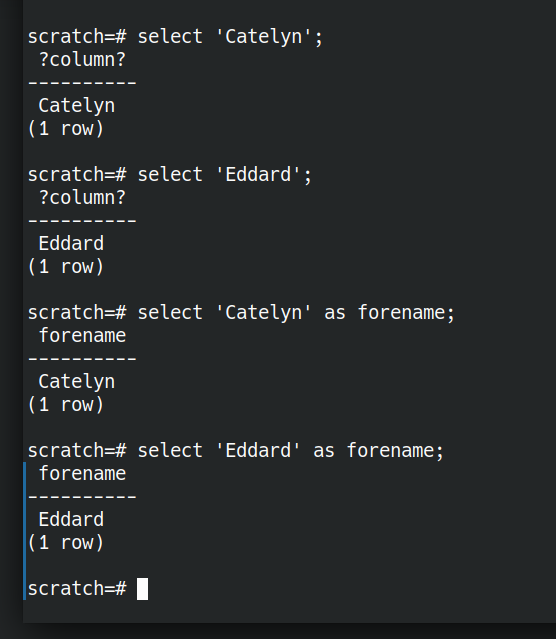

Let’s look at some of the building blocks SQL gives us for expressing questions. The simplest building block we have is asking for literal values.

SELECT 'Eddard';

SELECT 'Catelyn';

A value without a name is not very useful. Let’s rename them.

SELECT 'Eddard' AS forename;

SELECT 'Catelyn' AS forename;

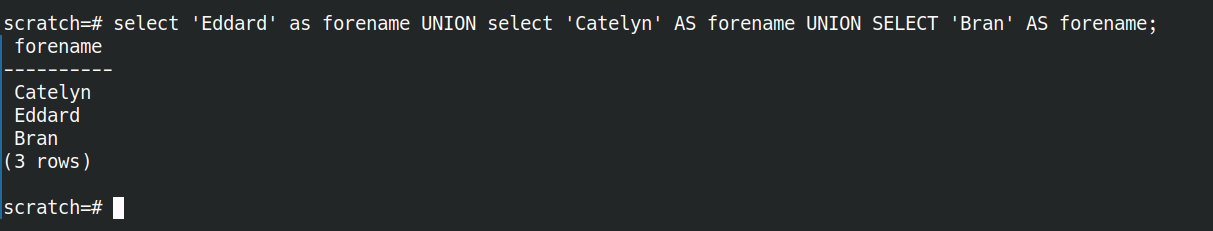

What if we wanted to ask a question of multiple Starks: Eddard OR Catelyn OR Bran? That’s where UNION comes in.

select 'Eddard' as forename

UNION select 'Catelyn' AS forename

UNION SELECT 'Bran' AS forename;

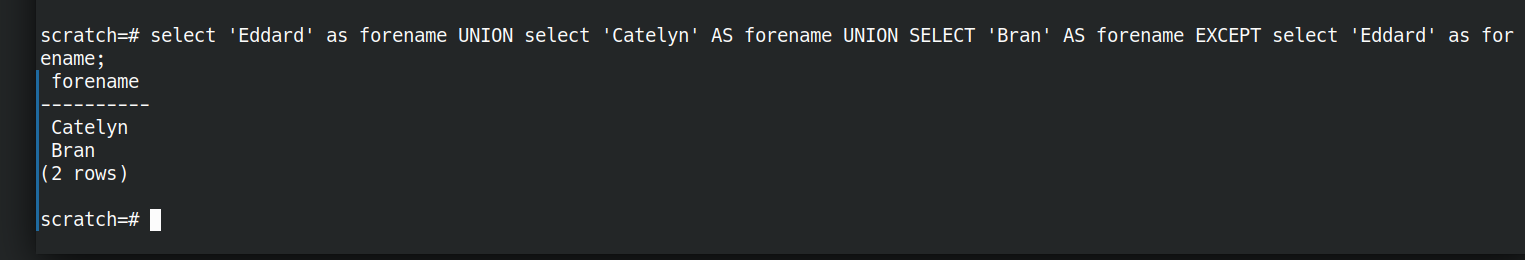

We can also express things like someone leaving the family. With EXCEPT.

select 'Eddard' as forename

UNION select 'Catelyn' AS forename

UNION select 'Bran' AS forename

EXCEPT select 'Eddard' as forename;

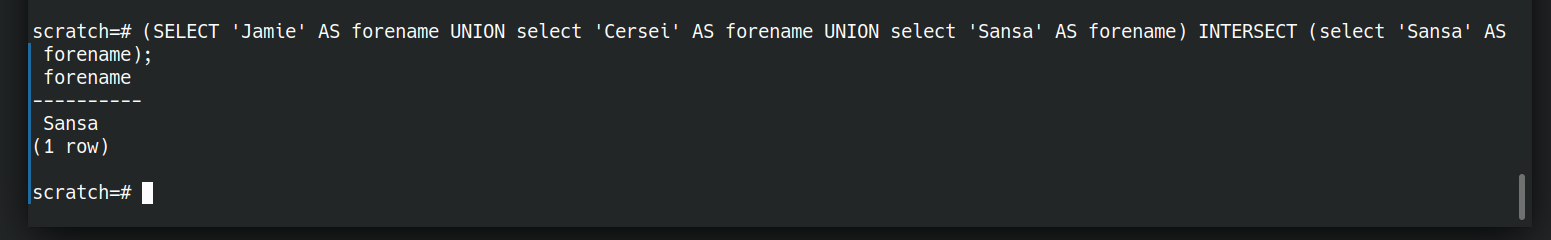

What about people joining the family? How can we see who’s in both families. That’s where INTERSECT comes in.

(

SELECT 'Jamie' AS forename

UNION select 'Cersei' AS forename

UNION select 'Sansa' AS forename

)

INTERSECT

(

select 'Sansa' AS forename

);

It’s getting quite tedious having to type out every value in every query already.

SQL uses the metaphor “table”. We have tables of data. To me that gives connotations of spreadsheets. Postgres uses the term “relation” which I think is more helpful. Each “relation” is a collection of data which have some relation to each other. Data for which a predicate is true.

Let’s store the starks together. They are related to each other.

create table stark as

SELECT 'Sansa' as forename

UNION select 'Eddard' AS forename

UNION select 'Catelyn' AS forename

UNION select 'Bran' AS forename ;

create table lannister as

SELECT 'Jamie' AS forename

UNION select 'Cersei' AS forename

UNION select 'Sansa' AS forename;

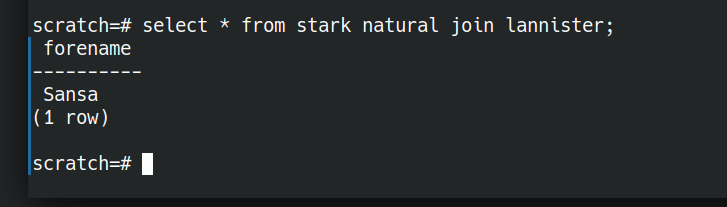

Now we have stored relations of related data that we can ask questions of. We’ve stored the facts where “is a member of house stark” and “is a member of house lannister” are true. What if we want people who are in both houses? A relational AND. That’s where NATURAL JOIN comes in.

NATURAL JOIN is not quite the same as the set-based AND (INTERSECT above). NATURAL JOIN will work even if there are different arity tuples in the two relations we are comparing.

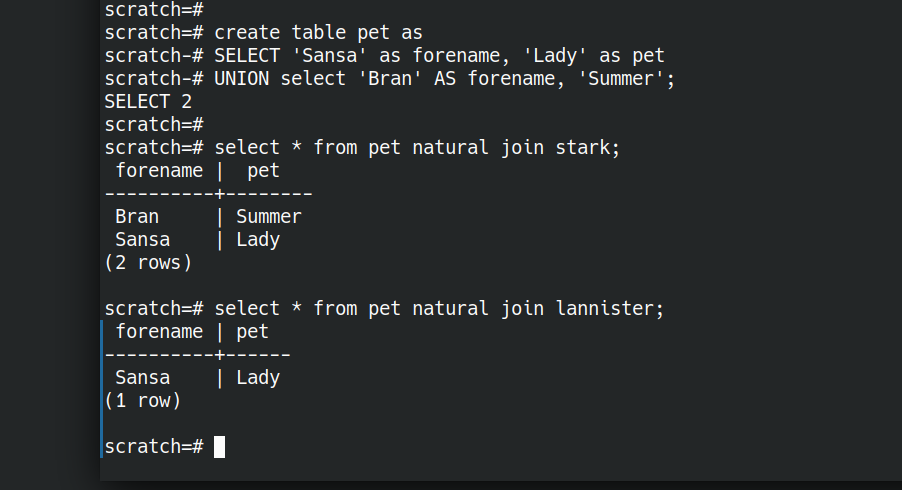

Let’s illustrate this by creating a relation pet with two attributes.

create table pet as

SELECT 'Sansa' as forename, 'Lady' as pet

UNION select 'Bran' AS forename, 'Summer' as pet;

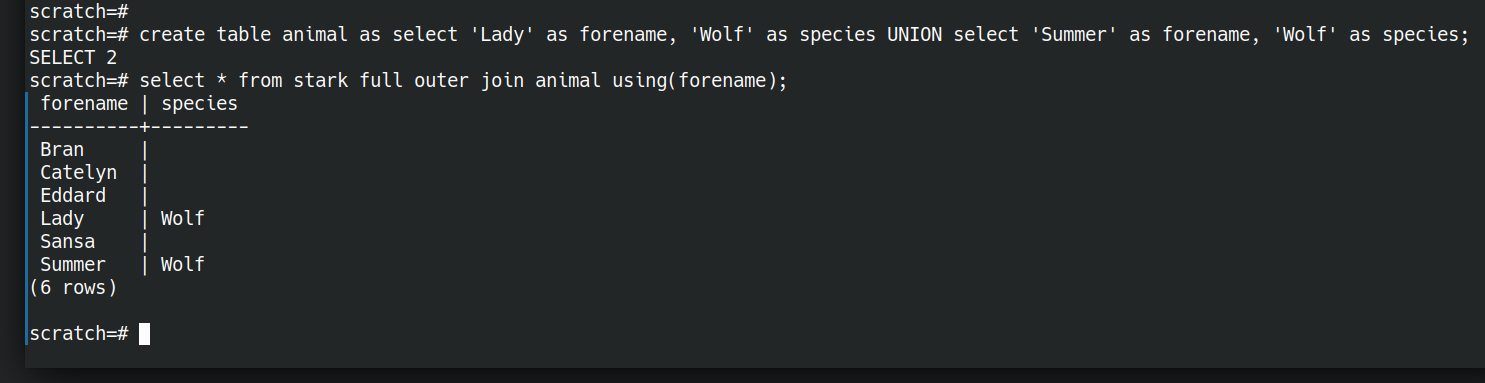

Now we have an AND, what about OR? We have a set-or above (UNION). I think the closest thing to a relational OR is a full outer join.

create table animal as select 'Lady' as forename, 'Wolf' as species UNION select 'Summer' as forename, 'Wolf' as species;

select * from stark full outer join animal using(forename);

Ok so we can ask simple questions with ands and ors. There are also equivalents of most of the relational algebra operations.

What if I want to invade King’s Landing?

What about more interesting questions? We can do those too. Let’s jump ahead a bit.

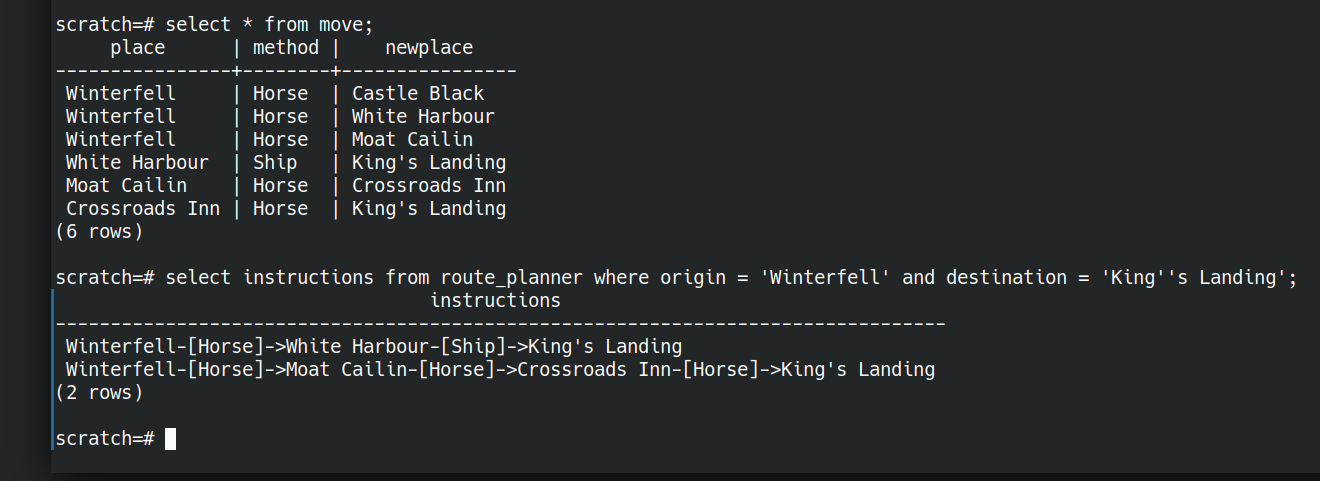

What if we want to plan an attack on King’s Landing and need to consider the routes we could take to get there? Starting from just some facts about the travel options between locations, let’s ask the database to figure out routes for us.

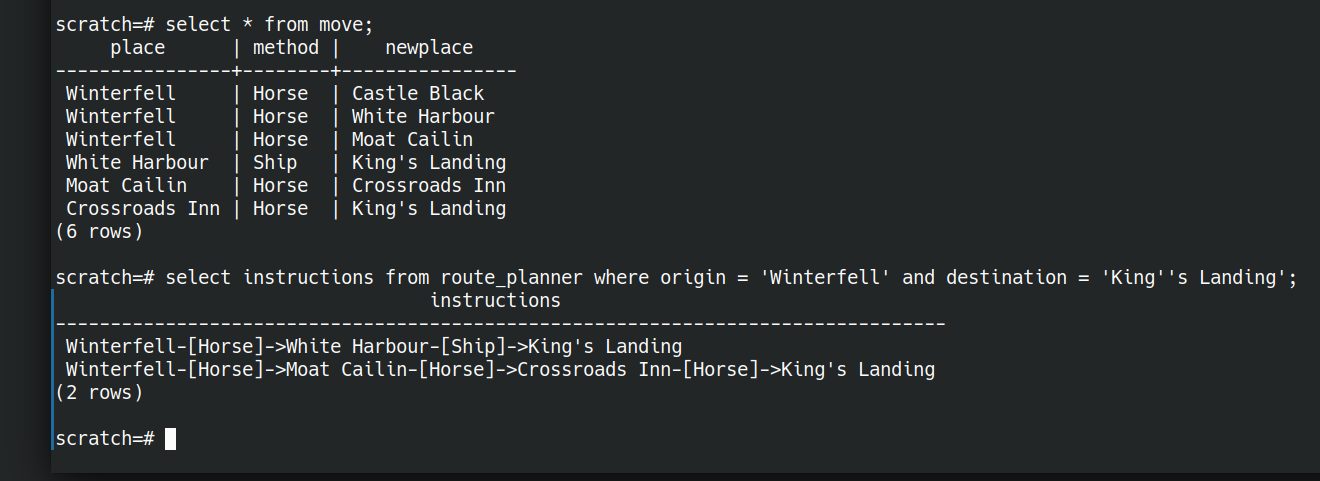

First the data.

create table move (place text, method text, newplace text);

insert into move(place,method,newplace) values

('Winterfell','Horse','Castle Black'),

('Winterfell','Horse','White Harbour'),

('Winterfell','Horse','Moat Cailin'),

('White Harbour','Ship','King''s Landing'),

('Moat Cailin','Horse','Crossroads Inn'),

('Crossroads Inn','Horse','King''s Landing');

Now let’s figure out a query that will let us plan routes between origin and destination.

We don’t need to store any intermediate data, we can ask the question all in one go. Here “route_planner” is a view (a saved question).

create view route_planner as
with recursive route(place, newplace, method, length, path) as (
    select place, newplace, method, 1 as length, place as path from move -- starting point
  union -- or
    select -- next step on journey
      route.place,
      move.newplace,
      move.method,
      route.length + 1, -- extra step on the found route
      path || '-[' || route.method || ']->' || move.place as path -- describe the route
    from move
    join route ON route.newplace = move.place -- restrict to only reachable destinations from existing route
)
SELECT
  place as origin,
  newplace as destination,
  length,
  path || '-[' || method || ']->' || newplace as instructions
FROM route;

I know this is a bit “rest of the owl” compared to what we were doing above. I hope it at least illustrates the extent of what is possible. (It’s based on the prolog tutorial). We have started from some facts about adjacent places and asked the database to figure out routes for us.

Let’s talk it through…

create view route_planner as

This saves the relation that’s the result of the given query with a name. We did this above with

create table lannister as

SELECT 'Jamie' AS forename

UNION select 'Cersei' AS forename

UNION select 'Sansa' AS forename;

While create table will store a static dataset, a view will re-execute the query each time we interrogate it. It’s always fresh even if the underlying facts change.

with recursive route(place, newplace, method, length, path) as (...);

This creates a named portion of the query, called a “common table expression“. You could think of it like an extract-method refactoring. We’re giving part of the query a name to make it easier to understand. This also allows us to make it recursive, so we can build answers on top of partial answers, in order to build up our route.

select place, newplace, method, 1 as length, place as path from move

This gives us all the possible starting points on our journeys. Every place we know we can make a move from.

We can think of two steps of a journey as the first step OR the second step. So we represent this OR with a UNION.

join route ON route.newplace = move.place

Once we’ve found our first and second steps, the third step is just the same—treating the second step as the starting point. “route” here is the partial journey so far, and we look for feasible connected steps.

path || '-[' || route.method || ']->' || move.place as path;

Here we concatenate the instructions so far through the journey. Take the path travelled so far, and append the next mode of transport and next destination.

Finally we select the completed journey from our complete route

SELECT

place as origin,

newplace as destination,

length,

path || '-[' || method || ']->' || newplace as instructions

FROM route;

Then we can ask the question

select instructions from route_planner

where origin = 'Winterfell'

and destination = 'King''s Landing';

and get the answer

instructions
-------------------------------------------------------------------------------
 Winterfell-[Horse]->White Harbour-[Ship]->King's Landing
 Winterfell-[Horse]->Moat Cailin-[Horse]->Crossroads Inn-[Horse]->King's Landing
(2 rows)
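If the recursion still feels like magic, it may help to see the same steps spelled out by hand. The following is a rough sketch in Ruby of what the recursive query asks for, not of how the database executes it; the names `Route`, `starting_routes`, `extend_routes`, `all_routes` and `instructions` are made up for this illustration, and the struct field `via` stands in for the `method` column. Like the query, it assumes the moves contain no cycles.

```ruby
# The facts from the move relation: [place, method, newplace].
MOVES = [
  ["Winterfell", "Horse", "Castle Black"],
  ["Winterfell", "Horse", "White Harbour"],
  ["Winterfell", "Horse", "Moat Cailin"],
  ["White Harbour", "Ship", "King's Landing"],
  ["Moat Cailin", "Horse", "Crossroads Inn"],
  ["Crossroads Inn", "Horse", "King's Landing"]
].freeze

Route = Struct.new(:place, :newplace, :via, :length, :path)

# Non-recursive term: every single move is a route of length 1.
def starting_routes
  MOVES.map { |place, via, newplace| Route.new(place, newplace, via, 1, place) }
end

# Recursive term: extend each route by every move that starts where it ends.
def extend_routes(routes)
  routes.flat_map do |route|
    MOVES.select { |place, _via, _newplace| place == route.newplace }
         .map do |place, via, newplace|
           Route.new(route.place, newplace, via, route.length + 1,
                     "#{route.path}-[#{route.via}]->#{place}")
         end
  end
end

# Keep extending the newest routes until no new ones appear.
def all_routes
  found = []
  frontier = starting_routes
  until frontier.empty?
    found.concat(frontier)
    frontier = extend_routes(frontier) - found
  end
  found
end

# The final SELECT plus the WHERE clause of our question.
def instructions(origin, destination)
  all_routes
    .select { |r| r.place == origin && r.newplace == destination }
    .map { |r| "#{r.path}-[#{r.via}]->#{r.newplace}" }
end

puts instructions("Winterfell", "King's Landing")
```

That is a fair amount of "how" for something the view expresses as a question, and the database is free to pick a better strategy than this loop.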

Thinking in Questions

Learning SQL well can be a worthwhile investment of time. It’s a language in widespread use, across many underlying technologies.

Get the most out of it by shifting your thinking from “how can I get at my data so I can answer questions” to “How can I express my question in this language?”.

Let the database figure out how to best answer the question. It knows most about the data available and the resources at hand.

The post Thinking in Questions with SQL appeared first on Benji's Blog.

User Friendly Printer Management | openSUSE YaST

openSUSE Tumbleweed – Review of the week 2021/11

Dear Tumbleweed users and hackers,

The biggest trouble of the week was the mirror infrastructure having a hard time catching up to the full rebuild. Tumbleweed itself was, as usual, solid and has been steadily rolling. In total, there were 4 snapshots (0312, 0315, 0316, and 0317) released last week.

The main changes in those snapshots included:

- Mozilla Thunderbird 78.8.1

- Mozilla Firefox 86.0.1

- KDE Frameworks 5.80.0

- Bison 3.7.6

- grub2: boothole v2 fixes: the first iteration was blocked, as dual boot was broken. New signing certs and revocation of old certs will follow.

- PipeWire 0.3.23

- Linux kernel 5.11.6

- SQLite 3.35.0

- Systemd 246.11

The staging projects are largely unchanged, the main topics there are still:

- KDE Plasma 5.21.3

- Perl 5.32.1

- SELinux 3.2

- Python 3.9 modules: besides python36-FOO and python38-FOO, we are testing to also ship python39-FOO modules; we already have the interpreter after all. Python 3.8 will remain the default for now.

- UsrMerge is gaining some traction again, thanks to Ludwig for pushing for it

- GCC 11 as the default compiler
