Looking for exceptions with awk

Sometimes you just need to search a log file for an exception using awk or plain bash. It’s frustrating to go to Google, Stack Overflow, DuckDuckGo, or any other place, search, and find nothing, or at least no solution that fits your needs.

In my case, I wanted to find out where a package was generating a conflict for a friend, and I ended up scratching my head. I didn’t want to write yet another domain-specific function for the openQA test framework, and since I’m very stubborn, I ended up with the following solution:

journalctl -k | awk 'BEGIN { print "Error - ", NR; group = 0 }
/ACPI BIOS Error \(bug\)/, /ACPI Error: AE_NOT_FOUND/ {
    print group "|", $0
    if ($0 ~ /ACPI Error: AE_NOT_FOUND,/) { print "EOL"; group++ }
}'

This is short for:

- Define $START_PATTERN as /ACPI BIOS Error \(bug\)/ and $END_PATTERN as /ACPI Error: AE_NOT_FOUND/

- Look for $START_PATTERN

- Look for $END_PATTERN, printing every line in between prefixed with the current group number

- If you find $END_PATTERN, add an EOL marker (not strictly needed, since the group variable is incremented anyway)

And there you go: that is how to search for exceptions in logs. Of course it could get more complicated, because you can have nested cases and whatnot, but for now this does exactly what I need:

EOL
10| May 20 12:38:36 deimos kernel: ACPI BIOS Error (bug): Could not resolve symbol [\_SB.PCI0.LPCB.HEC.CHRG], AE_NOT_FOUND (20200110/psargs-330)
10| May 20 12:38:36 deimos kernel: ACPI Error: Aborting method \PNOT due to previous error (AE_NOT_FOUND) (20200110/psparse-529)
10| May 20 12:38:36 deimos kernel: ACPI Error: Aborting method \_SB.AC._PSR due to previous error (AE_NOT_FOUND) (20200110/psparse-529)
10| May 20 12:38:36 deimos kernel: ACPI Error: AE_NOT_FOUND, Error reading AC Adapter state (20200110/ac-115)
EOL
11| May 20 12:39:12 deimos kernel: ACPI BIOS Error (bug): Could not resolve symbol [\_SB.PCI0.LPCB.HEC.CHRG], AE_NOT_FOUND (20200110/psargs-330)
11| May 20 12:39:12 deimos kernel: ACPI Error: Aborting method \PNOT due to previous error (AE_NOT_FOUND) (20200110/psparse-529)
11| May 20 12:39:12 deimos kernel: ACPI Error: Aborting method \_SB.AC._PSR due to previous error (AE_NOT_FOUND) (20200110/psparse-529)
11| May 20 12:39:12 deimos kernel: ACPI Error: AE_NOT_FOUND, Error reading AC Adapter state (20200110/ac-115)
EOL
12| May 20 13:37:41 deimos kernel: ACPI BIOS Error (bug): Could not resolve symbol [\_SB.PCI0.LPCB.HEC.CHRG], AE_NOT_FOUND (20200110/psargs-330)
12| May 20 13:37:41 deimos kernel: ACPI Error: Aborting method \PNOT due to previous error (AE_NOT_FOUND) (20200110/psparse-529)
12| May 20 13:37:41 deimos kernel: ACPI Error: Aborting method \_SB.AC._PSR due to previous error (AE_NOT_FOUND) (20200110/psparse-529)
12| May 20 13:37:41 deimos kernel: ACPI Error: AE_NOT_FOUND, Error reading AC Adapter state (20200110/ac-115)
EOL
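You can reuse the same range logic for other kinds of exceptions. To do that, wrap it in a small shell function that takes the start and end patterns as parameters instead of hard-coding them. This is a minimal sketch, not the original script. The name `group_blocks` is hypothetical, and the patterns are treated as extended regular expressions. They are passed through the environment (`ENVIRON`) rather than `awk -v`, because `-v` would process the backslashes in `\(bug\)`.

```shell
# group_blocks START_RE END_RE (hypothetical helper)
# Read a log on stdin and print every block that starts at a line matching
# START_RE and ends at the next line matching END_RE. Each line is prefixed
# with its block number, and every block is closed with an "EOL" marker.
group_blocks() {
    # Pass the patterns through the environment so awk does not interpret
    # their backslash escapes, which awk -v would do.
    START_RE="$1" END_RE="$2" awk '
        BEGIN { start_re = ENVIRON["START_RE"]; end_re = ENVIRON["END_RE"]; group = 0 }
        # Range pattern: active from a start match through the next end match.
        $0 ~ start_re, $0 ~ end_re {
            print group "| " $0
            if ($0 ~ end_re) { print "EOL"; group++ }
        }
    '
}

# Usage with the ACPI patterns from above:
# journalctl -k | group_blocks 'ACPI BIOS Error \(bug\)' 'ACPI Error: AE_NOT_FOUND'
```

The output format matches the original script, so anything that consumes one can consume the other.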

Later, I could write some code that reads the output line by line and splits each line at the pipe (|), separating the group id from the message. It would then add that record to an array of arrays or hashes: [ {group: id, errors: [error string, ..., error string]} ]
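That later step can also stay in awk. The sketch below is one possible take on it, assuming input in the `group| message` format shown above. It skips the EOL markers and any line without a pipe, and prints a JSON array shaped like the structure just described. The function name `groups_to_json` is hypothetical. Only backslashes and double quotes are escaped: that is enough for these kernel messages, but it is not a complete JSON encoder.

```shell
# groups_to_json (hypothetical helper): read "group| message" lines on stdin,
# as printed by the awk script above, and print a JSON array of
# {group, errors} objects in the order the groups first appear.
groups_to_json() {
    awk '
        # Escape backslashes and double quotes for use inside a JSON string.
        function esc(s,    out, i, c) {
            out = ""
            for (i = 1; i <= length(s); i++) {
                c = substr(s, i, 1)
                if (c == "\\" || c == "\"") out = out "\\"
                out = out c
            }
            return out
        }
        /^EOL$/ { next }
        {
            pos = index($0, "|")
            if (pos == 0) next                # not a "group| message" line
            id  = substr($0, 1, pos - 1)
            msg = substr($0, pos + 1)
            sub(/^ /, "", msg)                # drop the space after the pipe
            if (!(id in seen)) { seen[id] = 1; order[++n] = id }
            sep = (errors[id] == "") ? "" : ", "
            errors[id] = errors[id] sep "\"" esc(msg) "\""
        }
        END {
            printf "["
            for (i = 1; i <= n; i++)
                printf "%s{\"group\": %s, \"errors\": [%s]}", (i > 1 ? ", " : ""), order[i], errors[order[i]]
            print "]"
        }
    '
}
```

The group ids come from the awk counter, so they are emitted as bare JSON numbers. The "Error - " header line printed by the original BEGIN block has no pipe and is simply skipped.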

openSUSE Talks at SUSECON Digital

SUSECON Digital 2020 starts today and it is free to register and participate in SUSE’s premier annual event. This year features more than 190 sessions and hands-on training from experts.

There are fewer than a handful of openSUSE-related talks. The first one is about openSUSE Kubic and takes place on May 20 at 14:00 UTC. Attendees will receive an update on the last year of openSUSE Kubic development and see a demonstration of deploying Kubernetes with Kubic-control on a Raspberry Pi 4 cluster. They will also see how to install new nodes with YOMI, the new Salt-based auto-installer that was integrated into Kubic.

The next talk is about open source licenses and focuses on the legal review app used by SUSE lawyers and the openSUSE community. The app, called Cavil, was developed as an open source application. It verifies the use of licenses in open source software and helps lawyers evaluate the risks related to software solutions. The talk, scheduled for June 3 at 13:00 UTC, will explain how easy the app is for lawyers to use and how the model significantly increases the throughput of the ecosystem.

The last openSUSE-related talk centers on openSUSE Leap and SUSE Linux Enterprise and takes place on June 10 at 13:30 UTC. Attendees will learn about the relationship between openSUSE Leap and SUSE Linux Enterprise 15, how Leap is developed and maintained, and how to migrate Leap instances to SLE.

The event as a whole is a great opportunity for people who are familiar with open source and openSUSE. People who just want to learn more about the technology, free of charge, can also experience the openness of SUSE at the event.

Cloud based workers for openQA


For those who do not know openQA, it is an automated test tool for operating systems and the engine at the heart of openSUSE’s automated testing initiative. It’s implemented mainly in Perl. By default it uses QEMU for starting and controlling the virtual machines, and OpenCV for fuzzy image matching. The general architecture is split in two:

- One openQA server, aka openQA web UI

- Multiple openQA workers, which run the tests

If you want to know more about openQA, please check the documentation.

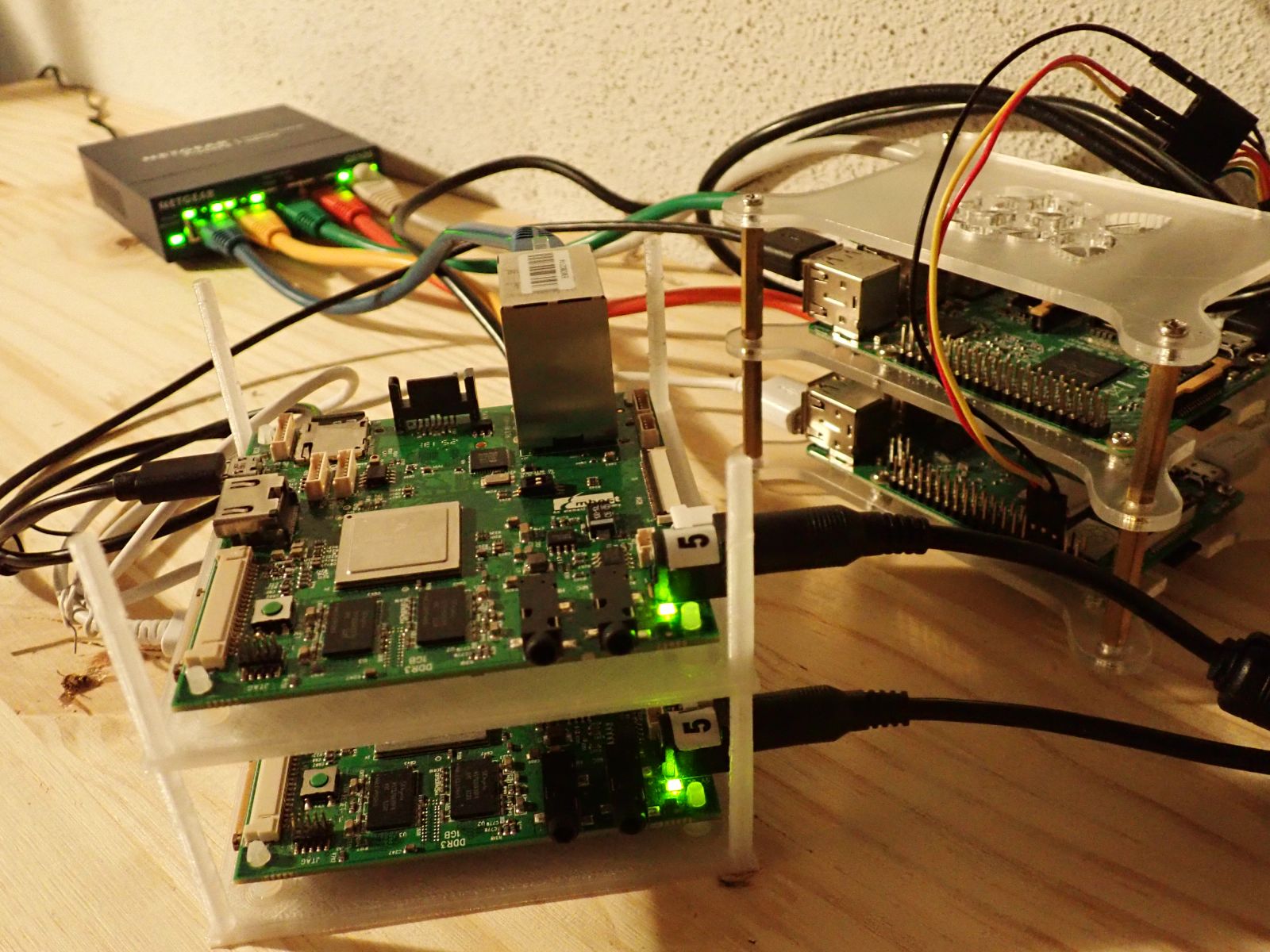

openQA workers, which run the tests, are generally on the same network as the openQA web UI server. That is fine most of the time. However, if additional hardware must be added, it has to be shipped physically and only a few people can take care of it, which can be problematic. One solution to this problem is to use cloud-based machines, which are by definition on a separate network and accessible through the Internet.

The good news is openQA supports such setups by using a local cache service on the worker. This service downloads the assets (ISO, HDD images, etc.) on demand through the openQA API via HTTPS, instead of using the legacy NFS mount method. Tests and needles are already in git repositories so they can be fetched from the remote git repositories directly instead of using them from the NFS share.

The first tests were done on local workers connected to openqa.opensuse.org (o3 for short), where the NFS share was replaced by the cache service. But these workers were still on the same network.

Then, more tests were performed with additional aarch64 workers on:

- Public cloud: an AWS EC2 A1 bare metal instance, which runs qemu/kvm-based tests

- Remote machines:

  - Marvell ThunderX2 and SolidRun HoneyComb LX2K, which also run qemu/kvm-based tests

  - A SoftIron OverDrive 1000, which runs a generalhw backend to perform tests on real hardware, currently a Raspberry Pi 2 and two Raspberry Pi 3 (B and B+)

The only caveat is developer mode. It requires the worker to be reachable from the openQA web UI server on specific ports, which here means reachable from the Internet. Unfortunately, this is not the case with the current aarch64 remote workers.

For QEMU-based tests, KVM-enabled systems are highly recommended. Otherwise you will get very poor performance, with runtimes about 10x slower than on KVM-enabled systems. So you need to use bare metal instances, or nested virtualization where available.
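A quick way to check whether a machine qualifies is to look for a usable KVM device. This is a minimal sketch, assuming the standard `/dev/kvm` device node. `kvm_usable` is a hypothetical helper. Keep in mind that it is the user running the openQA worker, not necessarily you, that needs read and write access.

```shell
# kvm_usable [DEVICE] (hypothetical helper): succeed if DEVICE, /dev/kvm by
# default, is a character device the current user can both read and write.
kvm_usable() {
    local dev="${1:-/dev/kvm}"
    [ -c "$dev" ] && [ -r "$dev" ] && [ -w "$dev" ]
}

if kvm_usable; then
    echo "KVM is usable: QEMU-based tests can run with hardware acceleration"
else
    echo "No usable /dev/kvm: expect QEMU-based tests to be much slower" >&2
fi
```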

Now, we will detail the specific configuration needed to set up a remote cloud worker that has access only to the openQA API.

Setup

Install required software on the worker

As any other openQA worker, you need to install some packages.

You likely want to use the latest version of openQA, and thus the binaries from the devel:openQA and devel:openQA:Leap:15.1 projects (adjust the URL if you do not use Leap 15.1):

sudo zypper ar -f https://download.opensuse.org/repositories/devel:/openQA/openSUSE_Leap_15.1/devel:openQA.repo

sudo zypper ar -f https://download.opensuse.org/repositories/devel:/openQA:/Leap:/15.1/openSUSE_Leap_15.1/devel:openQA:Leap:15.1.repo

If you use SLE15-SP1, you need to enable the matching repositories and also PackageHub:

sudo zypper ar -f https://download.opensuse.org/repositories/devel:/openQA/SLE_15_SP1/devel:openQA.repo

sudo zypper ar -f https://download.opensuse.org/repositories/devel:/openQA:/SLE-15/SLE_15_SP1/devel:openQA:SLE-15.repo

sudo SUSEConnect -p PackageHub/15.1/aarch64

Now, you can install required packages:

sudo zypper install openQA-worker os-autoinst-distri-opensuse-deps

Get API keys from openQA web UI host

Create a new set of API keys from openQA web UI or ask someone with admin permissions to create a set for you.

Setup worker to use API keys and cache

With a remote worker, you cannot NFS mount /var/lib/openqa/cache from the openQA server as only the openQA API is reachable. Instead, you must use the cache service as described below.

Update /etc/openqa/workers.ini with:

[global]
HOST = http://openqa.opensuse.org http://myotheropenqa.org
CACHEDIRECTORY = /var/lib/openqa/cache
CACHELIMIT = 50 # GB, default is 50.
CACHEWORKERS = 5 # Number of parallel cache minion workers, defaults to 5

Update /etc/openqa/client.conf with the key generated from web UI:

[openqa.opensuse.org]
key = 0123456789ABCDEF
secret = FEDCBA9876543210
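The HOST line above lists two web UIs, but client.conf only has keys for one of them. A small check can catch such gaps before the worker starts. This is a sketch under stated assumptions: `missing_client_keys` is a hypothetical helper. It assumes, as in the example above, that each client.conf section is named after the bare host name, without `http://`.

```shell
# missing_client_keys WORKERS_INI CLIENT_CONF (hypothetical helper)
# Print every host listed in the HOST setting of the [global] section of
# WORKERS_INI that has no matching [host] section in CLIENT_CONF.
missing_client_keys() {
    local workers_ini="$1" client_conf="$2" host
    # Extract the HOST value from [global]; the shell then splits it on spaces.
    for host in $(awk -F '=' '
        /^\[/ { in_global = ($0 ~ /^\[global\]/) }
        in_global && $1 ~ /^[ \t]*HOST[ \t]*$/ { print $2 }
    ' "$workers_ini"); do
        host="${host#*://}"     # strip http:// or https://
        host="${host%%/*}"      # strip any trailing path
        grep -qxF "[$host]" "$client_conf" || echo "$host"
    done
}

# Usage:
# missing_client_keys /etc/openqa/workers.ini /etc/openqa/client.conf
```

Run against the two example files above, it would report myotheropenqa.org.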

Start and enable the Cache Service:

sudo systemctl enable --now openqa-worker-cacheservice

Enable and start the Cache Worker:

sudo systemctl enable --now openqa-worker-cacheservice-minion

Synchronize tests and needles

Tests and needles are not part of the cache service. They are held in Git repositories, so you need to set up automatic updates of those repositories. Currently, the easiest way is to use the fetchneedles script from the openQA package to fetch the Git repositories. Then create a cron job that updates them often enough (say, every minute).

Install the required packages and run the fetch script for the first time:

sudo zypper in --no-recommends openQA system-user-wwwrun

sudo /usr/share/openqa/script/fetchneedles

Also, if you plan to run MicroOS tests:

cd /var/lib/openqa/share/tests

sudo ln -s opensuse microos

cd /var/lib/openqa/share/tests/opensuse/products/microos

sudo ln -s ../opensuse/needles/

Now, add a cron job in /etc/cron.d/fetchneedles that fetches tests and needles every minute:

-*/1 * * * * geekotest env updateall=1 /usr/share/openqa/script/fetchneedles

And restart the service:

sudo systemctl restart cron

Enjoy your remote worker

Now you can (re)start your worker(s):

sudo systemctl enable --now openqa-worker@1

Your remote worker should now be registered on the openQA server. Check the /admin/workers page in the openQA web UI. Enjoy!

Have a lot of fun!

Petition for the re-election of the openSUSE Board

Back in March, openSUSE member Pierre Böckmann wrote to the project mailing list calling for a non-confidence vote following recent events. Technically, that means he was calling for a re-election of the current elected Board.

The board election rules state:

If 20 per cent or more of the openSUSE members require a new board, an election will be held for the complete elected Board seats.

The openSUSE Election Committee was tasked with finding out whether 20% of the community is actually calling for the re-election.

We have at our disposal the Helios voting platform, which we can use to register an "answer" from community members. We decided not to run a vote with several answer options. Instead, we consulted among the Election Officials and agreed that there will be only one answer to select. That answer represents a virtual signature, similar to signing an electronic petition. This will allow us to effectively measure whether 20% of the community is petitioning for a re-election of the openSUSE Board.
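The threshold itself is a single comparison. This is a minimal sketch in shell integer arithmetic, where "20 per cent or more" becomes `100 * signatures >= 20 * members` to avoid fractions. `meets_threshold` is a hypothetical name, and the counts in the usage lines are made up, not real membership figures.

```shell
# meets_threshold SIGNATURES MEMBERS (hypothetical helper): succeed if
# SIGNATURES is at least 20 per cent of MEMBERS.
# Integer form: s/m >= 20/100  <=>  100*s >= 20*m
meets_threshold() {
    local signatures="$1" members="$2"
    (( 100 * signatures >= 20 * members ))
}

# Made-up figures, for illustration only:
if meets_threshold 100 500; then echo "100 of 500: a new election is required"; fi
if ! meets_threshold 99 500; then echo "99 of 500: below the 20% threshold"; fi
```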

On behalf of the Election Committee, I sent an email to the project mailing list explaining this process and calling for comments from community members. If you are reading this post and would like to share your views about the procedure, the deadline for submitting comments is 20 May 2020 23:59 CET.

Remaining openSUSE Services to Switch to New Authentication System

Dear Community

On Monday 18 May 2020 at 07:00 UTC, we will switch over all remaining openSUSE services to the new authentication system. At the same time, the openSUSE forums will also move to the new setup in Nürnberg.

Important:

The change of our authentication system will require you to set a new password for your user account.

If you have already changed your password for the Bugzilla or OBS migration, no action is needed besides using your new password.

For more details about the changes to the authentication system, its migration status and the re-registration verification, please go to https://idp-portal-info.suse.com.

openSUSE Tumbleweed – Review of the week 2020/20

Dear Tumbleweed users and hackers,

This week we had some trickier changes entering our beloved distro, which might even have caused some (minor) problems for you while running zypper dup. It’s about how we package symlinks in our RPMs. So far, brp-check-suse converted symlinks to absolute ones, and then rpm warned about exactly that. No way of pleasing the packager, right? The brp policy has been updated, and symlinks are now all converted to relative ones. Almost all, that is: links to /dev stay absolute, e.g. /dev/null. This has some advantages when inspecting chroots or other layouts that could be mounted somewhere else. The issue was that not everything got rebuilt in one go, which resulted in zypper complaining about file conflicts. The error was safe to ignore, but annoying. In total, we released 5 snapshots this week (0507, 0508, 0509, 0511 and 0513) containing these changes:

- Absolute->Relative symlink changes, as described

- Mozilla Firefox 76.0

- Linux kernel 5.6.11

- KDE Plasma 5.18.5

- KDE Frameworks 5.70.0

- NetworkManager 1.24.0

- Libvirt 6.3.0

- GCC 10.1 (still not the default compiler)
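The advantage of relative symlinks mentioned above is easy to demonstrate. The sketch below assumes GNU coreutils, for `ln -r`, which computes the relative path for you. It uses made-up paths in a temporary directory. It links the same file once absolutely and once relatively, then moves the tree as if it were a chroot mounted elsewhere. Only the relative link still resolves.

```shell
# Build a tiny tree in a temporary directory (made-up paths, demo only).
demo=$(mktemp -d)
mkdir -p "$demo/root/usr/lib" "$demo/root/usr/bin"
echo "payload" > "$demo/root/usr/lib/tool"

# Absolute link: stores the full path, so it breaks when the tree moves.
ln -s "$demo/root/usr/lib/tool" "$demo/root/usr/bin/tool-abs"
# Relative link: GNU ln -r computes the path from the link location.
ln -sr "$demo/root/usr/lib/tool" "$demo/root/usr/bin/tool-rel"

# Simulate looking at the same tree mounted somewhere else, e.g. a chroot.
mv "$demo/root" "$demo/moved"

readlink "$demo/moved/usr/bin/tool-rel"    # prints ../lib/tool
cat "$demo/moved/usr/bin/tool-rel"         # prints payload
if [ ! -e "$demo/moved/usr/bin/tool-abs" ]; then
    echo "tool-abs is now dangling"
fi
# The demo directory is left for inspection; remove it with: rm -rf "$demo"
```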

The staging projects are still filled up with these major changes:

- Inkscape 1.0

- TeXLive 2020

- RPM change: %{_libexecdir} is being changed to /usr/libexec. This exposes quite a lot of packages that abuse %{_libexecdir} and fail to build

- Qt 5.15.0 (currently release candidate is staged)

- Guile 3.0.2: breaks gnutls’ test suite, but it passes when built with gcc10

- GCC 10 as the default compiler

Updated KDE Frameworks, Redis Arrive in Tumbleweed, Curl Gets New Experimental Feature

Ninety-nine seems to be the new norm for openSUSE Tumbleweed. The rolling release trends and posts stable ratings of 99 for every snapshot this month, according to the Tumbleweed snapshot reviewer.

Among the packages to arrive in this week’s updates were Curl, OpenConnect, KDE’s Plasma and Frameworks as well as a major version update of Redis.

KDE Frameworks 5.70.0 arrived in snapshot 20200511. These libraries for programming with Qt introduced a small font theme for Kirigami and improved icon rendering on multi-screen, multi-DPI setups. KConfig added the standard shortcut for “Show/Hide Hidden Files” with the Alt+ keys. The font rendering package freetype2 updated to version 2.10.2 and dropped support for Python 2 in FreeType’s API reference generator. This version also supports Type 1 fonts with non-integer metrics through the new Compact Font Format engine introduced in FreeType 2.9. The 1.45.6 e2fsprogs package, for maintaining the ext2, ext3 and ext4 file systems, improved e2fsck’s handling of file systems with a very large number of directories, where various data structures can take more than 2GB of memory. The new version uses better structure packing to make these data structures more memory efficient. The libressl 3.1.1 package completed an initial Transport Layer Security 1.3 implementation with a completely new state machine and record layer. TLS 1.3 is now enabled by default for the client side, with the server side to be enabled in a future release. The changelog noted that the OpenSSL TLS 1.3 API is not yet visible/available. RubyGem had a plethora of package updates in the snapshot. rubygem-fluentd 1.10.3 had some refactored code and enhancements like adding a set method to record_accessor. The rubygem-activerecord-6.0 6.0.3 package fixed support for PostgreSQL 11+ partitioned indexes. Its changelog recommends that applications not use the database Keyword Argument (kwarg) in connected_to. That kwarg is meant for one-off scripts but is often used in requests, which is a dangerous practice because it re-establishes a connection every time. It’s deprecated in 6.1 and will be removed in 6.2 without replacement.

The Remote Desktop Protocol (RDP) client freerdp had multiple Common Vulnerabilities and Exposures fixes in the 20200509 snapshot. The 2.1.0 version improved server certificate support, made various fixes for the Wayland client, and fixed leaks and crashes. The 6.3.0 libvirt toolkit now supports the VirtualBox 6.0 and 6.1 APIs. The new version also added support for the ‘passthrough’ hypervisor feature, a Xen-specific option new in Xen 4.13 that enables PCI passthrough for guests; it must be enabled to allow hotplugging PCI devices. The Lightweight Directory Access Protocol package openldap2 2.4.50 has fixes for the stand-alone LDAP daemon, and several YaST packages were updated to version 4.3.0.

A major version update of shared-mime-info, 2.0, was delivered in snapshot 20200508. The package allows central updates of Multipurpose Internet Mail Extensions information for all supporting applications. It now installs an ITS file that lets gettext, the internationalization and localization (i18n and l10n) system, translate MIME type descriptions. Experimental support for the MQTT protocol was added in curl 7.70.0. The ghostscript 9.52 package forked LittleCMS2 into LittleCMS2mt (“multi-thread”). SSL VPN client openconnect 8.09 fixed a CVE regarding OpenSSL validation of trusted but invalid certificates. The wireless-regdb package is a database of legal regulations on radio emissions. Its update to version 20200429 changed the rules for the United States on 2.4 and 5 GHz.

The 20200507 snapshot that started off the week brought several package updates like ImageMagick 7.0.10.10, Mozilla Firefox 76.0 and Thunderbird 68.8.0. The new Firefox strengthens the protection of online account logins and passwords and automatically generates secure, complex passwords for new accounts. A major version update of Redis, 6.0.1, arrived in the snapshot as well. In the new release of the in-memory data structure store, the ACL GENPASS command now uses HMAC-SHA256 and has an optional “bits” argument. This means it can be used as a general purpose “secure random strings” primitive. The Plasma 5.18.5 update also arrived in the snapshot, with many fixes in this minor release of KDE’s Long-Term Support version. Some of the packages removed a too-strict Qt/KF5 deprecation rule, and KWin had a fix to avoid a potential crash on Wayland. Other packages updated in the snapshot were the terminal multiplexer tmux 3.1b, mail client mutt 1.14.0 and the 5.6.11 Linux kernel.

btrfs: making "send" more "capable"

What’s new in openSUSE Leap 15.2

openSUSE Leap 15.2 entered the Beta phase on 25 February 2020. I recently installed it on my laptop to check it out. Leap 15.2 will coincide with SUSE Linux Enterprise Desktop 15 Service Pack 2. Leap and SLED share a lot of underlying packages, so this will (again) be a rock-solid release.

openSUSE Leap 15.2 features many big updates. This includes a new version of the KDE desktop environment, a new version of the GNOME desktop environment and a new Linux kernel. In the Leap 15.2 column of the table below, I have highlighted in green the packages that are significantly changed in comparison to Leap 15.1. And in blue, I have highlighted the packages that are changed compared to Leap 15.1 at time of its release, but which are now also available in updated Leap 15.1 installations.

openSUSE Tumbleweed is a rolling distribution, so it will always change. The Tumbleweed column features a snapshot of the situation at the end of April 2020. I have only highlighted in green the packages that are significantly newer than the packages in Leap 15.2. This shows the areas where Leap 15.2 is behind the software development curve. Staying a bit behind the curve is not necessarily a bad thing. You install openSUSE Leap because you don’t want to deal with constant change. You install openSUSE Tumbleweed if you want to stay on the cutting edge. They both have their own audiences. This comparison helps (future) openSUSE users understand the main differences between Leap 15.2 and Tumbleweed, so they can figure out which of these distributions is right for them.

| Package name | Leap 15.1 | Leap 15.1 with updates | Leap 15.2 | Tumbleweed (Apr 2020) |
|---|---|---|---|---|
| Amarok | 2.9.0 | 2.9.0 | 2.9.7 | 2.9.7 |
| Audacity | 2.2.2 | 2.2.2 | 2.2.2 | 2.3.3 |
| Calibre | 3.40 | 3.40 | 3.48 | 4.13 |
| Calligra suite | 3.1.0 | 3.1.0 | 3.1.0 | 3.1.0 |
| Chromium browser | 73 | 81 | 81 | 81 |
| Darktable | 2.6.2 | 2.6.3 | 2.6.3 | 3.0.2 |
| Digikam | 6.0 | 6.0 | 6.4 | 6.4 |
| Flatpak | 1.2.3 | 1.2.3 | 1.6.3 | 1.6.3 |
| GIMP | 2.8.22 | 2.8.22 | 2.10.12 | 2.10.18 |
| GNOME Applications | 3.26 | 3.26 | 3.34 | 3.36 |
| GnuCash | 3.0 | 3.0 | 3.9 | 3.9 |
| Hugin | 2018.0 | 2018.0 | 2019.2 | 2019.2 |
| Inkscape | 0.92.2 | 0.92.2 | 0.92.2 | 0.92.4 |
| KDE Applications | 18.12.3 | 18.12.3 | 19.12.3 | 19.12.3 |
| KDE Plasma 5 desktop | 5.12.8 | 5.12.8 | 5.18.4 | 5.18.4 |
| Krita | 4.1.8 | 4.1.8 | 4.2.9 | 4.2.9 |
| LibreOffice | 6.1.3 | 6.4.2 | 6.4.2 | 6.4.3 |
| Linux kernel | 4.12.14 | 4.12.14 | 5.3.18 | 5.6.4 |
| Mozilla Firefox ESR | 60.6 | 68.7 | 68.6 | 68.7 |
| Mozilla Thunderbird | 60.6 | 68.7 | 68.6 | 68.7 |
| OpenShot | 2.4.1 | 2.4.1 | 2.4.1 | 2.5.1 |
| Rapid Photo Downloader | 0.9.09 | 0.9.14 | 0.9.22 | 0.9.22 |
| Scribus | 1.4.7 | 1.4.7 | 1.5.5 | 1.5.5 |
| Telegram | 1.6.7 | 1.6.7 | 2.0.1 | 2.1.0 |
| VLC | 3.0.6 | 3.0.9 | 3.0.7 | 3.0.9 |
| YaST | 4.1.68 | 4.1.75 | 4.2.82 | 4.2.82 |
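A comparison like the one in this table can also be scripted. The sketch below is not how the table was produced. It assumes GNU `sort -V` (version sort) and uses a few rows copied from the table to report whether Tumbleweed ships a newer version than Leap 15.2. `version_lt` is a hypothetical helper name.

```shell
# version_lt A B (hypothetical helper): succeed if version A sorts strictly
# before version B according to GNU sort -V.
version_lt() {
    [ "$1" != "$2" ] &&
        [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# Rows copied from the table: name, Leap 15.2 version, Tumbleweed version.
while read -r name leap tw; do
    if version_lt "$leap" "$tw"; then
        echo "$name: Tumbleweed is newer ($leap -> $tw)"
    else
        echo "$name: Tumbleweed is not newer ($leap vs $tw)"
    fi
done <<'EOF'
Calibre 3.48 4.13
GIMP 2.10.12 2.10.18
Krita 4.2.9 4.2.9
Inkscape 0.92.2 0.92.4
EOF
```

A plain string comparison would get cases like 2.8.22 versus 2.10.12 wrong, which is why version sort is used here.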

Software package updates

Chromium moved from version 73 to version 81, and a lot has changed. Important new features include:

- Forced Dark Mode, which enables dark mode on every website
- the Silent Notification Popup feature, which groups notifications under a special icon in the URL address bar
- Tab Freezing, which unloads tabs that have not been used for 5 minutes (saving CPU and RAM)

A lot of security improvements were made as well. These include DNS over HTTPS (experimental), where DNS (domain lookup) requests are sent over a secure connection. Mixed HTTP/HTTPS content is now auto-upgraded to HTTPS. The Password Checkup utility checks whether your saved passwords have been breached, and the Predictive Phishing feature warns users if they are about to enter their passwords on a phishing website.

Digikam 6.4 features a lot of changes compared to Digikam 6.0. The 15-year-old KIPI plugin interface was swapped for the much improved DPlugins interface. This allows plugins to work on all aspects of the Digikam application and its accompanying tools, and a lot of new plugins take advantage of that. There is a new plugin to use images as your desktop wallpaper. The G'MIC-Qt plugin adds a lot of filters (comparable to GIMP and Krita). A RAW import tool plugin allows configurations from Darktable, RawTherapee and UFRaw to be imported via the Image Editor. And finally, a new Clone Tool plugin allows you to clone parts of an image. The new LibRaw 0.19.3 library allows more RAW formats to be imported into Digikam.

Flatpak 1.6.3 changed the protocol and API for handling authentication, making it more secure, flexible and future-proof.

GIMP 2.10.12 is a major update compared to GIMP 2.8.22. It has a new image processing engine called GEGL, which makes use of multi-threading, making GIMP much faster. GIMP now works in a linear RGB color space, and color management is now a core feature. GIMP 2.10 has better file format support, including reading and writing TIFF, PNG, PSD and FITS files. The interface is also improved: it now has HiDPI support and the icons are more stylized. GIMP 2.10 has better transformation tools, better selection tools, an improved text tool and a number of digital painting and digital photography improvements. If you want a detailed description of all improvements, check the release notes.

The GNOME desktop and applications are updated from version 3.26 to 3.34, a difference of 4 major releases. GNOME Shell has seen many performance improvements, so it feels much more responsive. The Adwaita theme has gotten an overhaul and now features a flat style and more beautiful icons. openSUSE Leap 15.2 also allows you to install the Yaru theme (Ubuntu) and the Pop!_OS theme (System76). The touch keyboard is much improved and now features emoji. And finally, you can group apps into folders in the Activities overview.

Many GNOME applications feature UI improvements, including Web, Settings, Contacts, Notes and Terminal. The Photos application features new editing tools. The Files application features a new unified navigation/search bar. Web also features a new reader mode and the option to enable hardware acceleration. GNOME Software features a faster search engine. There are many improvements to the Boxes virtualization application, but according to a recent Ars Technica article it is still less usable than Virt-manager. There are also 3 new applications. Fractal is a Matrix chat client. Matrix works similarly to WhatsApp and Telegram, but it is open source and decentralized. It is co-developed and heavily used by the French government to make sure that their conversations stay private. Usage is a new (basic-looking) performance monitoring tool. And Podcasts is (obviously) a podcast player.

GnuCash 3.9 features many improvements for your accounting needs. Usability is improved by better tooltips and multi-selection in the import transaction matcher. The import matcher can also assign a single target account to more than one transaction. Reporting is improved too, including a full rewrite of the Customer/Employee/Vendor reports, improvements to the Transaction report and a new user-customizable CSS-based stylesheet. Currency handling is also improved. The summary bar uses the default currency. Bills, invoices and credit notes use the customer or vendor currency instead of the default currency.

Hugin 2019.2 can now convert the RAW images to TIFF with a new RAW converter.

Inkscape remains on version 0.92.2, which is unfortunate, as Inkscape 1.0 was just released and contains many new features.

KDE Applications were updated from 18.12.3 to 19.12.3, which amounts to 3 releases, and a lot of improvements were made over the course of that year.

- Dolphin (file manager) can now be launched from anywhere via the Windows + E keyboard shortcut. It handles the rendering of thumbnails much better and now includes previews of .cb7 files.
- Gwenview (image viewer) has better touchscreen and HiDPI support, and its thumbnails load faster. It has a new share menu to share images via email, phone, Nextcloud or Twitter, and it now integrates with Krita out of the box.
- Okular (document viewer) has improved touchscreen support. It now supports viewing and verifying digital signatures in PDF files. It also adds support for LaTeX documents, improves ePub support and has improved annotation features.

In terms of productivity, KMail (e-mail client) has seen some improvements. It now has Unicode color emoji and Markdown support. It integrates with grammar checkers such as LanguageTool and Grammalecte. And it detects phone numbers, which can be dialed via KDE Connect. KOrganizer (calendar) improved its support for Google Calendar. A new travel assistant application called KItinerary was also added.

In terms of multimedia, Kdenlive (video editor) has seen a big code rewrite. It should now be faster and easier to use. It features new keyboard and mouse shortcuts to make you more productive, and it comes with a new sound mixer to help you synchronize music with video clips. Elisa is a new KDE music player. It looks quite similar to Amarok, which has been my go-to music player for the last 10 years, and it looks interesting enough to give it a try. Amarok hasn’t had any new releases over the last 2 years, so maybe it’s time to switch.

In terms of utilities, Spectacle (my new favorite screenshot tool) now lets you configure what happens when you press the screenshot button while the application is open. If you choose to delay a screenshot, a neat taskbar indicator shows when it will be taken. And you can now use the touchscreen to select the area that you want to capture. Kate (text editor) added a quick open feature to open your most recent files. Konsole (terminal) now has an awesome tiling feature that lets you split panes horizontally and vertically as many times as you like. Calligra Plan (planner) finally gets a new release; it helps you plan your projects Gantt style. KDE Connect can now write and read SMS messages and control your phone’s volume from your desktop. The Plasma Browser Integration plugin gained a nice multimedia feature: you can now blacklist sound sources from certain websites.

The Plasma desktop has changed from version 5.12.8 to version 5.18.4. That is 6 major releases, so there are many changes to look forward to. A lot of effort has gone into the look and feel of the desktop. The login screen and lock screen were completely redesigned and look much better. The system settings were also totally redesigned, making them better suited for small-screen (read: mobile) devices. The Breeze icons have also seen some adjustments to make them look sharper. GTK support is much improved: GTK applications now respect KDE settings and KDE color schemes, have shadows on X11 and support the KDE global menu. The Color Scheme and Window Decoration pages were redesigned. And you can now set a ‘Picture of the Day’ as your wallpaper, which is a really cool feature.

If you say KDE 5, you say widgets. And widgets are now easier to rearrange than ever: you can put the desktop into Global Edit mode and easily drag and resize all widgets. The notification system has been completely rewritten and now includes a ‘Do not disturb’ feature. The system tray now shows a warning whenever audio is recorded, enhancing your privacy. A new widget for display configuration is useful if you have multiple monitors or often work from different places. The power widget now shows the battery status of Bluetooth devices. The network manager widget now includes support for WireGuard VPN tunnels, and refreshing WiFi networks is faster. And a new system tray widget lets you control the Night Color feature.

The Discover software manager is much improved, which was really necessary. I intend to address the current state of KDE Discover and GNOME Software on Leap 15.2 in a separate post. You can now sort lists and category pages, including by release date. Progress bars and spinners were added to various parts of the application. A new updates page has sections for ‘downloading’ and ‘installing’ applications, and you can deselect individual updates there. Discover can update your firmware via the ‘fwupd’ project. This is a very nice feature, but it only works on supported hardware. It can also update a full Linux distribution. However, I imagine this feature works better on KDE neon than on openSUSE, where YaST is most likely superior. Discover can auto-install the Flatpak back-end if it’s not installed by default, and it now supports Snap channels.

KDE Plasma 5.18 has much improved support for Wayland (the newer display server protocol), including initial support for the proprietary NVIDIA drivers. KWin (the window manager) now supports fractional scaling on Wayland, which is useful on HiDPI screens. Night Color is now available on X11 (the older display server). You can now manage your Thunderbolt devices from the system settings. And the desktop is much more responsive.

Krita is now at version 4.2.9 and supports painting in HDR. Brush speed is much improved, the color palette is easier to use, the artistic color selector has been cleaned up, and a new color gamut feature was added. A lot of bug fixes make the application more stable than ever.

LibreOffice moved from version 6.1.3 to version 6.4.2. The tabbed user interface (which looks similar to the Microsoft Office Ribbon interface) has moved out of the experimental stage and is now fully baked. Writer has seen many improvements: you can now place comments on images and charts, mark comments as resolved, hide tracked changes and set the text direction in text frames. You can avoid overlapping shapes by selecting an auto-detection mode. Handling of tables is much improved, and the sidebar now has a panel for table actions. Calc has gained a new multivariate regression analysis, and data validation now supports custom formulas, which is very handy. In Impress, you can now consolidate multiple text boxes into one. Across the suite, Microsoft Office document formats are better supported and open faster.

The Linux kernel was updated to version 5.3.18. A lot of improvements have gone into the kernel since the previous version, although some of them were already backported into the openSUSE 4.12.14 kernel. In this paragraph I will detail some of the major changes between the official 4.12 and 5.3 kernels. Intel has added Comet Lake, Cannon Lake and Coffee Lake support. Intel Ice Lake Gen11 graphics are now supported, and Ice Lake and Gemini Lake now support HDR displays. Nouveau, the open source NVIDIA driver, has gained support for the GeForce GTX 1650, GeForce GTX 1660 and GeForce GTX 1660 Ti, as well as for HDMI 2.0. AMD added various Ryzen laptop and Threadripper improvements, support for the Radeon RX Vega M and for Navi GPUs (including the Radeon RX 5700), and FreeSync display support (for supported GPUs and displays).

The Linux kernel has improved Btrfs performance and features, including support for swap files and Zstd compression. Realtek WiFi drivers were added for the RTW88 and RTL8822BE chips, and the Realtek R8169 driver was updated. The kernel now has full support for the Raspberry Pi 3B and 3B+, and it added support for the Raspberry Pi touchscreen. It also has MacBook and MacBook Pro keyboard support. Significant power savings were achieved on certain hardware by optimizing idle time. And mitigations for the Spectre vulnerability were added for most hardware architectures.

Mozilla Firefox (Extended Support Release) was updated from 60.6 to 68.6. This is a relatively old version, as the regular release of Firefox is now at version 76. I advise everyone to add the Mozilla repository and switch to the non-ESR version of Firefox. But if you use Firefox 68 ESR, there are some nice improvements. In the user interface, the new Firefox Home (which you see when you open a new tab) lets you display up to 4 rows of top sites, Pocket stories and highlights. You can now select multiple tabs in the tab bar and close, move, bookmark or pin them all at once. The toolbar now shows your Firefox Sync status. Firefox now prevents websites from automatically playing sound. The dark theme has seen some improvements, including a dark mode for the reader view. And you can now save passwords in private browsing mode. There are various technical enhancements as well. Most noticeable are the performance improvements (thanks to Quantum CSS improvements and Clang link-time optimization) and smoother scrolling. Firefox now supports CSS Shapes and variable fonts. It supports the WebP image format and uses TLS 1.3 by default. Another nice improvement is that WebExtensions now run in their own process on Linux.

Mozilla Thunderbird moved to version 68, which is the most recent version. There are some small improvements in comparison to Thunderbird 60. You can now mark all folders of an account as read. Language packs can now be selected in the advanced options. Only WebExtension themes and WebExtension dictionaries are supported in this new version.

Rapid Photo Downloader is now at version 9.22. A notable addition is support for Canon's CR3 RAW format. However, this is not supported on openSUSE Leap 15.2 because Leap ships an older ExifTool version. A forward-looking addition is support for the HEIF/HEIC file format.

Scribus has been updated to version 1.5.5. Most of the changes are under the hood. However, there are some nice improvements, such as the ability to use Scribus with a dark UI color scheme and the possibility to search for a particular function (like in GIMP).

Telegram Desktop has moved to version 2.0. Notable improvements are the ability to organize chats into folders, pin an unlimited number of chats in each folder, watch videos in picture-in-picture mode, rotate photos and videos in the media viewer, autoplay videos, schedule a message to be sent at a later time, and set reminders for yourself in the Saved Messages chat.

Installation options

The standard installer comes as a full DVD image (with lots of included packages for offline installation) and as a (smaller) network installer image. You can download these images for PC (x86_64), ARM (AArch64) and OpenPOWER (ppc64le) hardware.

Live images are available for GNOME and for KDE. These images allow you to try openSUSE Leap 15.2 Beta without installing it to your hard drive. There is also a (live) Rescue image available. JeOS can be used in a virtualized (cloud) environment, and images are available for KVM, Xen, Hyper-V, VMware and the OpenStack Cloud. You can find all images for openSUSE Leap 15.2 Beta here.

Published on: 13 May 2020

Jekyll features - PlantUML base64 plugin (gems)

Are you a software engineer who loves code? Then maybe you also love diagrams (I said maybe!). There are lots of kinds of diagrams, and we need them to capture requirements, draft a code base, map network topology, lay out timelines and more. So diagrams are important.

This blog is built with Jekyll, and I was looking for a solution that lets me draw diagrams when needed, in combination with Markdown syntax. This should be possible.

I prefer PlantUML over the other tools that are available. It converts my plain text into a diagram, and plain text is easy to keep under revision control. PlantUML supports lots of diagram types, such as sequence, use case, class, activity, component, state, object, deployment and timing diagrams, Gantt charts and many more. There are a few existing plugins on RubyGems, but the one I chose needed a little touch-up.

I forked "jekyll-remote-plantuml" and changed a few bits :smirk:

Because storing generated images in the repository would make it grow in size, I decided to convert each image to base64 every time the Jekyll website is built, and to cache the downloaded images during CI/CD.

It's a bit silly, but I made some code modifications to accomplish my objective. So thank you to the original developer of the jekyll-remote-plantuml gem for the awesome work.

What and why?

My plugin, named jekyll-plantuml-base64, does everything jekyll-remote-plantuml does, except that the image is converted to base64.
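Here is a minimal Ruby sketch of that conversion step. It assumes the diagram arrives as PNG bytes. The helper name `image_to_data_uri_tag` is hypothetical and is not taken from the plugin's source. The helper embeds the image as a base64 `data:` URI inside an `<img>` tag, so no image file ends up in the repository:

```ruby
# Hypothetical helper (not the plugin's actual code): embed raw image bytes,
# e.g. a PNG fetched from a PlantUML server, as a base64 data URI.
def image_to_data_uri_tag(bytes, mime: "image/png", alt: "diagram")
  # pack("m0") is strict base64 and keeps the whole URI on one line;
  # plain pack("m") would insert a newline every 60 encoded characters.
  encoded = [bytes].pack("m0")
  %(<img src="data:#{mime};base64,#{encoded}" alt="#{alt}">)
end

# Example with the 8-byte PNG signature instead of a real image file.
puts image_to_data_uri_tag("\x89PNG\r\n\x1A\n".b)
```

`Array#pack` is core Ruby, so this sketch does not depend on the `base64` library.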

Why base64? Because I don't need to store the image in my repository, and there is a trick to cache it during CI/CD so that the next build is faster.
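The post does not show the caching trick itself, so here is one possible approach as a Ruby sketch. It assumes your CI service can persist a directory between builds. Most services offer a cache feature, but the configuration differs from one service to another. The class name `DiagramCache` and the `.png` file layout are hypothetical. Each diagram is stored under the SHA-256 hash of its source text, so an unchanged diagram is never downloaded again:

```ruby
require "digest"
require "fileutils"

# Hypothetical on-disk cache (not the plugin's actual code). Keeping
# cache_dir between CI builds means later builds skip the remote call.
class DiagramCache
  def initialize(cache_dir)
    @cache_dir = cache_dir
    FileUtils.mkdir_p(@cache_dir)
  end

  # Returns the cached bytes for +source+. On a cache miss, calls the block
  # (e.g. an HTTP request to a PlantUML server), stores the result and
  # returns it.
  def fetch(source)
    path = File.join(@cache_dir, Digest::SHA256.hexdigest(source) + ".png")
    return File.binread(path) if File.exist?(path)

    bytes = yield(source)
    File.binwrite(path, bytes)
    bytes
  end
end
```

In a plugin, the block would wrap the download from the PlantUML server. The returned bytes would then go through the base64 step.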

How to install?

Option 1: To install this plugin on Jekyll, you just have to follow the plugin installation guidelines in the Jekyll documentation.

Option 2: Use RubyGems: add gem 'jekyll-plantuml-base64', '~> 0.1.4.36' to your Gemfile, then run bundle install.

Option 3: Pull the gem directly from Git: add gem 'jekyll-remote-plantuml', '0.1.4.36', git: 'https://github.com/RobbiNespu/jekyll-remote-plantuml' to your Gemfile, then run bundle install.

How to use?

To use the jekyll-plantuml-base64 plugin, you just have to wrap your text between the {% plantuml %} and {% endplantuml %} tags.

For example, to create a basic diagram between Bob and Alice, you can write the following code:

which will generate:

As you can see, the image is created (the binary is retrieved from a remote provider), stored and re-encoded as base64 before being displayed on your website.

Cool huh?
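For the "remote provider" part, the public PlantUML server expects the diagram text inside the URL. The text is compressed with raw DEFLATE and then encoded with PlantUML's own 64-character alphabet, as described in PlantUML's text-encoding documentation. It is an assumption that jekyll-plantuml-base64 builds its URLs exactly this way. The Ruby sketch below only illustrates the scheme. The server URL is the public plantuml.com one, which you may want to replace with your own instance, and the method names are hypothetical:

```ruby
require "zlib"

# PlantUML's 64-character alphabet (different from standard base64).
PLANTUML_ALPHABET = ("0".."9").to_a + ("A".."Z").to_a + ("a".."z").to_a + ["-", "_"]

# Raw DEFLATE (negative window bits = no zlib header or checksum).
def deflate_raw(text)
  z = Zlib::Deflate.new(Zlib::BEST_COMPRESSION, -Zlib::MAX_WBITS)
  out = z.deflate(text, Zlib::FINISH)
  z.close
  out
end

# Encode every 3 bytes as 4 characters, padding the last group with zeros.
def plantuml_encode64(data)
  data.bytes.each_slice(3).map do |group|
    b1, b2, b3 = group + [0] * (3 - group.size)
    [b1 >> 2,
     ((b1 & 0x3) << 4) | (b2 >> 4),
     ((b2 & 0xF) << 2) | (b3 >> 6),
     b3 & 0x3F].map { |c| PLANTUML_ALPHABET[c] }.join
  end.join
end

# Hypothetical helper: URL of the PNG rendering of +source+.
def plantuml_url(source, server: "https://www.plantuml.com/plantuml")
  "#{server}/png/#{plantuml_encode64(deflate_raw(source))}"
end

puts plantuml_url("Bob -> Alice : hello")
```

The bytes downloaded from that URL are what the plugin would then cache and turn into base64.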


foursixnine