Highlights of YaST Development Sprint 85

The Contents

- Encryption got so many improvements that we are writing a separate post (stay tuned).

- Refactored Network on its way to Tumbleweed.

- When you fix a corner case, automatic tests will break in a different corner.

- Not only in flight simulators but also in storage device graphs it matters which way is up and which is down.

Refactored Network on its way to Tumbleweed

A few weeks ago we submitted the first round of changes to the network module to Tumbleweed. At that point, it was still using the old data model for most operations (except routing and DNS handling) and a lot of work remained to be done.

We have been working hard on improving the overall quality of this module and we will submit an updated (and much improved) version in the upcoming days. To summarize, here are some highlights:

- Completed the new data model (support for TUN/TAP, bridges, bonding, VLANs, etc.).

- New wireless configuration workflow.

- Revamped support for interface renaming and driver assignment, including better udev rule handling.

- Fixed /etc/hosts handling when switching from a static to a DHCP-based configuration.

- Many small fixes in several areas.

Are we done with the refactoring? No, we are still working on improving S390 support and fixing small issues, but most of the work is already done.

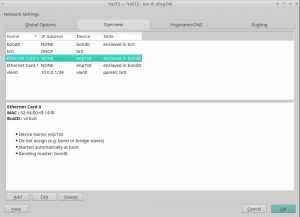

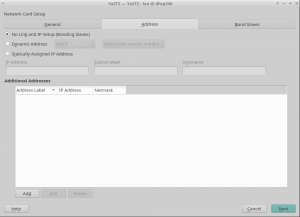

Of course, as soon as we finish, we will publish a blog entry with the gory details. But, as we know that you love screenshots, let us show you a handful of them.

Although we have not introduced big user interface changes, we have tried to improve small things, like properly displaying whether an interface belongs to a VLAN or hiding the "Hardware" tab for virtual interfaces.

DNS resolution not working during installation, or: openQA is different

When we got a bug report that DNS resolution was not working during installation (since SLE-15, apparently), a solution seemed straightforward: /run/netconfig/resolv.conf was missing because the /run directory was not mirrored (bind-mounted) into the target system. That’s a task that used to be done by yast-storage in times before SLE-15 and was, for some unknown reason, forgotten when we implemented yast-storage-ng. A one-line fix was easily done, tested, and submitted.

Or so it seemed.

A few days later we got reports from SLE openQA that tests had started to fail with this patch. Nothing networking related, but the installation did not finish because the 10-second countdown dialog (‘going to reboot’) at the very end of the installation was frozen. The UI didn’t accept any input whatsoever. But whatever we tried, the issue was not reproducible outside openQA. YaST logs from openQA showed that /run got mounted as planned and was cleanly unmounted at the end of the installation – before that frozen dialog. So there was no clue so far, and the issue was set aside for a while, until the same reports came in from Tumbleweed testing. It was clearly linked to this one-line patch. But how?

It stayed a mystery until a chat with an openQA expert shed some light on the issue. What we thought was happening was this: openQA stopped the dialog (by pressing a button), and when it tried to continue, the OK button did not respond anymore. What we learned actually happens is this: openQA stops the dialog, then switches from X to the text console, collects logs, switches back to X, and then the UI does not respond anymore. So that was quite an essential point missing.

And with this it was easily reproduced outside openQA: the X logs showed that the X server lost all its input devices after the switch. And that was because someone had deleted the whole /run directory. The YaST logs didn’t contain a hint (of course not, that would have been too easy), but grepping the sources found the place where YaST deleted the directory. The code had been added after complaints that the installation left a cluttered /run directory. Of course the installation did leave files there, since we had forgotten to bind-mount the directory. So once the mentioned patch bind-mounted it again, the deletion code cleaned up not only /run in the installation target system but the real /run as well, cutting off the X server from the outside world and freezing the openQA test.
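One defensive pattern against this class of bug, sketched in Python (YaST itself is written in Ruby, and this is not its actual code): refuse to empty a directory that is still a mount point, so that a leftover bind mount can never turn a cleanup of the target system into a cleanup of the host. The helper name `clean_dir_contents` is hypothetical.

```python
import os
import shutil


def clean_dir_contents(path: str) -> bool:
    """Delete everything inside `path`, unless `path` is a mount point.

    Hypothetical helper, not YaST code. If `path` is still a bind mount of
    the host's /run, its contents *are* the host's /run, so deleting them
    would also delete files that running programs (e.g. the X server) use.
    """
    if os.path.ismount(path):
        # Still mounted (e.g. bind-mounted): refuse; the caller must unmount first.
        return False
    with os.scandir(path) as it:
        entries = list(it)  # materialize before deleting anything
    for entry in entries:
        if entry.is_dir(follow_symlinks=False):
            shutil.rmtree(entry.path)
        else:
            os.unlink(entry.path)  # regular files and symlinks
    return True
```

The guard only protects against deleting through a mount that is still active; the cleaner fix is, of course, to unmount first and then clean up what is left in the target.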

And the moral of the story is: probably none. But it highlights again that the automated test setup can have unexpected feedback on the test itself. Luckily so in this case, as the issue would not have been noticed otherwise.

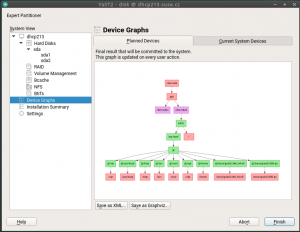

Computer, Enlarge the Device Graph

The partitioner module has a graphical view to help you see the relations in more complex storage setups:

If you turn the mouse scroll wheel up or down, the view zooms in or out. The direction now matches the behavior in web browsers and online maps; previously we had it the wrong way around.
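A minimal sketch of the corrected mapping, assuming Qt's `QWheelEvent::angleDelta()` convention (eighths of a degree, 120 per standard wheel notch, positive when the wheel is turned away from the user, i.e. "up"). The function name and the 1.15 zoom step per notch are made up for illustration; the partitioner's actual code may differ.

```python
def wheel_zoom_factor(angle_delta_y: int, step: float = 1.15) -> float:
    """Map a vertical wheel delta to a multiplicative zoom factor.

    Wheel up (positive delta) zooms in (factor > 1), wheel down zooms out
    (factor < 1), as in web browsers and online maps. Partial notches from
    high-resolution wheels give proportionally smaller steps.
    """
    notches = angle_delta_y / 120.0
    return step ** notches
```

Using an exponential of the notch count keeps zooming symmetric: one notch up followed by one notch down returns exactly to the original scale.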

Update: during proofreading, a teammate told me: "A device graph? That’s not a device graph. THAT’s a device graph:"

FOSDEM video hw: TFP401 capture test boards for everyone!

We now have kits for 4 more test systems: 2 for the FOSDEM office, 2 for the OpenFest guys. Here's a picture of them all:

Up top are two complete sets, with a TFP401 module and LCD; those are for Bulgaria. The bottom boards and the TFP401 module are for the FOSDEM office, where there is already one TFP401 module and a ton of BPI LCDs. I will be shipping these out later today.

To add to that, I now have a second complete test setup myself.

Mind you, this is still our test setup, allowing us to work on all bits from the capture engine downstream (video capture, KMS display, H.264 encoding). We will need our full setup with the ADV7611 to add HDMI audio to the mix, but 80-90% of the software and driver work can be done with the current test setup with TFP401 modules.

TFP401 EDID ROM

I have gone and flashed all the TFP401 modules with the tool I quickly threw together. I created a matching terminal cable to go from the Banana Pi M1 to the EDID connector on the board, and made one each for Uwe and the FOSDEM office (as they both have a module already). It turns out that this ROM is always writable, and that you could even write it through the HDMI connector. My. My.
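The post does not show the flashing tool. For illustration, one step any such tool needs is making sure the 128-byte EDID block it writes carries a valid checksum: all 128 bytes, including the final checksum byte, must add up to 0 modulo 256, and a base block starts with the fixed 8-byte header `00 FF FF FF FF FF FF 00`. The helper names below are hypothetical, and the actual I2C write (the EDID EEPROM normally sits at address 0x50 on the DDC bus) is left out.

```python
EDID_BLOCK_SIZE = 128
EDID_HEADER = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])


def edid_checksum_ok(block: bytes) -> bool:
    """An EDID block is valid when all 128 bytes sum to 0 modulo 256."""
    return len(block) == EDID_BLOCK_SIZE and sum(block) % 256 == 0


def fix_edid_checksum(block: bytes) -> bytes:
    """Return a copy of a base EDID block with its checksum (byte 127) recomputed."""
    if len(block) != EDID_BLOCK_SIZE:
        raise ValueError("EDID blocks are 128 bytes long")
    if block[:8] != EDID_HEADER:
        # Only the base block (block 0) carries this header; extension blocks do not.
        raise ValueError("not a base EDID block (missing fixed header)")
    body = block[:127]
    return body + bytes([(-sum(body)) & 0xFF])
```

Verifying the checksum before writing is cheap insurance, given that this ROM is always writable: a sink with a corrupt EDID is likely to be ignored or mis-driven by the source.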

Howto rework

Ever since our first successful capture, there has been a howto in the FOSDEM video hardware GitHub wiki. I have now walked through it again and updated some bits and pieces. Let's wait and see whether the others can make it work :)

Bulldozing the TFP401 backlight

The Adafruit TFP401 module gets quite warm, and especially the big diode in the backlight circuit seems to dissipate quite a lot of heat. It is usually too hot to touch. A USB amp meter told me that the module was drawing 615mW when HDMI was active. So I went and removed most of the backlight circuit components from the board, and now we're drawing only 272mW. Nice!

A look into the box we will be replacing.

FOSDEM's Mark Vandenborre posted a picture of some video boxes attached to a 48-port switch.

There are 58 of those today, so mentally multiply the pile under the switch by 10.

Marian Marinov from OpenFest (who will be getting a test board soon) actually has a picture of the internals of one of the slides boxes on his site:

This picture was probably taken during OpenFest 2018, so this is from just before we rebuilt all 29 slides boxes. One change is that this slides box lacks the IR LED that controls the scaler by playing PCM files :)

On the left, you can see the scaler (large green board) and the splitter (small green board). In the middle, from top to bottom, are the hardware H.264 encoder, the Banana Pi, and the status LCD. Then there's an ATX power supply, then, hidden under the rat's nest of cables, a small SSD, and then an Ethernet switch.

Our current goal is to turn that into:

- One 5V power supply.

- One Lime2 with an ADV7611 daughterboard.

- Keep the LCD.

- Keep the 4-port switch.

- Perhaps keep the SSD, or just store the local copy of the dump on the SD card (they are large and cheap now).

We would basically shrink everything to a quarter of the size, and massively drop the cost and complexity of the hardware. And we get a lot of extra control in return as well.

Support

Thanks a lot to the guys who donated through PayPal. Your support is much appreciated and was spent on some soldering supplies. If anyone wants to support this work: for larger donations, if you have a VAT number, drop me an email so I can produce a proper invoice. Or just use PayPal for small donations :)

Next up

Sunxi H.264 encoding is where it's at now. I will probably write a quick and dirty, cut-down encoder for now, as February is approaching fast and there is still a lot to do.

Noodlings 4 | MX Linux, Pine64, BDLL openSUSE News

Reasons why openSUSE is Fantabulous in 2019

FOSDEM's bespoke video hardware and software.

The string of hardware in front of the display (my 4:3 projector analogue, attached over HDMI) is:

* A status LCD, showing the live capture in the background (with 40% opacity)

* An Olimex Lime2 (red board) with our test board on top (green board).

* An Adafruit TFP401 HDMI to parallel RGB decoder board (blue board).

You can see the white HDMI cable running from the Lime2 HDMI out to the monitor. This old monitor is my test "projector"; the fact that it is 4:3 makes it a good test subject.

You can also see a black cable from the capture board to another blue board with a red LED. This is a Banana Pi M1, the SBC currently used in the FOSDEM video boxes, and I had one lying around anyway, doing nothing. It spews out a test image.

What you are seeing here is live captured data at 1280x720@60Hz, displayed on the monitor, and in the background of the status LCD, with a 1 to 2 frame delay.

Here is a close-up of the status LCD:

The text and logos in front are just mockups, the text will of course be made dynamic, as otherwise it would not be much of a status display.

And here is a close-up of the 1280x1024 monitor:

You will notice five 16x16 blocks: one in each corner, and one smack-dab in the middle. They encode their position on screen in the B and G components, and the frame count in the R component.

The utility that is running on the Lime2 board (fosdem-video-juggler) displays the captured frames on both the LCD and the monitor. It currently checks all four corners of all five blocks.
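Here is a sketch of how such markers could be drawn and checked. The exact encoding is not spelled out in the post, so this assumes each block is a solid colour with R holding the frame counter and G and B holding the block's x and y position divided by 16 (all modulo 256), and it interprets "all four corners" as the four corner pixels of each block. Frames are plain row-major lists of `(r, g, b)` tuples, and all names are hypothetical.

```python
BLOCK = 16


def marker_origins(width, height):
    """Top-left corners of the five 16x16 marker blocks: four corners plus centre."""
    return [
        (0, 0),
        (width - BLOCK, 0),
        (0, height - BLOCK),
        (width - BLOCK, height - BLOCK),
        ((width - BLOCK) // 2, (height - BLOCK) // 2),
    ]


def expected_colour(x0, y0, frame):
    # Assumed encoding: R = frame counter, G = x / 16, B = y / 16 (all mod 256).
    return (frame & 0xFF, (x0 // BLOCK) & 0xFF, (y0 // BLOCK) & 0xFF)


def draw_markers(pixels, width, height, frame):
    """Generator side (e.g. the test-image source): paint the five blocks."""
    for x0, y0 in marker_origins(width, height):
        colour = expected_colour(x0, y0, frame)
        for y in range(y0, y0 + BLOCK):
            for x in range(x0, x0 + BLOCK):
                pixels[y * width + x] = colour


def check_markers(pixels, width, height, frame):
    """Capture side: check the four corner pixels of every block, return mismatches."""
    bad = []
    for x0, y0 in marker_origins(width, height):
        want = expected_colour(x0, y0, frame)
        x1, y1 = x0 + BLOCK - 1, y0 + BLOCK - 1
        for x, y in ((x0, y0), (x1, y0), (x0, y1), (x1, y1)):
            got = pixels[y * width + x]
            if got != want:
                bad.append((x, y, got, want))
    return bad
```

Checking only block corners keeps the per-frame cost tiny, while still catching shifted, cropped, torn or dropped frames: a shift moves the corners off the blocks, and a skipped frame shows up as an unexpected counter value in R.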

At 1920x1080, a 24h run showed about 450 pixels being off. Those were all at the start of the frame (pixels 0,0 and 1,0), as this is where our temporary TFP401 board has known issues. This should be fixed when moving to the ADV7611 decoder.

The current setup, and the target resolution for FOSDEM, is 1280x720 (with a very specific modeline for the TFP401). Not a single pixel has been off in an almost 40h run. Not a single one in 8.6 million frames.
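As a rough cross-check of those numbers (assuming a steady 60 frames per second and 3 bytes per pixel for full 24-bit capture, with no blanking data stored), the frame count and the raw data volume work out as follows:

$$
\begin{aligned}
N &= 40\,\mathrm{h} \times 3600\,\mathrm{s/h} \times 60\,\mathrm{frames/s} = 8.64 \times 10^{6}\ \text{frames} \\
S_{\text{frame}} &= 1280 \times 720 \times 3\,\mathrm{B} = 2\,764\,800\,\mathrm{B} \\
S &\approx 8.6 \times 10^{6} \times 2\,764\,800\,\mathrm{B} \approx 2.38 \times 10^{13}\,\mathrm{B} \approx 21.6\,\mathrm{TiB}
\end{aligned}
$$

This lines up with the "about 21.6TB" given in the Current status section below if that figure is read in binary units (TiB); in decimal units it is roughly 23.8TB.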

The starry-eyed plan

FOSDEM has 28 parallel tracks, all streamed out live. Almost 750 talks over two days, all streamed out live. Nobody does that, period. What drives this, besides a lot of blood, sweat and tears, are 28 speaker/slides video boxes and 28 camera video boxes, plus spares. They store the capture and stream it over the network to be re-encoded on one refurbished laptop per track, which then streams out live. The video box stream also hits servers, where it is marshalled in for final encoding after devroom manager/speaker review. The livestreams are just a few minutes behind; when devrooms are full, people watch the livestream just outside the closed-off devroom. As a devroom manager, I find the review experience pretty slick, with review emails arriving minutes after talks are over and videos being done in the next hour or so after that.

The video boxes themselves hold an ingenious mix of an HDMI scaler, an active HDMI splitter, a hardware H.264 encoder (don't ask), a Banana Pi M1 (don't ask), a small SSD, an Ethernet switch, and an ATX PSU, all contained in a big, heavy, laser-cut wooden box. It's full of smart choices, and it does what it should do, but it is far from ideal.

There are actually quite a few brilliant bits going on in there, like the IR LED that controls the HDMI scaler's OSD menu. That LED is connected to the headphone jack of the Banana Pi, and Gerry has hacked up PCM files to be able to set scaling resolutions. Playing audio to step through an OSD menu! Gerry even 3D printed small special brackets that fit in a USB connector, to hold the IR LED in place. Insane! Brilliant! But a sign that this whole setup is far from ideal.

So last November, during the video team meetup, we mused about a better solution: if we get HDMI and audio captured straight by the Allwinner SoC, then we can drive the projector directly from the HDMI out, and use the on-board H.264 encoder to convert the captured data into a stream that can be sent out over the network. This would give us full control over all aspects of video capture, display and encoding, and the whole thing would be done in OSHW, with full open source software (and mainline support). Plus, the whole thing would be a lot cheaper, a lot smaller, and thus easier to handle, and we would be able to have a ton of hot spares around.

Hashing it out

During the event, in the rare bits of free time, we were able to discuss this plan further. We also had a chat with Tsvetan from Olimex, another big fan of FOSDEM, about what could be done for producing the resulting hardware, what restrictions there are, how much lead time would be needed, etc.

Then, on Monday, Egbert Eich, Uwe Bonnes and I had our usual "we've been thrown out of our hotels, but still have a few hours to kill before the ICE drives us back" brunch. When I spoke about the plan, Uwe's face lit up and he started to ask all sorts of questions. Designing and prototyping electronics is his day job at the university in Darmstadt, and he offered to design and even prototype the necessary daughterboard. We were in business!

We decided to stick with the known and trusted Allwinner A20, with its extensive mainline support thanks to the large linux-sunxi community.

For the SBC, we quickly settled on the Olimex Lime2, as it just happened to expose all the pins we needed. Namely, LCD0, CSI1 (Camera Sensor Interface, and most certainly not MIPI-CSI, thanks Allwinner), I2S input and some I2C and interrupt lines. As are most things Olimex, it's fully OSHW. A quick email to Tsvetan from Olimex, and we had ourselves a small pile of Lime2 boards and supporting materials.

After a bit of back and forth, we chose the Analog Devices ADV7611 for a decoder chip, an actual HDMI to parallel RGB + I2S decoder. This is a much more intelligent and powerful converter than the rather dumb (no runtime configuration), and much older, TFP401, which actually is a DVI decoder. As an added bonus, Analog Devices is also a big open source backer, and the ADV7611 has fully open documentation.

Besides FOSDEM, Uwe also makes a point of visiting Embedded World here in Nuernberg. So just 2 weeks after FOSDEM, Uwe and I were able to do our first bit of napkin engineering in a local pub here :)

Then, another stroke of luck... While googling for more information about the ADV7611, I stumbled upon the Videobrick project. Back in 2014/2015, when I was not paying too much attention to linux-sunxi, Georg Ottinger and Georg Lippitsch had the same idea, and reached the same conclusions (Olimex Lime1, ADV7611). We got in contact, and Georg (Ottinger ;)) even sent us his test board. They had a working test board, but could not get the timing right, and tried to work around it with a hardware hack. Real life also kicked in, and the project ended up losing steam. We will reuse their schematic as the basis for our work, as they also made it OSHW, so their work will definitely live on :)

In late April, FOSDEM core organizers Gerry and Mark, and Uwe and I met up in Frankfurt, and we hashed out some further details. Now the plan included an intermediate board, which connects to the rudimentary TFP401 module, so we could get some software and driver work started, and verify the functionality of some hw blocks.

Back then, I was still worried about the bandwidth limitations of the Allwinner CSI1 engine and the parallel RGB bus. If we could not reliably get 1280x720@60Hz with a reasonable colour space, then the A20, and probably the whole project, was not viable.

720p only?

Mentioning 1280x720@60Hz probably got you worried now. Why not full HD? Well, 1280x720 is plenty for our use case. Speakers are liable to make very poor slide design choices. Sometimes slides are totally unsuited for display on projectors, and text often cannot be read across the room. FOSDEM also does not have the budget to rent top-of-the-line projection equipment for every track; we have to use what is present at the university. So 720p already gives speakers far too many pixels to play with.

Then, the Sony cameras that FOSDEM rents (FOSDEM owns one itself) for filming the speaker from across the room also do 1280x720, but at 30/25Hz. So 720p is what we need.

Having said that, we have in the meantime confirmed that the A20 can reliably handle full HD at 60Hz at full 24-bit colour. With one gotcha... This is starting to eat into our available bus and DRAM bandwidth, and the bursty nature of both the capture and the scaler on each display pipe makes the display glitchy with the current memory/bus/engine timing. So while slides will be 720p, we can easily support full HD for the camera, as there is no projector attached there, or when we are not scaling.
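To put rough numbers on that bandwidth concern, here is a back-of-the-envelope sketch. It assumes 3 bytes per pixel (the planar 24-bit capture described in this post), counts only active pixels, and treats every display scanning out the same buffer as one extra full read; scaler, 2D engine and encoder traffic and blanking are ignored, so real numbers will be higher. Function names are hypothetical.

```python
def capture_bytes_per_second(width: int, height: int, fps: int, bytes_per_pixel: int = 3) -> int:
    """DRAM write bandwidth needed just to capture the active pixels."""
    return width * height * bytes_per_pixel * fps


def rough_dram_traffic(width: int, height: int, fps: int, display_readers: int) -> int:
    """Capture writes each frame once; each display scanning it out reads it once more."""
    return capture_bytes_per_second(width, height, fps) * (1 + display_readers)


if __name__ == "__main__":
    for w, h in ((1280, 720), (1920, 1080)):
        mb_s = rough_dram_traffic(w, h, 60, display_readers=2) / 1e6
        print(f"{w}x{h}@60: ~{mb_s:.0f} MB/s (capture + projector + status LCD)")
```

Going from 720p to 1080p multiplies the pixel count, and therefore all of this traffic, by 2.25, before any scaling work on top.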

Intermediate setup

Shortly after our Frankfurt meetup, Uwe designed and made the test boards. As said, this test board and the TFP401 module are just temporary hardware to enable us to get the capture and KMS drivers in order, and to get started on H.264 encoding. The H.264 encoding was reverse engineered as part of the cedrus project (Jens Kuske, Manuel Braga, Andreas Baierl), but no driver is present for this yet, so there's still quite a bit of work there.

The TFP401 is pretty picky when it comes to supported modes. It often messes up the start of a frame, a fact that I first noticed on an Olimex 10" LCD that I connected to it to figure out signal routing. The fact that our capture engine does not know about Display Enable does not make things any better either. You can deduce from the heatsink in the picture that the TFP401 also gets warm. None of that matters for the final solution, as we will use the ADV7611 anyway.

Current status

We currently have the Banana Pi preloading a specific EDID block with a TFP401-specific modeline. Our CSI1 driver then presets its values to work with the TFP401 correctly (in the future, we will get that info from the ADV7611). With those settings, we have CSI capture running reliably, and we are showing it reliably on both the status LCD and the "projector". Not a single pixel has been lost in a ~40h run, with about 21.6TB of raw pixel data transferred, or 8.6 million frames. (I then lost 3 frames, as I logged into the BPi and ran apt-get upgrade; wait and see how we work around load issues and KMS in the future.)

The Allwinner A20 capture engine has parallel RGB (full 24-bit, mind you), a pixel clock, and two sync signals. It does not have a display enable line, and needs to be told how many clocks behind sync the data starts. This is what confused the Videobrick guys, and it forces us to work around the TFP401's limitations.
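The "how many clocks behind sync" value comes straight out of the video timing. As an illustration only: the TFP401-specific modeline is not given in the post, so the sketch below uses the standard CEA-861 1280x720@60Hz timing (74.25MHz pixel clock, 1650x750 total). Whether the CSI1 hardware counts from the leading or the trailing edge of the sync pulse is an assumption here, so both values are computed. The class and method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Modeline:
    """X11-style modeline fields: horizontal values in pixel clocks, vertical in lines."""
    pixclock_khz: int
    hdisplay: int
    hsync_start: int
    hsync_end: int
    htotal: int
    vdisplay: int
    vsync_start: int
    vsync_end: int
    vtotal: int

    def refresh_hz(self) -> float:
        return self.pixclock_khz * 1000 / (self.htotal * self.vtotal)

    def h_start_from_sync_leading_edge(self) -> int:
        # Clocks from the start of hsync to the first active pixel: sync width + back porch.
        return self.htotal - self.hsync_start

    def h_back_porch(self) -> int:
        # Clocks from the end of hsync to the first active pixel.
        return self.htotal - self.hsync_end

    def v_start_from_sync_leading_edge(self) -> int:
        return self.vtotal - self.vsync_start

    def v_back_porch(self) -> int:
        return self.vtotal - self.vsync_end


# Standard CEA-861 720p60 timing, NOT the TFP401-specific modeline from the post.
CEA_1280x720_60 = Modeline(74250, 1280, 1390, 1430, 1650, 720, 725, 730, 750)
```

With a decoder that exposes a display enable signal (or reports its detected timing, as the ADV7611 can), these offsets no longer have to be hardcoded per mode.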

Another quirk of the design of the capture engine is that it uses one FIFO per channel, and each FIFO outputs to a separate memory address, with no interleaving possible. This makes our full 24-bit capture a rather unique planar RGB888, or R8G8B8 as I have been calling it. This is not as big an issue as you would think. Any colour conversion engine (we have 2 per display pipe, and at least one in the 2D engine) can do a planar to packed conversion for all of the planar YUV formats out there. The trick is to use an identity matrix for the actual colour conversion, so that the subpixel values go through unchanged.
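A small software model of that trick, for illustration: the three CSI1 output planes are fed in as if they were the three planes of a full-resolution 4:4:4 planar format, and a colour space conversion with an identity matrix and zero offsets then simply interleaves them into packed RGB888. The functions are hypothetical; on the A20 this job belongs to the display or 2D engine's conversion hardware, not the CPU.

```python
IDENTITY = ((1, 0, 0), (0, 1, 0), (0, 0, 1))


def csc(pixel, matrix=IDENTITY, offset=(0, 0, 0)):
    """Apply a 3x3 colour space conversion matrix plus offset, clamped to 0..255."""
    return tuple(
        max(0, min(255, int(round(sum(m * c for m, c in zip(row, pixel)) + off))))
        for row, off in zip(matrix, offset)
    )


def planar_to_packed(r_plane: bytes, g_plane: bytes, b_plane: bytes, matrix=IDENTITY) -> bytes:
    """Read three separate 8-bit planes (as CSI1 writes them) and emit packed RGB888.

    The hardware would see the planes as "Y", "U" and "V"; with the identity
    matrix each output pixel is just (plane0[i], plane1[i], plane2[i]).
    """
    out = bytearray()
    for pixel in zip(r_plane, g_plane, b_plane):
        out.extend(csc(pixel, matrix))
    return bytes(out)
```

Any other matrix in the same position would turn this into a real colour conversion, which is why the identity matrix is the key detail.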

It was no surprise, though, that neither V4L2 nor DRM/KMS currently knows about R8G8B8 :)

What you see working above already took all sorts of fixes all over the sunxi mainline code. I have collected about 80 patches against the 5.2 mainline kernel. Some are very board specific, some are new functionality (CSI1, sprites as KMS planes), but there are also some fixes to DRM/KMS, and some rather fundamental improvements to dual display support in the sun4i KMS driver (like not driving our poor LCD at 154Hz). A handful of those are ready for immediate submission to mainline, and the rest will be sent upstream in time.

I've also learned that "atomic KMS" is not really atomic, and instead is "batched" (oh, if only I had been allowed to follow my Nokia team to Intel back in 2011). This forces our userspace application to be a highly threaded affair.

The fundamental premise of our userspace application is that we want to keep all buffers in flight. At no point should raw buffers be duplicated in memory, let alone hit storage. Only the h.264 encoded stream should be sent to disk and network. This explains the name of this userspace application; "juggler". We also never want to touch pixel data with a CPU core, or even the GPU, we want the existing specialized hardware blocks to take care of all that (display, 2d engine, h.264 encoder), as they can do so most efficiently.

This juggler currently captures a frame through V4L2, then displays that buffer on the "projector" and on the status LCD, as overlays or "planes", at the earliest possible time. It then also tests the captured image for those 5 marker blocks, which is how we know that we are pixel perfect.
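The "keep all buffers in flight" idea can be modelled as reference counting over a fixed pool of capture buffers: each captured buffer is shared (not copied) by every consumer, and only goes back to the capture engine once the last consumer has let go. This is a toy, single-threaded model with hypothetical names; the real juggler is threaded and works with V4L2 and KMS buffer handles, which are not shown here.

```python
from collections import deque


class BufferJuggler:
    """Toy model: a fixed pool of capture buffers shared by several consumers."""

    def __init__(self, n_buffers, consumers):
        self.free = deque(range(n_buffers))  # buffers currently owned by the capture engine
        self.consumers = tuple(consumers)
        self.holders = {}                    # buffer index -> consumers still using it

    def on_frame_captured(self):
        """Capture filled the next free buffer; hand that same buffer to every consumer."""
        if not self.free:
            return None  # no buffer left: capture would stall or drop a frame
        buf = self.free.popleft()
        self.holders[buf] = set(self.consumers)
        return buf

    def release(self, buf, consumer):
        """A consumer (projector plane, LCD plane, checker, encoder...) is done with buf."""
        self.holders[buf].discard(consumer)
        if not self.holders[buf]:
            del self.holders[buf]
            self.free.append(buf)  # requeue to capture, in V4L2 terms a VIDIOC_QBUF
```

The pool size bounds the memory use, and any consumer that holds on to buffers for too long (a slow encoder, a display still scanning out) eventually shows up as capture having no free buffer.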

There is a one to two frame delay between HDMI input and HDMI output: one frame, as we need to wait for the capture engine to complete the capture of a frame, and up to one more frame until the target display is done scanning out the previous frame. While we could start guessing where the capture engine is in a frame, and could tie that to the line counter in the display engine, it is just not worth it. 1-2 frames, or a 16.67-33.33ms delay, is more than good enough for our purposes. The delay on the H.264 stream is only constrained by the amount of RAM we have, as we do want to release buffers back to the capture engine at some point ;)

Current TODO list.

Since we have full R8G8B8 input, I will have to push said buffer through the 2D engine (2D engines are easy, just like overlays), so that we can feed NV12 to the H.264 encoder. This work will be started now. I also still need to implement HDMI hotplug, both on the input side (there's an interrupt for that) and on the output side.
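For checking the 2D engine's output, a CPU reference conversion can be handy (the juggler itself is meant to leave pixels to the hardware). This sketch assumes 8-bit BT.601 limited-range coefficients in their common integer form and simple 2x2 averaging for chroma; which matrix and chroma siting the A20 2D engine and the H.264 encoder actually use is not stated in the post. NV12 is a full-resolution Y plane followed by a half-height plane of interleaved U and V bytes.

```python
def rgb_to_yuv601(r, g, b):
    """8-bit BT.601 limited-range conversion (widely used integer approximation)."""
    y = ((66 * r + 129 * g + 25 * b + 128) >> 8) + 16
    u = ((-38 * r - 74 * g + 112 * b + 128) >> 8) + 128
    v = ((112 * r - 94 * g - 18 * b + 128) >> 8) + 128
    return y, u, v


def rgb_to_nv12(pixels, width, height):
    """pixels: row-major list of (r, g, b) tuples; width and height must be even."""
    if width % 2 or height % 2:
        raise ValueError("NV12 needs even dimensions")
    y_plane = bytearray(width * height)
    uv_plane = bytearray(width * height // 2)  # height/2 rows of width bytes (U, V pairs)
    for row in range(height):
        for col in range(width):
            y_plane[row * width + col] = rgb_to_yuv601(*pixels[row * width + col])[0]
    for row in range(0, height, 2):
        for col in range(0, width, 2):
            # Average the 2x2 block in RGB, then convert once for its chroma sample.
            quad = [pixels[(row + dy) * width + col + dx] for dy in (0, 1) for dx in (0, 1)]
            r, g, b = (sum(p[i] for p in quad) // 4 for i in range(3))
            _, u, v = rgb_to_yuv601(r, g, b)
            i = (row // 2) * width + col  # col is even, so this is pair index * 2
            uv_plane[i] = u
            uv_plane[i + 1] = v
    return bytes(y_plane + uv_plane)
```

Comparing a few hardware-converted frames against this reference (allowing for small rounding differences) would catch swapped planes or a wrong matrix early.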

The status text on the status LCD is currently a static PNG, as it is just a mockup. This too will become dynamic. The logo will become configurable. This, and other non-driver work, can hopefully be done by some of the others involved with FOSDEM video.

Once Uwe is back from vacation, he will start work on the first version of the full ADV7611 daughterboard. Once we have that prototyped, we need to go tie the ADV7611 into the CSI1 driver, and provide a solid pair of EDID blocks, and we need to tie I2S captured audio into the h.264 stream as well.

Lots of highly important work to be done in the next 4 months.

Where is this going?

We should actually not get ahead of ourselves too much. We still need to get close to not losing a single pixel at FOSDEM 2020. That's already a pretty heady goal.

Then, for FOSDEM 2021, we will work towards XLR audio input and output, and towards only needing a single Ethernet cable routed across a room (syncing up audio between camera and speaker boxes, instead of centralizing audio at the camera). We will add a 2.54mm pitch connector on top of the current HDMI capture daughterboard to support that audio daughterboard.

Beyond that, we might want to rework this multi-board affair to make an all-in-one board with the gigabit ethernet phy replaced with a switch chip, for a complete, single board OSHW solution. If we then tie in a small li-ion battery, we might be able to live without power for an hour or so. One could even imagine overflow rooms, where the stream of one video box is shown on another video box, all using the same hw and infrastructure.

All this for just FOSDEM?

There seems to be a lot of interest from several open source and hacker conferences. The core FOSDEM organizers are in contact with CCC and DebConf over voctomix, and they also went to FOSSASIA and FrOSCon. Two other crucial volunteers, Vasil and Marian, are core organizers of Sofia's OpenFest and Plovdiv's TuxCon, and they use the existing video boxes already.

So unless we hit a wall somewhere, this hardware, with the associated driver support, userspace application, and all the rest of the FOSDEM video infrastructure will likely hit an open source or hacker conference near you in the next few years.

Each conference is different though, and this is where complete openness, both on the hardware side, and on all of the software solutions needed for this, comes in. The hope is that the cheap hardware will just be present with the organization of each conference, and that just some configuration needs to happen for this specific conference to have reliable capture and streaming, with minimal effort.

Another possible use of this hardware, and especially the associated driver support, is of course a generic streaming solution that is open source, dependable and configurable. It is much smarter to work with this hardware than to try to reverse engineer IT9919-style devices, for instance (usually known as HDMI extenders).

Then, with the openness of the ADV7611, and the more extensive debugfs support that I fear it is bound to grow soon, this hardware will also allow for automated display driver testing. Well, at least up to 1080p60Hz HDMI 1.4a. There actually was a talk in my devroom about this a while ago (which talked about one of those HDMI extenders).

So what's the budget for this?

Nothing. FOSDEM is free to attend, and has a tiny budget. The biggest free/open source software conference on the planet, with its mad streaming infrastructure, spends less per visitor than said visitor will spend on public transportation in Brussels over the weekend. Yes, if you take a cab from the city center to the venue, once, you will already have spent way more than the budget that FOSDEM has allocated for you.

FOSDEM is a full volunteer organization, nobody is paid to work on FOSDEM, not even the core organizers. Some of their budget comes from corporate sponsors (with a limit on what they can spend), visitors donating at the event, and part of the revenue of the beer event. This is what makes it possible for FOSDEM to exist as it does, independent of large corporate sponsors who then would want a controlling stake. This is what makes FOSDEM as grass roots and real as it is. This is why FOSDEM is that great, and also, this is why FOSDEM has endured.

The flip side of that is that crucial work like this cannot be directly supported. And neither should it be, as such a thing would quickly ruin FOSDEM: politics would take hold and bickering over money would start. I do get to invoice the hardware I needed to buy for this, but between Olimex's support and the limited bits that you see there, that's not exactly breaking the bank ;)

With the brutal timeline we have though, it is near impossible for me, a self-employed consultant, to take on paid work for the next few months. Even though recruiting season is upon us again, I cannot realistically accept work at this time, not without killing this project in its tracks. If I do not put in the time now, this whole thing is just not going to happen. We've now gone deep enough into this rabbit hole that there is no way back, but there is a lot of work to be done still.

Over the past few months I have had to field a few questions about this undertaking. Statements like "why don't you turn this into a product you can sell" are very common. And my answer is always the same: neither selling the hardware, nor selling the service, nor selling the whole solution is a viable business. Even if we did not do OSHW, the hardware is easily duplicated, so there is no chance for any real revenue, let alone margin, there. Selling a complete solution or trying to run it as a service quickly devolves into a team of people travelling the globe for two times 4 months a year (conference season). That means carting people and hardware around the globe, dealing with customs, fixing and working around endless network issues, adjusting to individual conferences' needs as to what the graphics on the streams should look like, then dealing with stream post-production for weeks after each event. That's not going to help anyone or anything either.

The only way for this to work is for the video capture hardware, the driver support, the "juggler", and the associated streaming infrastructure to be completely open.

We will only do a limited run of this hardware, to cover our own needs, but the hardware is and will remain OSHW, and anyone should be able to pay a company like Olimex to make another batch. The software is all GPLv2 (u-boot/kernel) and GPLv3 (juggler). This will simply become a tool that conference organizers can deploy easily and adjust as needed, but this also makes conferences responsible for their own captures.

This does mean that the time spent on writing and fixing drivers, writing the juggler tool, and designing the hw, all cannot be directly remunerated in a reasonable fashion.

Having said that, if you wish to support this work, I will happily accept PayPal donations (at libv at skynet dot be). If you have a VAT number and wish to donate a larger amount, I can provide proper invoices for my time. Just throw me an email!

This would not be a direct donation to FOSDEM though, and I am not a core FOSDEM organizer, nor do I speak for the FOSDEM organization. I am just a volunteer who has been writing graphics drivers for the past 16 years and who is, first and foremost, a devroom manager (since 2006). I am "simply" the guy who provides a solution to this problem and who makes this new hardware work. You will, however, be indirectly helping FOSDEM, and probably every other free/open source/hacker conference out there.

Lots of work ahead though. I will keep you posted when we hit our next milestones, hopefully with a less humongous blog entry :)

Noodlings 3 | Commander X16, BDLL and openSUSE News

Highlights of YaST Development Sprint 84

The YaST Team finished yet another development sprint last week and we want to take the opportunity to let you all glance over the engine room to see what’s going on.

Today we will confess an uncomfortable truth about how we manage the Qt user interface, show you how we organize our work (or at least how we try to keep the administrative part of it under control), and give you a sneak peek at some upcoming YaST features and improvements.

Let’s go for it!

There Be Dragons: YaST Qt UI Event Handling

The YaST Qt UI is different in the way that it uses Qt. Normal Qt applications are centered around a short main program that, after initializing widgets, passes control to the Qt event loop. Not so YaST: it is primarily an interpreter for the scripts (today in Ruby, in former times in YCP) that are executed for the business logic. Those scripts, among other things, also create and destroy widget trees. But the control flow is in the script, not in a Qt event loop. So YaST uses different execution threads to handle both sides: graphics system events for the Qt widgets and the control flow from the scripts.
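To make that inversion of control concrete, here is a toy model of the general pattern in Python: one thread runs an event loop that owns the "widgets", while the script runs in another thread, owns the control flow, and asks the UI thread to do things and wait for user input. It is purely illustrative and hypothetical; it is not how the libyui Qt frontend is implemented (that is C++ on top of Qt), and it does not explain why `QEventLoop::wakeUp()` helped.

```python
import queue
import threading


class ToyUiThread:
    """UI side: an event loop that only does what the script thread asks for."""

    def __init__(self, scripted_user_events):
        self.requests = queue.Queue()  # script thread -> UI thread
        self.replies = queue.Queue()   # UI thread -> script thread
        self.user_events = iter(scripted_user_events)  # stand-in for real clicks
        self.widgets = []

    def event_loop(self):
        while True:
            # Blocks until the script posts a request; the put() is what wakes it up.
            kind, arg = self.requests.get()
            if kind == "quit":
                return
            if kind == "open_dialog":
                self.widgets.append(arg)
                self.replies.put(None)
            elif kind == "user_input":
                self.replies.put(next(self.user_events))

    def call(self, kind, arg=None):
        """Called from the script thread; blocks until the UI thread answers."""
        self.requests.put((kind, arg))
        return self.replies.get()


def script(ui):
    """Script side: the control flow lives here, not in the event loop."""
    ui.call("open_dialog", "countdown")
    answer = ui.call("user_input")
    ui.requests.put(("quit", None))
    return answer


if __name__ == "__main__":
    ui = ToyUiThread(["ok"])
    loop = threading.Thread(target=ui.event_loop)
    loop.start()
    print(script(ui))
    loop.join()
```

The fragile part in any such design is the hand-over: if the event loop ends up blocked somewhere the script's request does not reach, nothing wakes it, and the UI appears frozen.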

This was always quite nonstandard, and we always needed to do weird things to make it happen. We always kind of misused Qt to hammer it into shape for that. And we always feared that it might break with the next Qt release, and that we might have a hard time to make it work again.

This time has now come with bug#1139967, but we were lucky enough to find a Qt call to bring it back to life: a QEventLoop::wakeUp() call fixed it. We don't quite know (yet) why, though. Any hint, anyone?

Should we even tell you that? Well, we think you deserve to know. After all, it worked well for about 20 years (!)… and now it’s working again.

The Refactoring of YaST Network Keeps Going

What is still not working that well is the revamped network management. We have been working on it during the latest sprint, but it will take at least another one before it's stable enough to be submitted to openSUSE Tumbleweed.

Which parts have we been working on during this sprint? Glad you asked! :wink: We are cleaning up the current mess in the wireless configuration. Soon that part will be more intuitive and consistent with other network types. We are also making sure we propose meaningful defaults for new cards added to the network configuration (all types of cards, not only wireless). The functionality to configure udev so that network interfaces get stable names has also received some love; the new version is more stable and flexible. And last but not least, we are improving device activation on s390 systems, making it more straightforward in the code and clearer in the user interface.
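For readers unfamiliar with the mechanism: a stable interface name is usually obtained with a udev rule that matches a card by a persistent attribute (its MAC address or its bus ID) and assigns it a fixed `NAME`. The Python sketch below builds such a rule line in the style of `70-persistent-net.rules` entries. `persistent_net_rule` is a hypothetical helper, and the rules YaST actually writes may use a different set of keys.

```python
def persistent_net_rule(name, mac=None, bus_id=None):
    """Return a udev rule line pinning a network interface name.

    Hypothetical helper: it matches a card either by MAC address or by
    bus ID, in the style of 70-persistent-net.rules entries.
    """
    if (mac is None) == (bus_id is None):
        raise ValueError("pass exactly one of mac or bus_id")
    keys = ['SUBSYSTEM=="net"', 'ACTION=="add"', 'DRIVERS=="?*"']
    if mac is not None:
        # ATTR{address} compares against the MAC address exposed in sysfs.
        keys.append(f'ATTR{{address}}=="{mac.lower()}"')
    else:
        # KERNELS matches the bus ID of the device or one of its parents.
        keys.append(f'KERNELS=="{bus_id}"')
    keys.append('ATTR{type}=="1"')  # 1 = ARPHRD_ETHER (Ethernet-like links)
    keys.append(f'NAME="{name}"')
    return ", ".join(keys)


print(persistent_net_rule("eth0", mac="52:54:00:AB:CD:EF"))
print(persistent_net_rule("eth1", bus_id="0000:00:03.0"))
```

Matching by bus ID keeps the name tied to a slot even if the card is swapped, while matching by MAC keeps it tied to the card itself; which one is preferable depends on the setup.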

If everything goes as planned, by the end of the next sprint we will be ready to submit the improved YaST Network to Tumbleweed. That will be the right time for a dedicated blog post with screenshots and further explanations of all the changes.

Enhancing the Partitioner Experience with Encrypted Devices

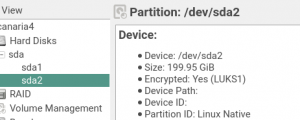

And talking about ongoing work, we are currently broadening the set of technologies and use cases the Partitioner supports when it comes to data encryption. As with the network area, the big headlines in that regard will have to wait for future blog posts. But if you look closely enough at the user interface of the Partitioner available in Tumbleweed, you can start spotting some small changes that anticipate the upcoming features.

The first change is very subtle. When visualizing the details of an encrypted device, next to the previously existing “Encrypted: Yes” text you will now be able to see the concrete type of encryption. For all devices encrypted using YaST, that type will always be LUKS1 since that’s the only format that YaST has supported… so far. :wink:
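How can a tool tell the concrete type? Both LUKS1 and LUKS2 headers start with the same six-byte magic (`LUKS` followed by `0xba 0xbe`) and then a big-endian 16-bit version number. A minimal Python sketch of that check follows. It is only an illustration of the on-disk format, not the code YaST uses, and `luks_type`/`device_luks_type` are hypothetical names.

```python
import struct

# 6-byte magic at offset 0 of both LUKS1 and LUKS2 primary headers.
LUKS_MAGIC = b"LUKS\xba\xbe"


def luks_type(header):
    """Return "LUKS1" or "LUKS2" from the first bytes of a device, else None."""
    if len(header) < 8 or not header.startswith(LUKS_MAGIC):
        return None
    # The magic is followed by a big-endian unsigned 16-bit format version.
    (version,) = struct.unpack(">H", header[6:8])
    return {1: "LUKS1", 2: "LUKS2"}.get(version)


def device_luks_type(path):
    # Reading a real block device (e.g. a partition) usually requires root.
    with open(path, "rb") as dev:
        return luks_type(dev.read(8))
```

For example, `device_luks_type("/dev/sda2")` would return `"LUKS1"` for a partition encrypted by YaST so far, and `None` for an unencrypted one.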

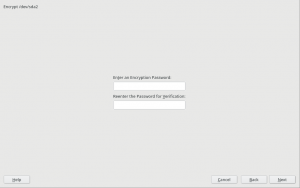

Some other small changes are visible when editing an encrypted device. If such a device was not originally encrypted in the system, nothing changes apart from minor adjustments in the labels. The user simply sees a form with an empty field to enter the password.

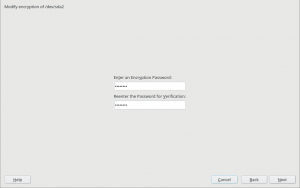

When a device that was already marked for encryption during the current execution of the Partitioner is edited a second time, the form is now prefilled with the password entered before. In the past, the previous encryption layer was ditched (so its password and other arguments were forgotten) and the user had to define the encryption again from scratch. That will become even more relevant soon, when the encryption form becomes more than just a password field. Stay tuned for news in that regard.

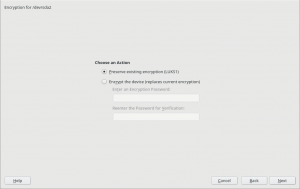

Moreover, when editing a device that is already encrypted in the system, an option is offered to just use the existing encryption layer instead of replacing it with a (likely more limited) encryption created by the Partitioner.
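The three cases described above (never encrypted, encrypted earlier in the same session, already encrypted in the system) boil down to a small decision about the initial state of the form. The Python model below is a hypothetical sketch of that logic: `Encryption`, `Device` and `initial_form` are invented names, and the Partitioner's real implementation is Ruby code with its own structure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Encryption:
    method: str              # e.g. "LUKS1"
    exists_in_system: bool   # found on disk vs. planned in this session
    password: str = ""       # only known for layers planned in this session


@dataclass
class Device:
    name: str
    encryption: Optional[Encryption] = None


def initial_form(device):
    """Initial state of a hypothetical "encrypt device" form."""
    enc = device.encryption
    if enc is None:
        # Never encrypted: just an empty password field.
        return {"password": "", "offer_keep_existing": False}
    if not enc.exists_in_system:
        # Marked for encryption earlier in this session: keep the planned
        # layer and prefill the form instead of starting from scratch.
        return {"password": enc.password, "offer_keep_existing": False}
    # Already encrypted on disk: offer reusing the existing layer.
    return {"password": "", "offer_keep_existing": True}


print(initial_form(Device("/dev/sda2")))
print(initial_form(Device("/dev/sda2", Encryption("LUKS1", False, "s3cret"))))
print(initial_form(Device("/dev/sda3", Encryption("LUKS1", True))))
```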

Apart from opening the door for more powerful and relevant changes in the area of encryption, these changes represent an important usability improvement by themselves.

Tidying up the YaST Team’s Trello Board

As you can see in this report and in all the previous ones, the YaST Team works constantly on many different areas like installation, network management, storage technologies… you name it. We use Trello to organize all that work. For each bug in Bugzilla or feature in Jira there is a corresponding Trello card. As it happens, when a bug is closed, sometimes nobody remembers to update its Trello card.

A check with ytrello revealed that, out of a total of 900-something cards, about 500 were outdated and could be closed. More than half! Why so many?

We found that quite a number of these were open cards in closed (archived) lists. So when you archive a list, don't forget to archive its cards first. We have just learned that Trello does not do this automatically. That's exactly why the Trello user interface offers an Archive All Cards in This List... menu item besides Archive This List.
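The kind of check that finds these leftovers can be sketched in a few lines of Python. This is not how ytrello is implemented; `stale_cards` is a hypothetical helper, the input is assumed to follow the shape of a Trello board JSON export (lists and cards carrying `id`, `idList`, `name` and a boolean `closed` field), and the sample data is made up.

```python
def stale_cards(board):
    """Return the names of open cards that sit in archived (closed) lists."""
    closed_lists = {lst["id"] for lst in board["lists"] if lst["closed"]}
    return [
        card["name"]
        for card in board["cards"]
        if not card["closed"] and card["idList"] in closed_lists
    ]


# Made-up sample board: one archived list, one active list.
board = {
    "lists": [
        {"id": "L1", "name": "Done (old sprint)", "closed": True},
        {"id": "L2", "name": "In progress", "closed": False},
    ],
    "cards": [
        {"name": "card A", "idList": "L1", "closed": False},  # forgotten
        {"name": "card B", "idList": "L1", "closed": True},   # properly archived
        {"name": "card C", "idList": "L2", "closed": False},  # still active
    ],
}
print(stale_cards(board))  # ['card A']
```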

Back to work!

This has been a summer of promises on our side. We told you we are working to improve our user interface library (libYUI), revamping the code to manage the network configuration, extending the support for encryption… which means we have a lot of work to finish.

So let us go back to work while you do your part – having a lot of fun!

EndlessOS | Review from an openSUSE User

openSUSE OBS git mirror

There was some discussion about our OBS and how, in contrast, Gentoo, VoidLinux and Fedora use git to track packages.

So I made an experimental openSUSE:Factory git mirror to see how well it goes and how using it feels.

The repo currently needs around 1GB but will slowly grow over time. I did not want to spend the effort of importing all history.

Binary files are replaced by cryptographically secure symlinks into IPFS, and I am currently providing files up to 9MB there.

If you cannot run ipfs, you can still get these files through any of the public gateways, like this:

```shell
curl https://ipfs.io$(readlink packages/a/aubio/aubio-0.4.9.tar.bz2) > OUTPUT
```
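The same lookup can be scripted, for instance to fetch several files from a checkout. The Python sketch below mirrors the curl command: it reads the symlink target and appends it to a gateway URL. It assumes, as that command implies, that each link target is an absolute IPFS path such as `/ipfs/<hash>`; `gateway_url` and `fetch` are hypothetical helpers, not part of the mirror.

```python
import os
import shutil
import urllib.request

GATEWAY = "https://ipfs.io"  # any public IPFS gateway should do


def gateway_url(link_path, gateway=GATEWAY):
    """Turn a mirror symlink into a gateway URL, like the curl example."""
    # readlink works even though the target does not exist locally.
    target = os.readlink(link_path)
    if not target.startswith("/"):
        raise ValueError(f"unexpected link target: {target!r}")
    return gateway.rstrip("/") + target


def fetch(link_path, output_path, gateway=GATEWAY):
    """Download the file behind link_path from the gateway to output_path."""
    url = gateway_url(link_path, gateway)
    with urllib.request.urlopen(url) as resp:
        with open(output_path, "wb") as out:
            shutil.copyfileobj(resp, out)
```

Usage from the root of a checkout would then be `fetch("packages/a/aubio/aubio-0.4.9.tar.bz2", "aubio-0.4.9.tar.bz2")`.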

Some benefits are already obvious:

- It is now much easier to find and download our patches.

- Downloading and searching all of openSUSE is now much faster.

- It even works on Thursdays (when our maintenance window often causes OBS downtimes).

It is meant to be a read-only mirror, so there is no point in opening pull requests on GitHub.

I hope you enjoy it and have a lot of fun…

libv
